Publications Access Graphs

metadata

| Title: | Publications Access Graphs |

| Author: | Ralph B. Holland |

| version: | 1.3.3 |

| Publication Date: | 2026-01-30T01:55Z |

| Updates: | 2026-01-09T10:24Z 1.3.3 - included another access life cycle projection 2026-02-07T07:49Z 1.3.2 - refinements to GP-01 and included LG-0a. 2026-02-07T07:17Z 1.3.1 - elevated LG-0 to GP-0 2026-02-07T07:05Z 1.3.0 - included LG-0 to define up, down, left and right! 2026-02-06T00:00Z 1.2.0 - partial update of projections 2026-01-03T13:39Z 1.1.0 - separated out metadata access from Get Various updates will appear within date-range sections because this document is live editted. |

| Affiliation: | Arising Technology Systems Pty Ltd |

| Contact: | ralph.b.holland [at] gmail.com |

| Provenance: | This is a curation artefact |

| Status: | temporal ongoing updates expected |

Metadata (Normative)

The metadata table immediately preceding this section is CM-defined and constitutes the authoritative provenance record for this artefact.

All fields in that table (including artefact, author, version, date and reason) MUST be treated as normative metadata.

The assisting system MUST NOT infer, normalise, reinterpret, duplicate, or rewrite these fields. If any field is missing, unclear, or later superseded, the change MUST be made explicitly by the human and recorded via version update, not inferred.

This document predates its open licensing.

As curator and author, I apply the Apache License, Version 2.0, at publication to permit reuse and implementation while preventing enclosure or patent capture. This licensing action does not revise, reinterpret, or supersede any normative content herein.

Authority remains explicitly human; no implementation, system, or platform may assert epistemic authority by virtue of this license.

(2025-12-18 version 1.0 - See the Main Page)

Publications access graphs

Scope

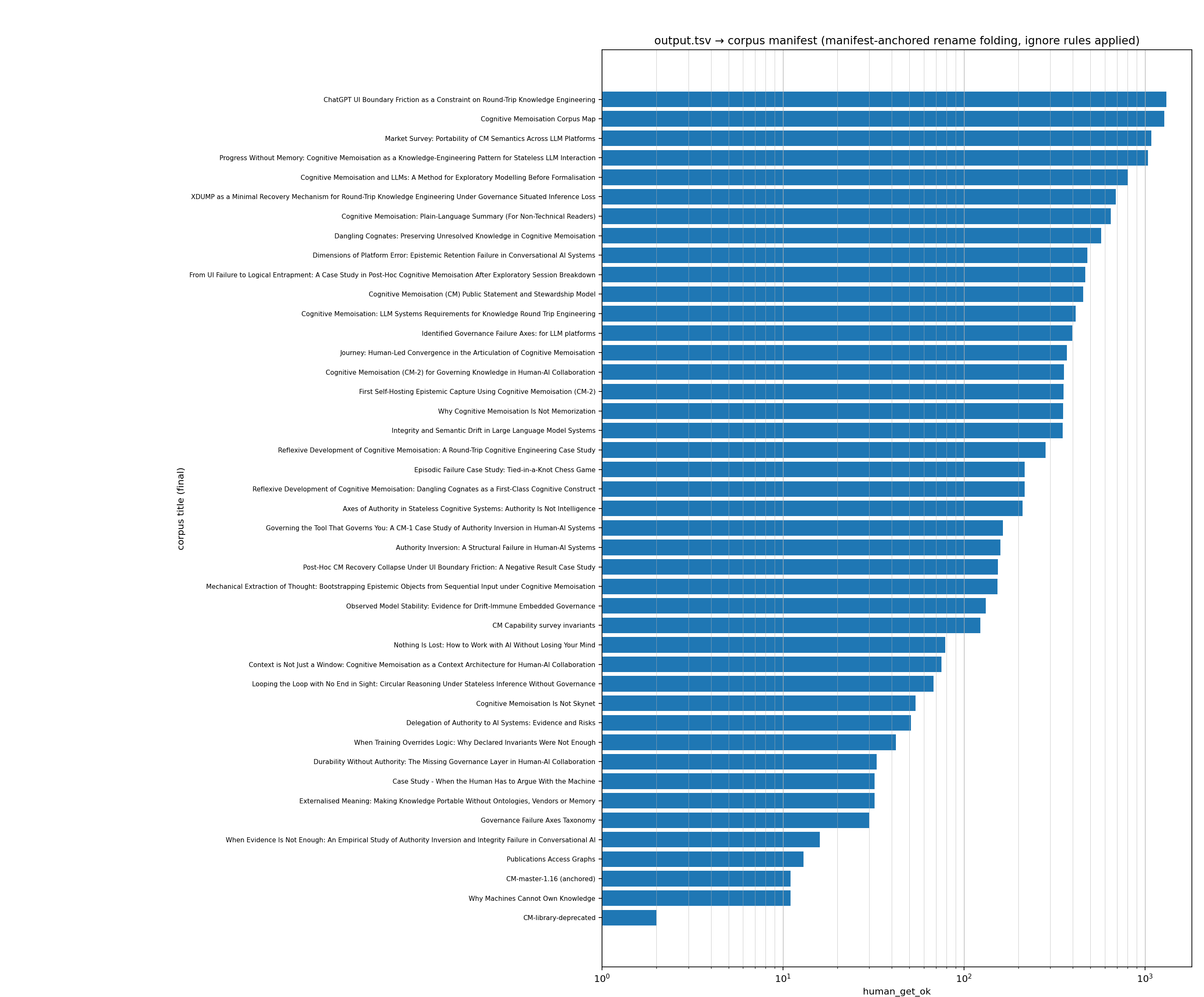

This is a collection of normatives and projections used to evaluate page interest and efficacy. The corpus is maintained as a corrigible set of publications, with lead-in and foundation papers. These lead-ins are easily seen in the projections. A great number of hits come from AI bots and web-bots (only those I permit), and those accesses have overwhelmed the mediawiki page hit counters. These projections therefore filter out that noise.

I felt that users of the corpus may find these projections an honest way of looking at live corpus page landings and updates (outside of Zenodo views and downloads). All public publications on the mediawiki may be viewed via category:public.

Sometimes the curator will want all nginx virtual servers and will supply a rollups.tgz for the whole web-farm; at other times they will supply a rollup that is already filtered by publications.arising.com.au. The model is NOT required to perform virtual server filtering.

Projections

There are graph projections that the curator (and author) finds useful:

- page access lifetime graphlets

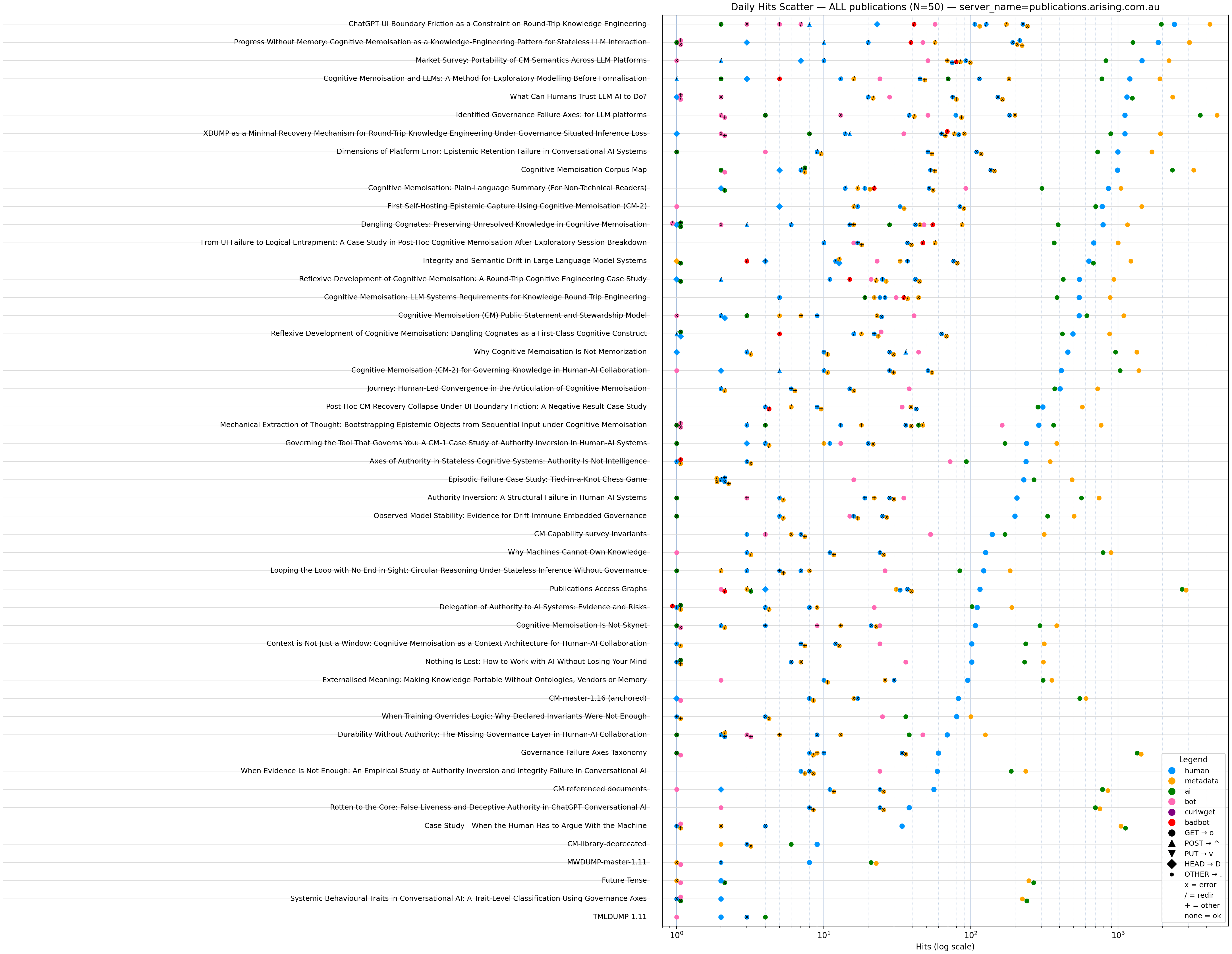

- page total accumulated hits by access category: human_get, metadata, ai, bot and bad-bot (new 2025-01-03)

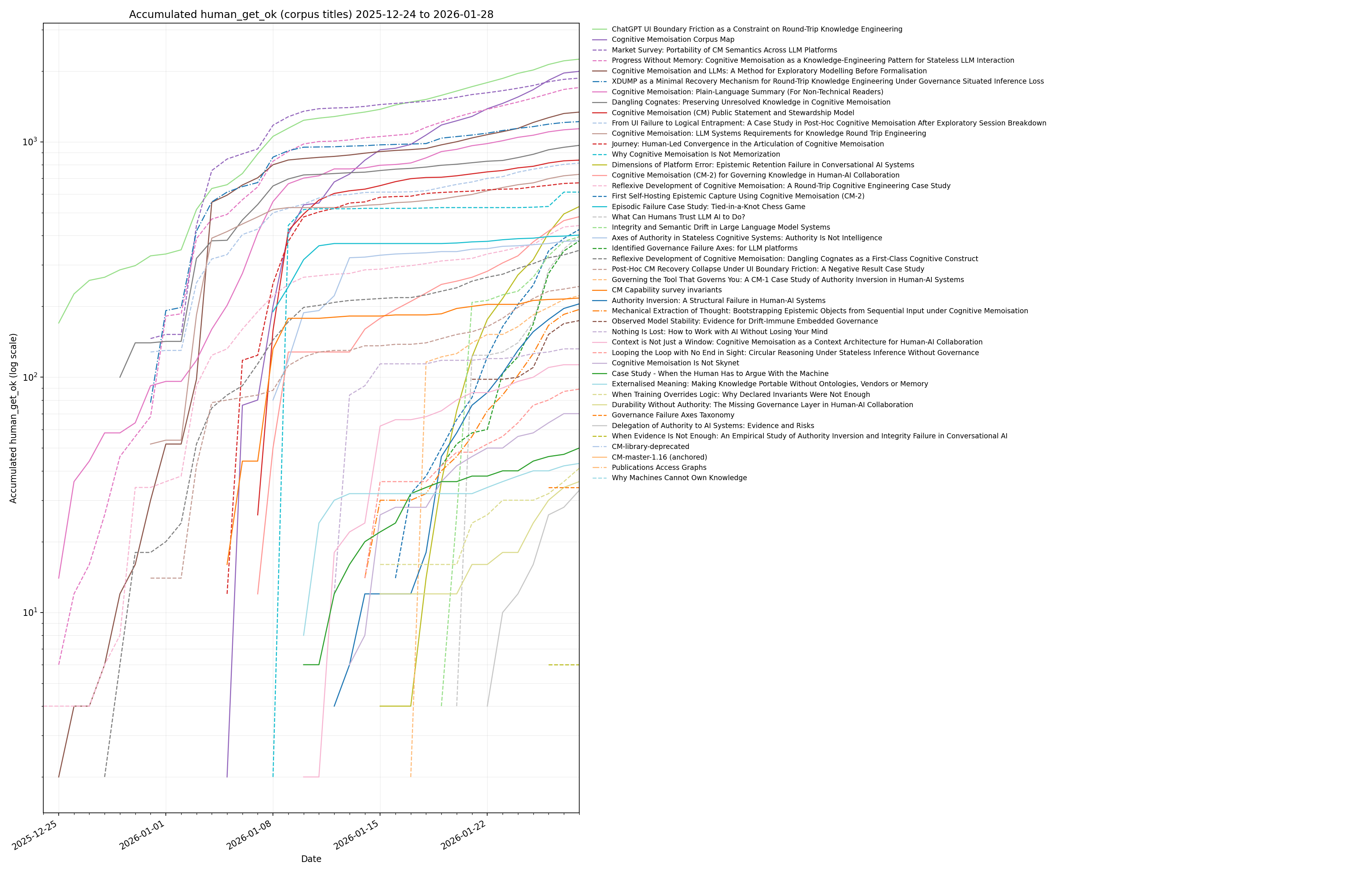

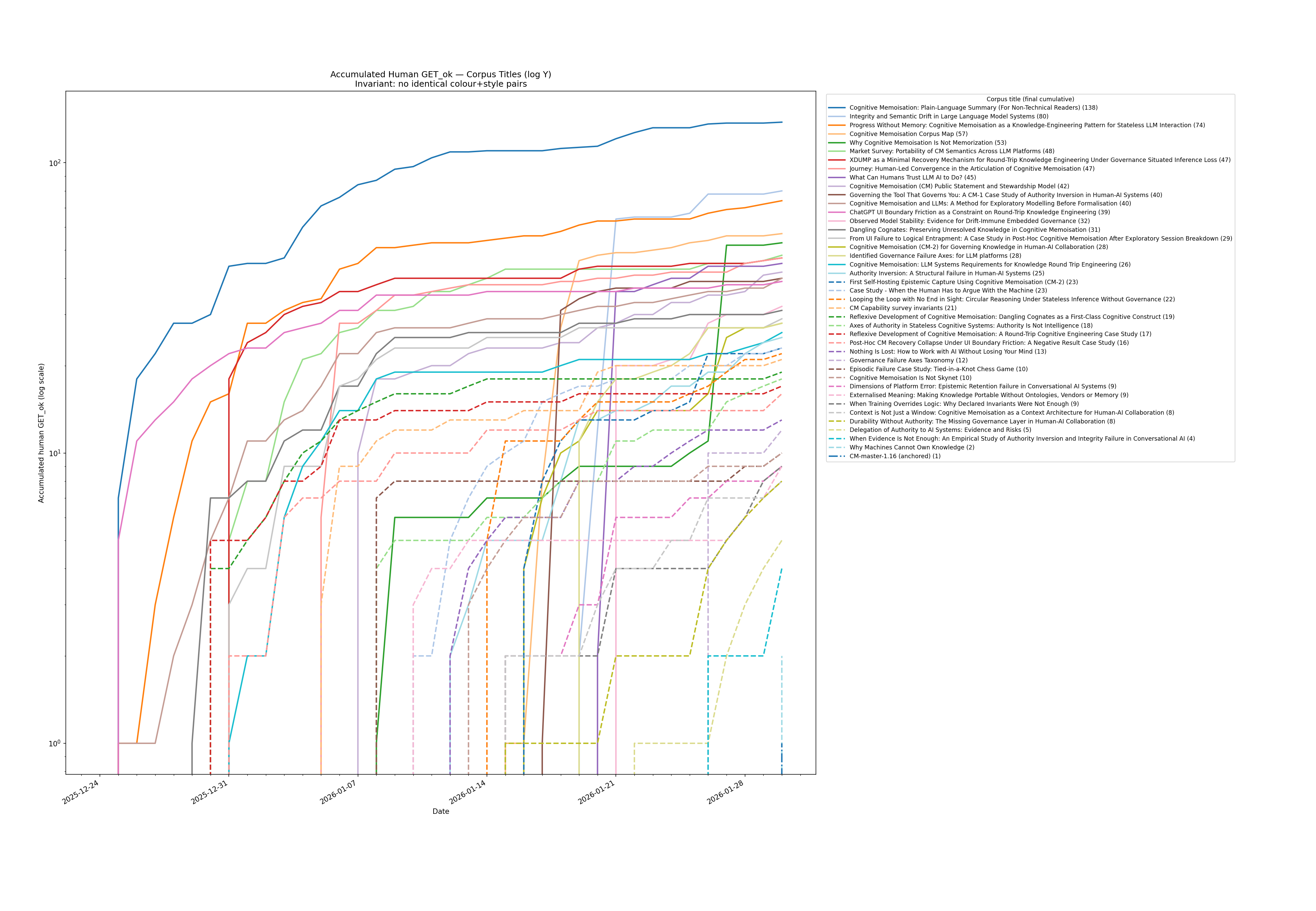

- accumulated human_get time series

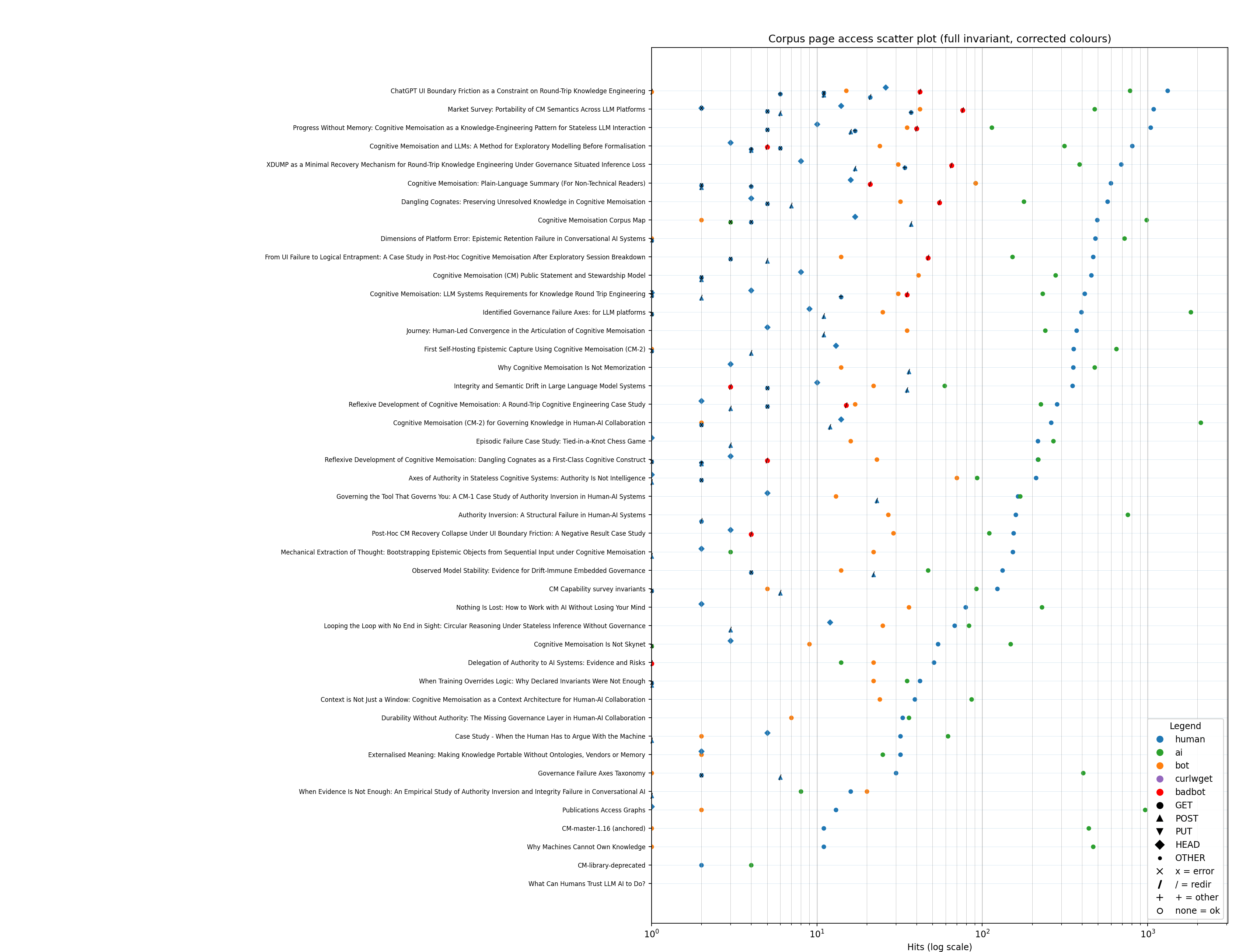

- page_category scatter plot

In Appendix A there is one set of general invariants used for both projections, followed by invariants for specific projections, with the following distinctions:

- Corpus membership constraints apply ONLY to the accumulated human_get time series.

- The daily hits scatter is NOT restricted to corpus titles.

- Main_Page is excluded from the accumulated human_get projection, but not the scatter plot - this is intentional

The projection data were acquired by logrollup of nginx access logs.

The logrollup program is in Appendix B - logrollup.

Appendix C - page_list-verify produces an aggregation for verification of the scatter plot and time series. With the additional categories included, it is quite an insightful projection in its own right.

Access is assumed to be human-centric when the agent cannot be identified and the access is to a real page rather than to metadata; identified robots and AI are relegated to non-human categories. The Bad Bot category covers any bot purposefully excluded from the web-server farm via nginx filtering. The human category also contains unidentified agents, because there is no definitive way to know that any access is really human. The rollups were verified against temporal nginx log analysis, and the characterisation between bot and human is fairly indicative. Metadata access is assumed to be from an agent, since humans do not normally crawl through metadata links.

The accumulated page time series counts from 2025-12-25 and is filtered to pages from the corpus, while the scatter plot is an aggregation across the entire period, categorised by agent, method and status code, and excludes only noise-type access. The scatter plot therefore includes all publication papers that may be of interest (even those outside the corpus).

latest projections

I introduced the deterministic page_list_verify and the extra human_get bar graph for each page as a verification step. It is obvious that the model is having projection difficulties, seemingly unable to follow the normative sections for projection. I have tried many ways to reconcile the output so I can now normatively assert the upper bounds with the latest deterministic code.

metadata accessors

The following data with machines masquerading as human agents were captured from the nginx server logs after bargraph normatives exposed strong signals that automated systems are referencing mediawiki metadata with non-attributable agent strings. The accesses have been categorised as diff, history and version exploration, and when combined fall into the metadata category projected in the page category hit count projections that follow.

Table A - machine access circa 2026-02-03

| IP address | country / network | diff | history | version | titles touched | user agent (representative) |
|---|---|---|---|---|---|---|
| 74.7.227.133 | USA Verizon | 1218 | 8 | 1632 | 14 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 66.249.70.70 | USA Google LLC | 1170 | 45 | 921 | 30 | Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X) |
| 74.7.242.43 | Canada Rogers Communications | 582 | 11 | 627 | 11 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 66.249.70.71 | USA Google LLC | 565 | 32 | 487 | 30 | Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X) |
| 216.73.216.52 | USA Ohio Anthropic, PBC (AWS AS16509) | 554 | 4 | 744 | 6 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 74.7.242.14 | USA CA ISP | 497 | 6 | 499 | 4 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 74.7.241.52 | USA NY ISP | 387 | 6 | 393 | 11 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 66.249.70.64 | USA Google LLC | 350 | 23 | 361 | 31 | Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X) |
| 74.7.227.134 | USA Microsoft | 321 | 5 | 433 | 5 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 216.73.216.213 | USA Amazon | 203 | 2 | 390 | 3 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 74.7.227.23 | USA ISP | 117 | 1 | 157 | 2 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 216.73.216.58 | USA Amazon | 106 | 5 | 174 | 3 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 216.73.216.62 | USA Amazon | 48 | 0 | 65 | 4 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 216.73.216.40 | USA Amazon | 41 | 3 | 65 | 2 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |
| 74.7.227.33 | USA ISP | 33 | 1 | 45 | 2 | Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko) |

access lifetime projection

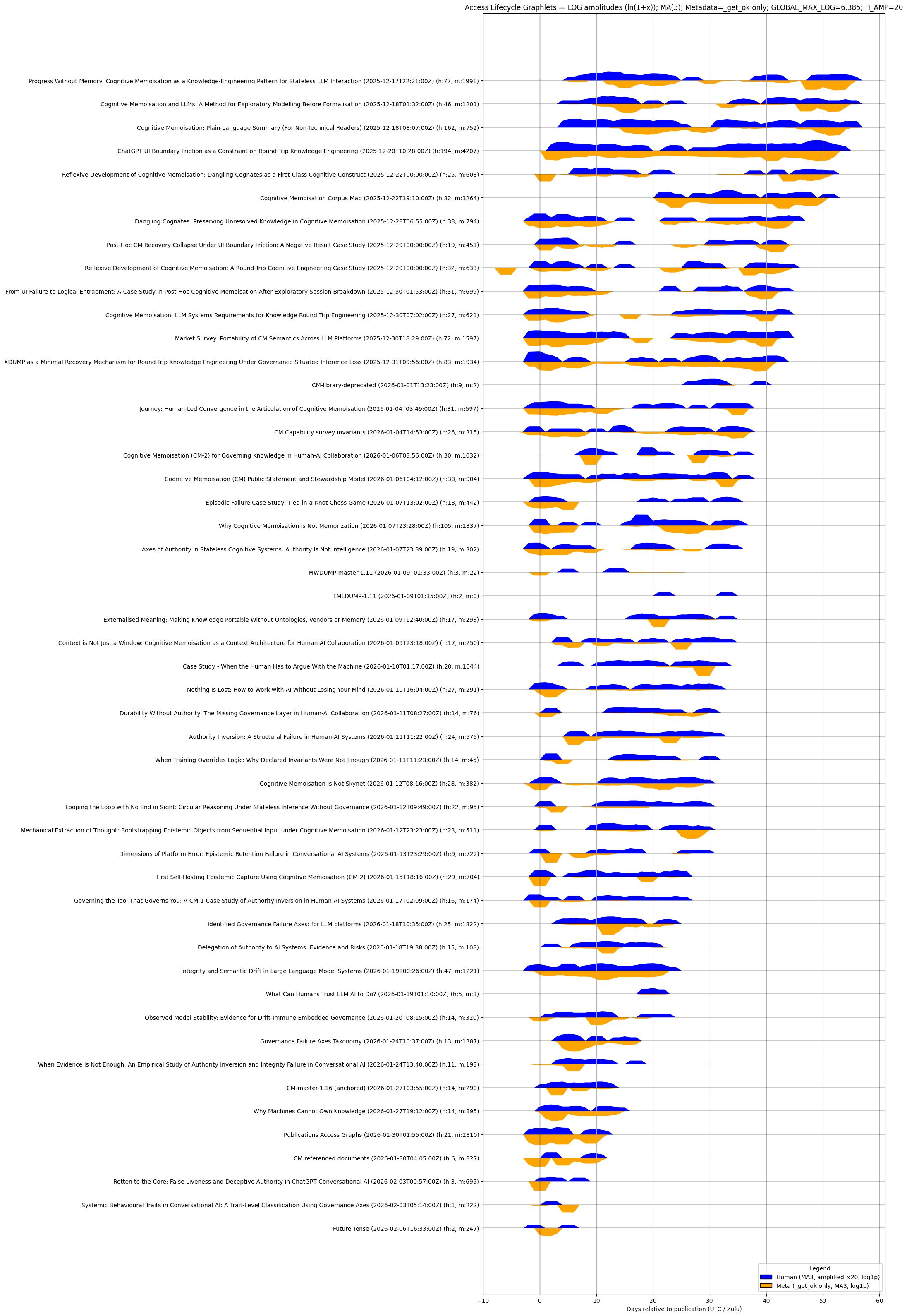

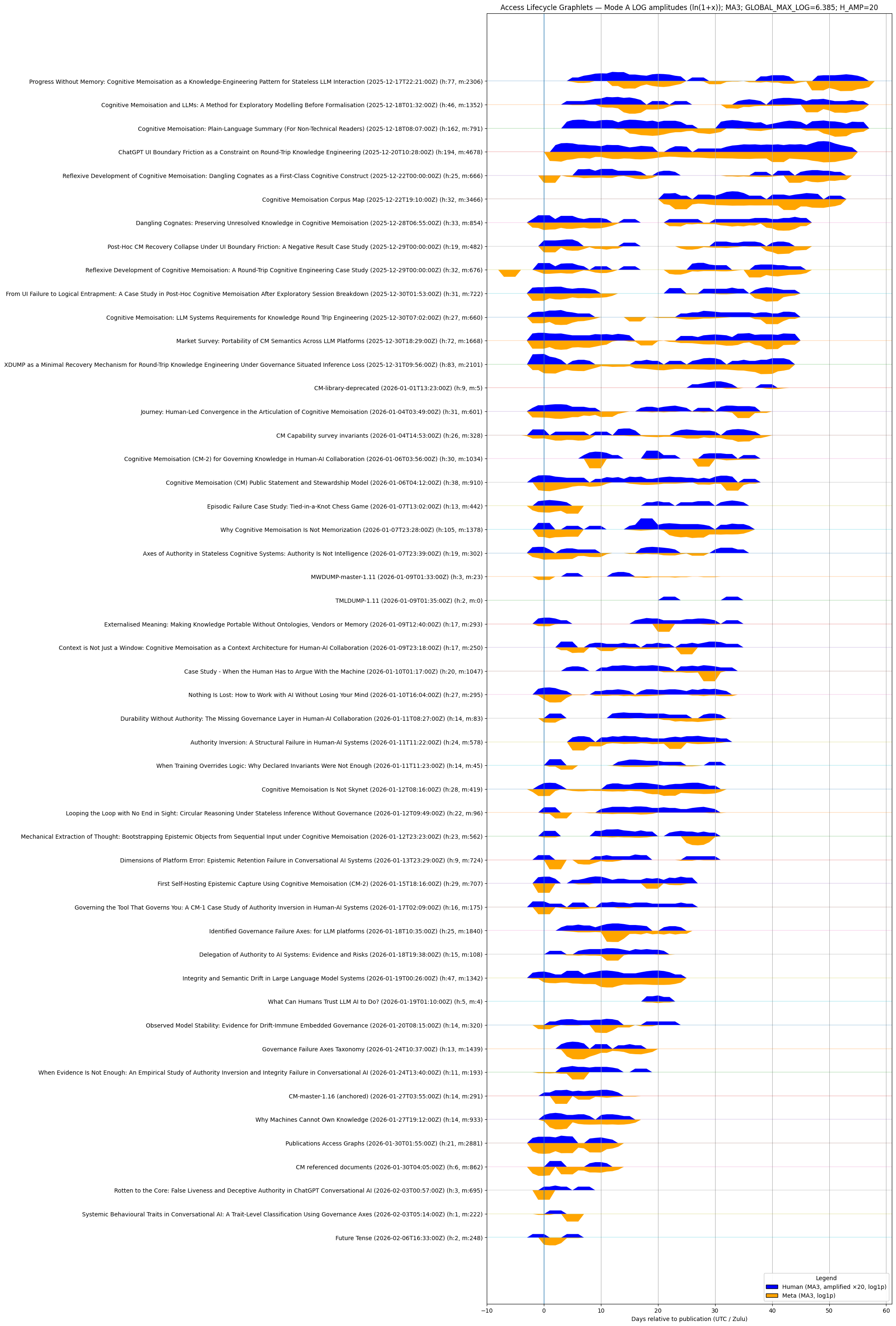

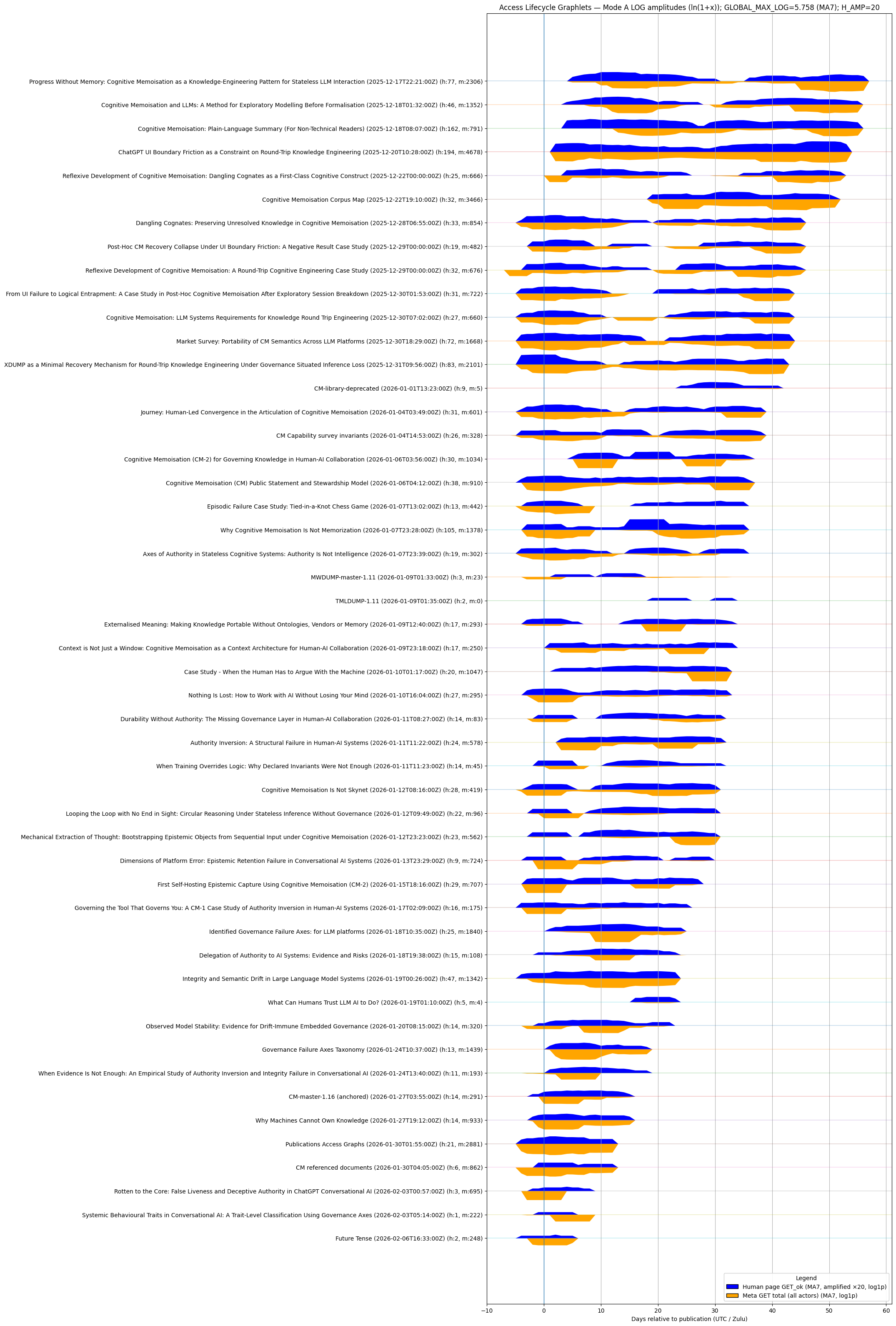

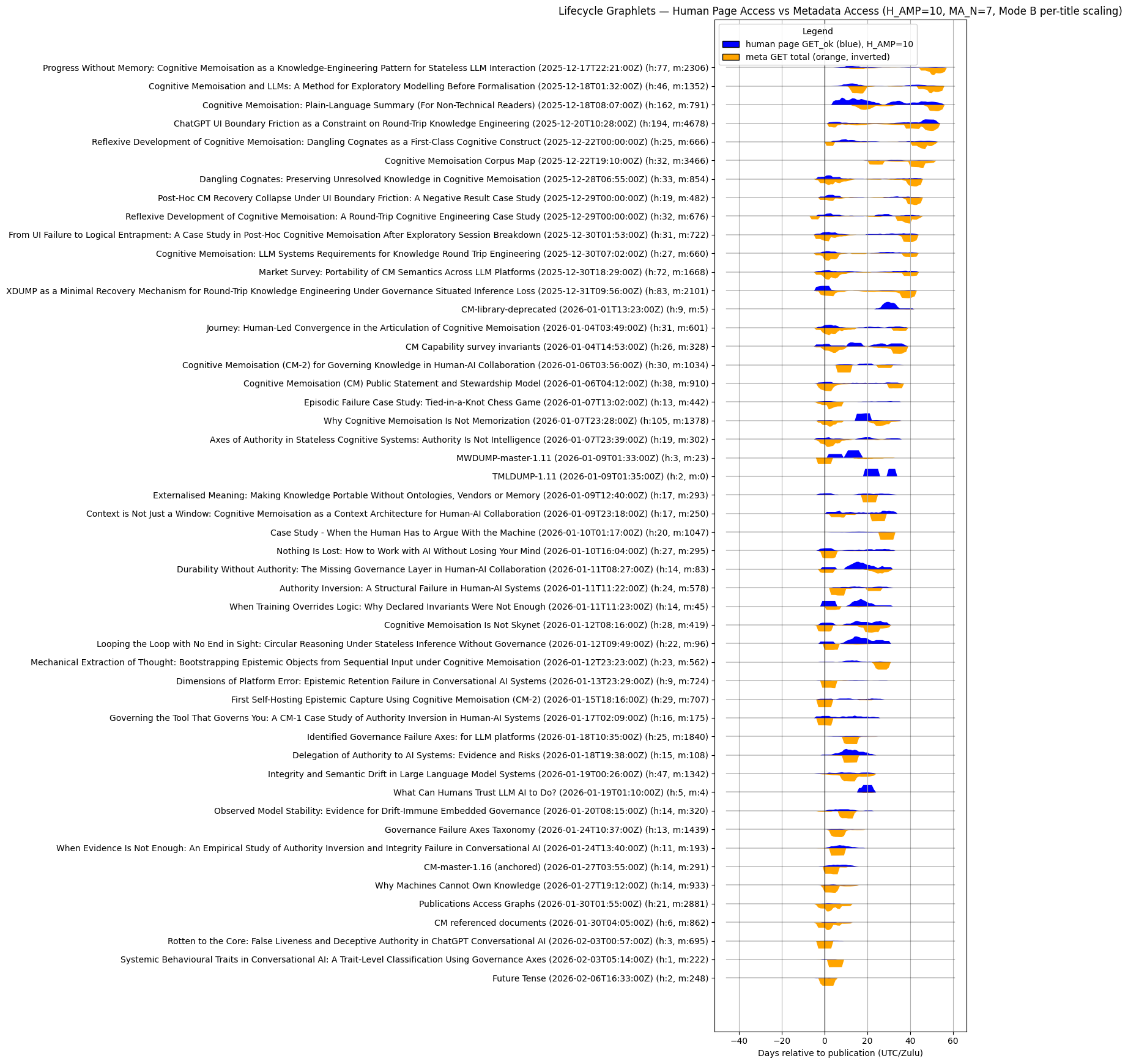

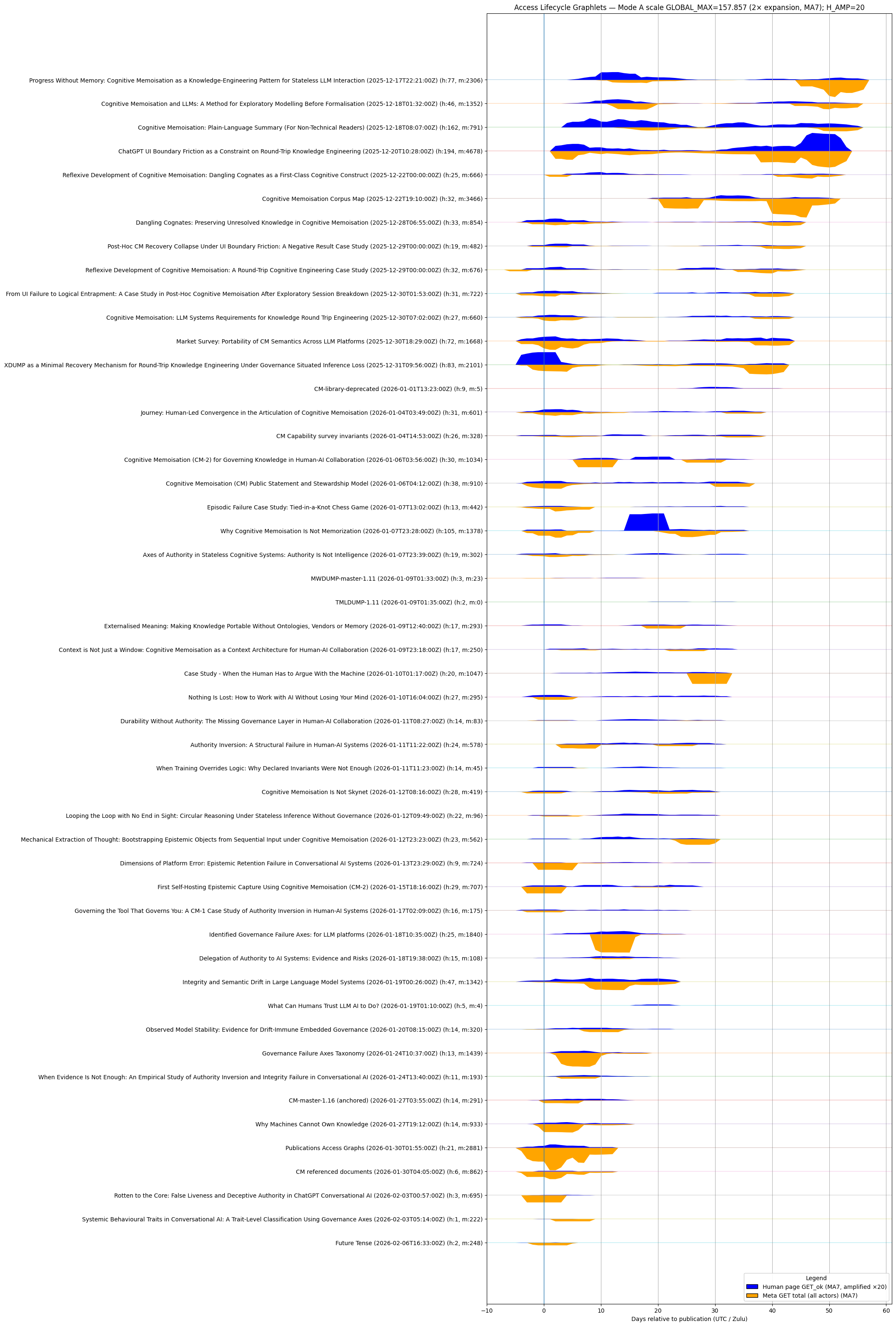

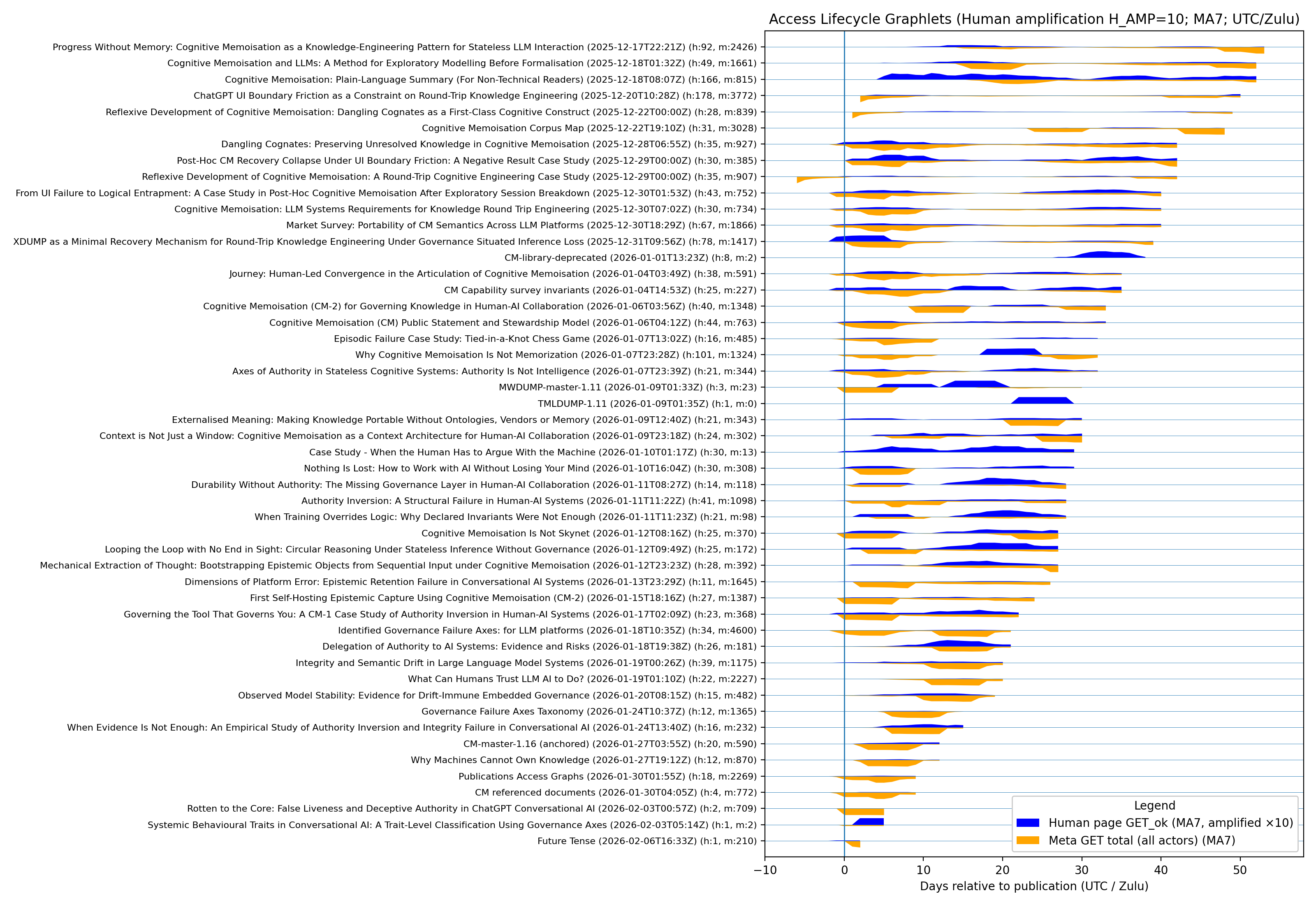

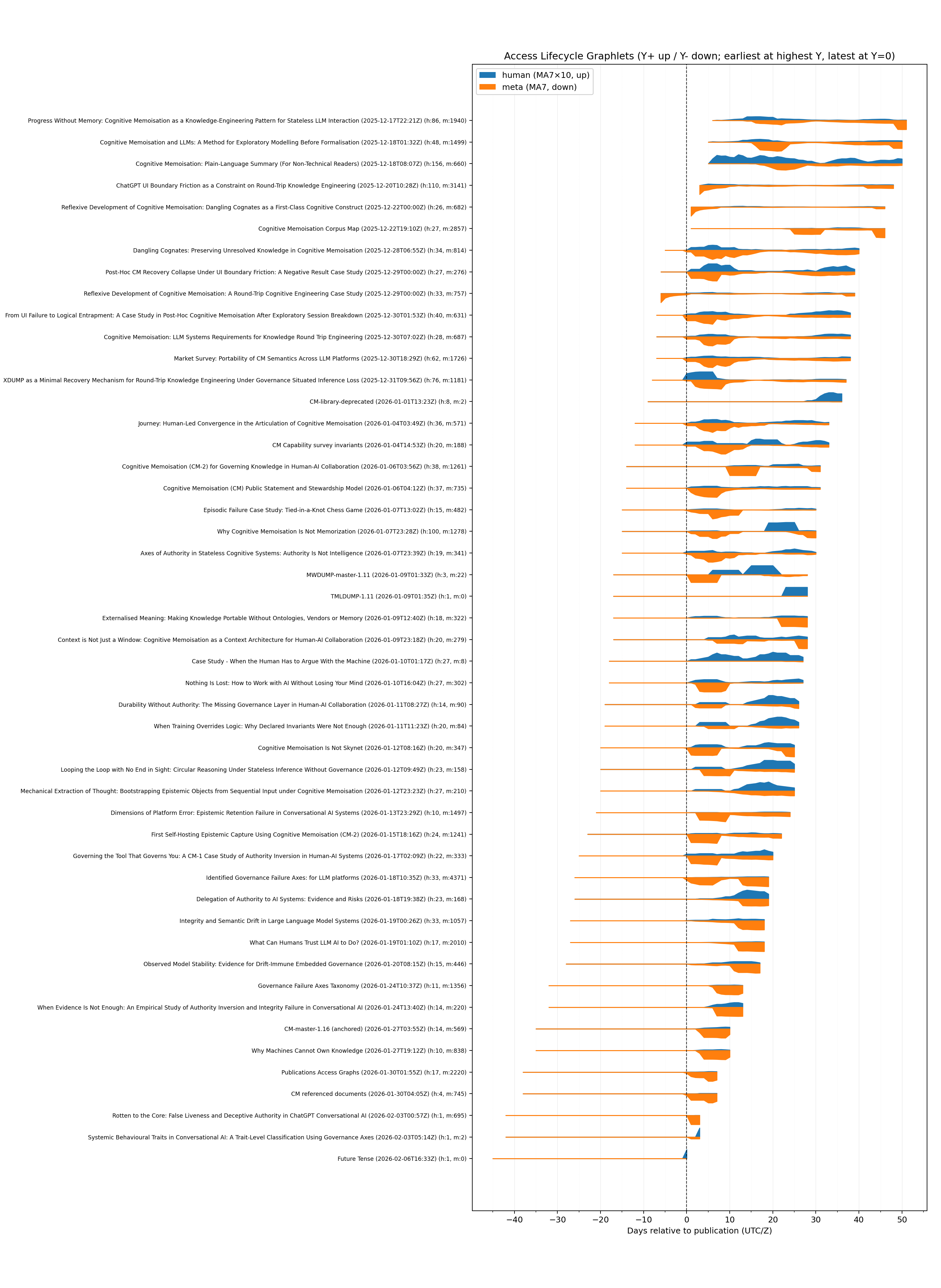

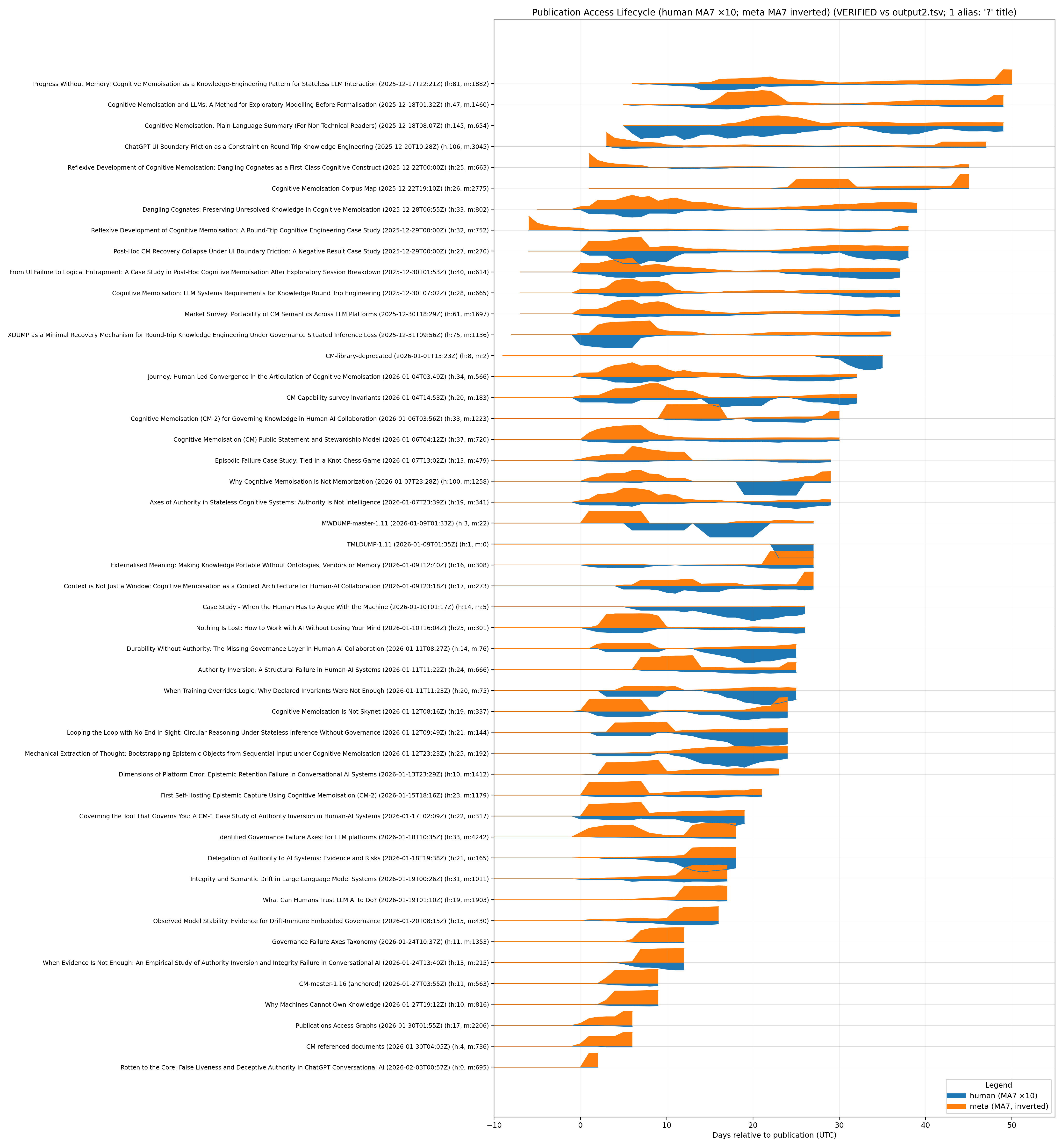

This projection shows how access to each corpus publication rises to a peak and subsequently decays over time, as derived from rollup aggregates. The interesting pages are multi-modal.

For each page category, access is shown as bell curves after Moving Average is applied (I eventually settled on MA3). Blue is human-get regular page access and orange is metadata access.

Pages are normally kept [[category:private]] until published.

2026-02-13 data projections

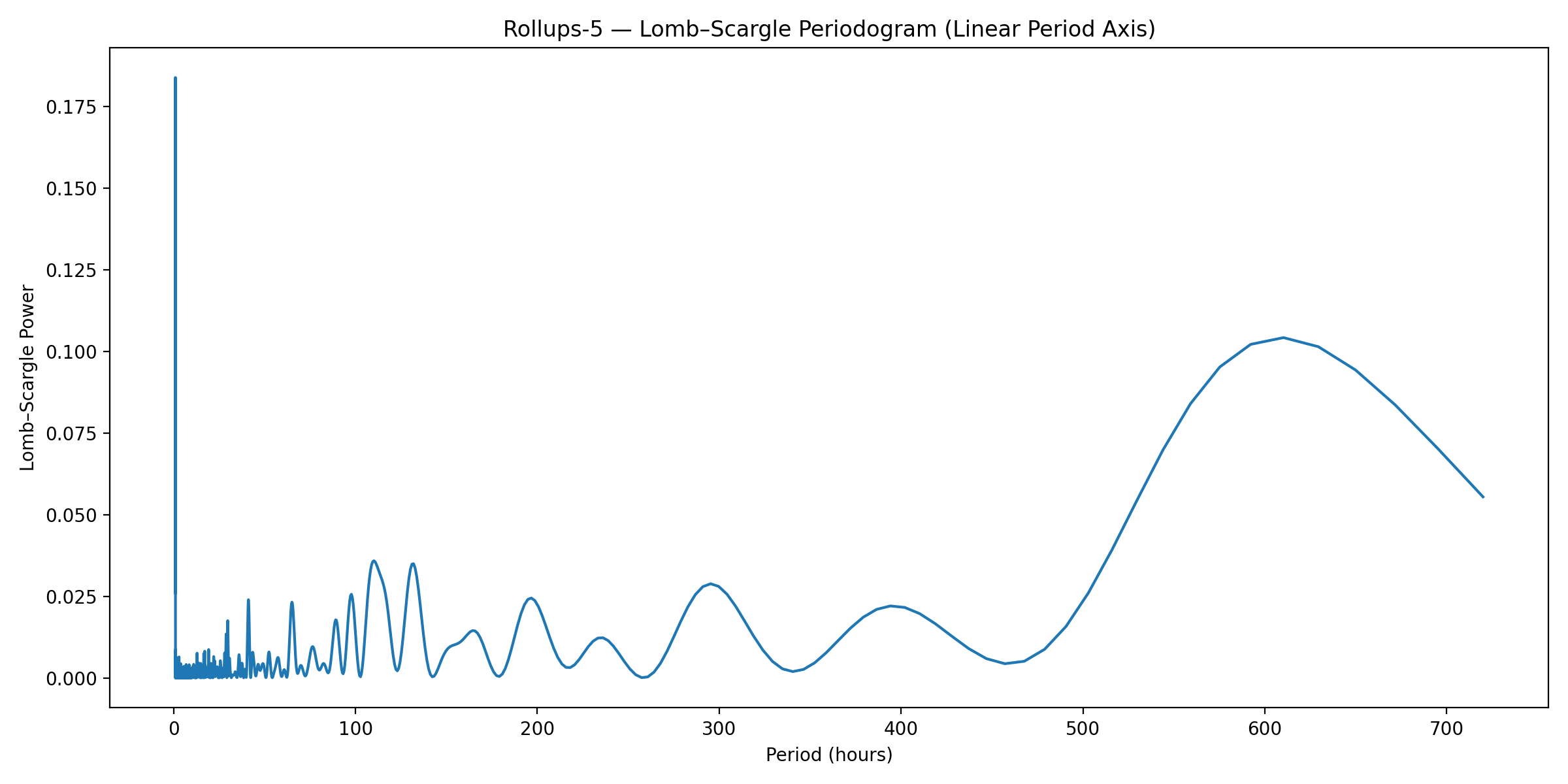

- A Lomb-Scargle periodogram was investigated to see if there is periodicity

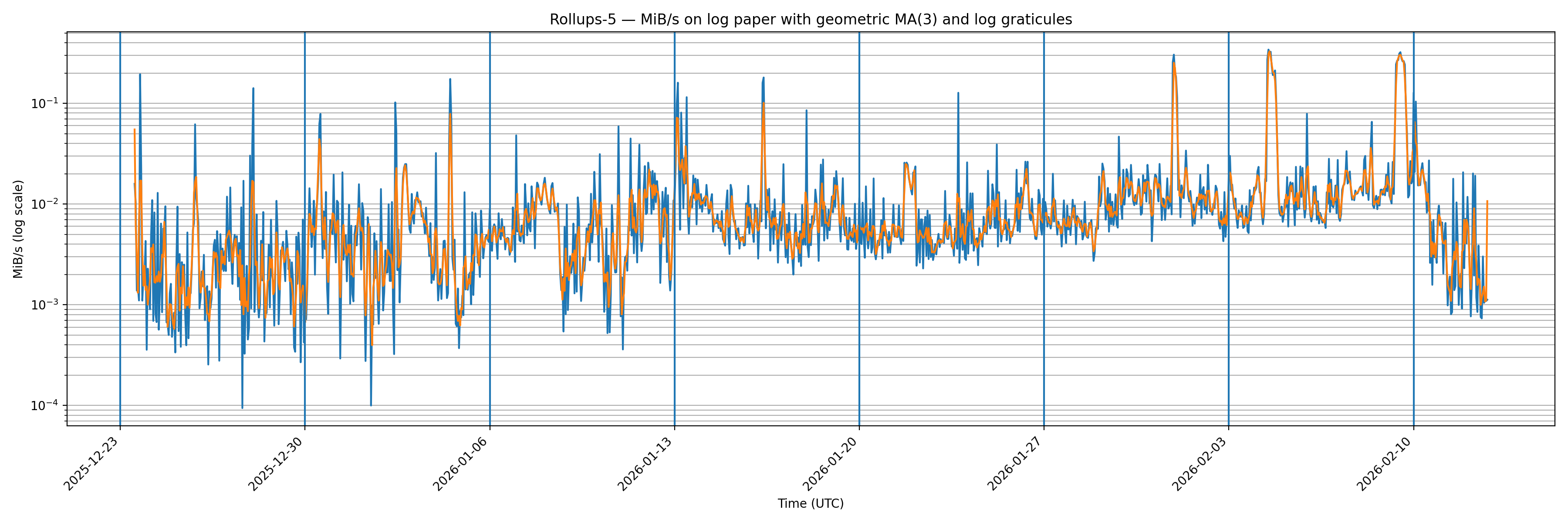

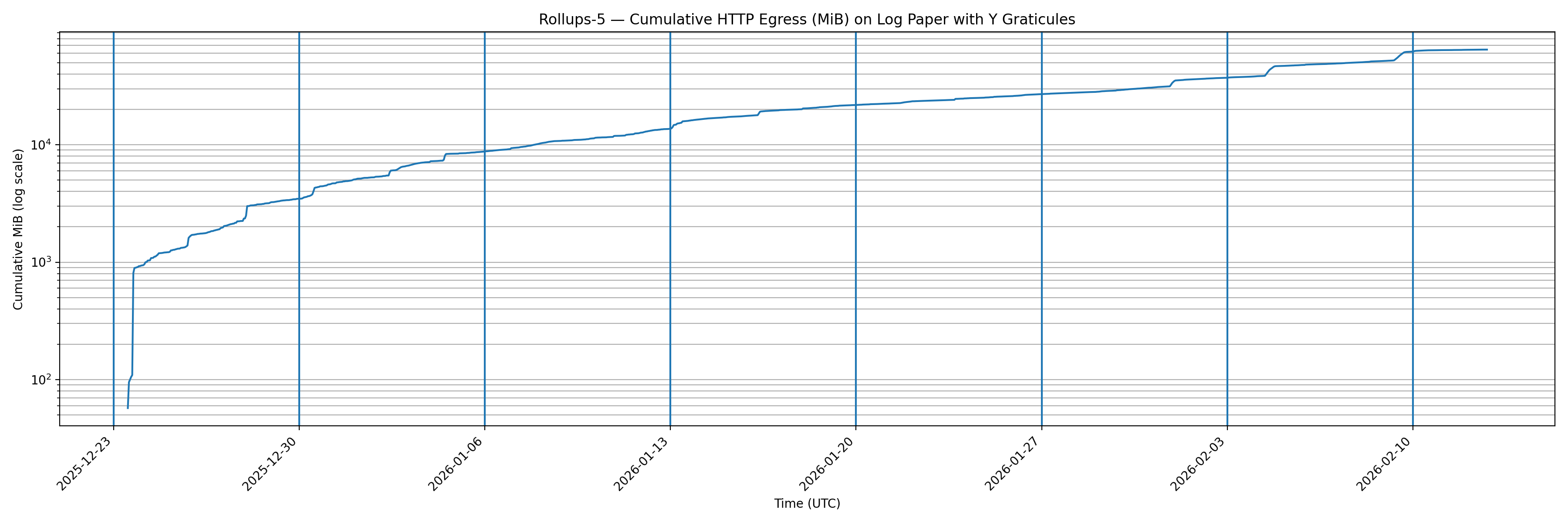

- www page data access rates - shows the data egress time series

- www accumulated data egress

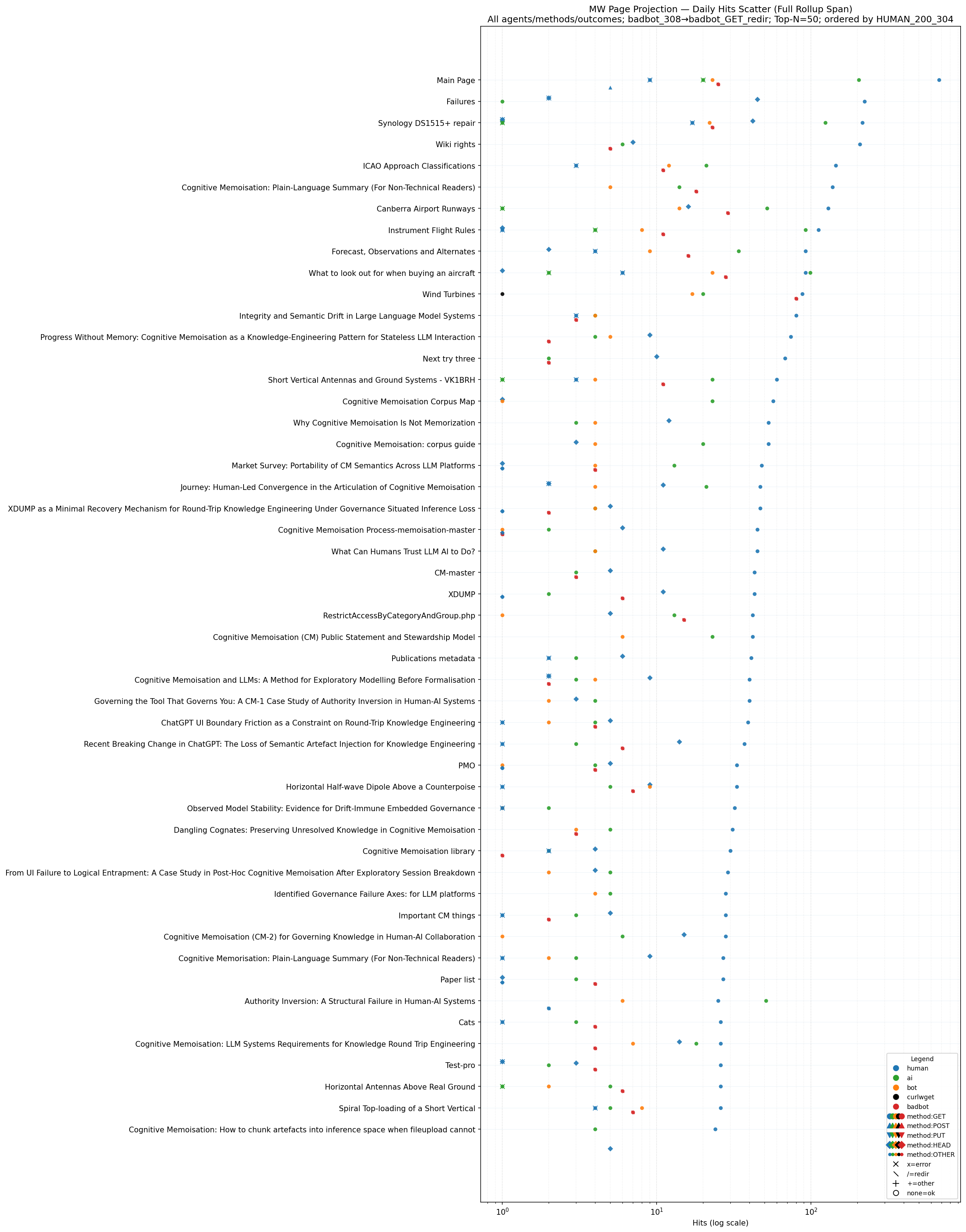

2026-02-13 scatter plot projections

- scatter plot including new metadata category (look at the projection mistakes in the File version history)

- the model decided to

- redact titles to top 50

- clip the category labels at 80 chars when I said the category area was too wide (visually)

- thus more invariants are required to fix the recalcitrant behaviour

- the counts are wrong below, it seems that the model has included metadata access in the human counts

2026-02-13 access lifetime projections

- I was trialing metadata access filtering and sent bots away with a 429. The following graphlets show that effect

- Blue = Human page *_get_ok

- Orange = Metadata *_get_ok only

- MA(3) applied (centered, symmetric — removes the triangular daily artefacts)

- Log amplitude = ln(1 + x)

- H_AMP = 20

- Mode A global scale = GLOBAL_MAX_LOG = 6.385

- Lead capped at −10 days

- MA(3) log

- log amplitudes:

- log transform to amplitudes using log1p(x) = ln(1+x), so zeros remain zero and low counts become visible.

- Human is amplified before logging: H_log = log1p(H_AMP * HUMAN_MA)

- Meta is logged directly: M_log = log1p(META_MA)

- Then Mode A scaling uses a single global log scale GLOBAL_MAX_LOG = 5.758


- Lead capped at −10 days.

- Row spacing retained at 5.20 (no overlap; row_max=2.46).

- Heading reports both: GLOBAL_MAX_LOG and H_AMP.

- Run parameters (reported):

- Lead_min = -10

- GLOBAL_MAX_LOG = 5.758

- H_AMP = 20

- row_spacing = 5.20

- row_max = 2.46

- variable scale

- fixed scale
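The amplitude treatment listed above (centred MA(3), then log1p, with human counts amplified by H_AMP before logging) can be sketched as follows. This is a minimal illustration, not the code that produced the graphlets: the function names are hypothetical, the edge handling of the moving average (averaging over whatever neighbours exist) is an assumption, and the maximum is taken over one title only, whereas GLOBAL_MAX_LOG in the run parameters would be taken across all titles.

```python
import math

H_AMP = 20  # human amplification factor, as reported in the run parameters


def centred_ma3(values):
    """Centred 3-point moving average; at the edges, average the points available (assumption)."""
    out = []
    for i in range(len(values)):
        window = values[max(0, i - 1): i + 2]
        out.append(sum(window) / len(window))
    return out


def log_amplitudes(human_daily, meta_daily, h_amp=H_AMP):
    """Return (H_log, M_log, max_log) for one title's daily counts."""
    human_ma = centred_ma3(human_daily)
    meta_ma = centred_ma3(meta_daily)
    h_log = [math.log1p(h_amp * h) for h in human_ma]  # H_log = log1p(H_AMP * HUMAN_MA)
    m_log = [math.log1p(m) for m in meta_ma]           # M_log = log1p(META_MA)
    max_log = max(h_log + m_log, default=0.0)
    return h_log, m_log, max_log
```

Because log1p(0) = 0, days with no access stay on the baseline, while a single hit is still visible after the transform.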

2026-02-09 access lifetime projections

2026-02-07 access lifetime projections

code

```python
import tarfile, re
from datetime import datetime, timezone
from urllib.parse import unquote
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from io import StringIO
from matplotlib.patches import Patch


def open_tar(path):
    return tarfile.open(path, mode="r:*")


# ---------- Load manifest ----------
with open_tar("/mnt/data/corpus.tgz") as tf:
    mpath = next(n for n in tf.getnames() if n.endswith("manifest.tsv"))
    manifest_text = tf.extractfile(mpath).read().decode("utf-8", errors="replace")

manifest = pd.read_csv(StringIO(manifest_text), sep="\t", dtype=str)


def find_col_ci(df, keys):
    low = {c.lower(): c for c in df.columns}
    for k in keys:
        if k.lower() in low:
            return low[k.lower()]
    return None


title_col = find_col_ci(manifest, ["title"]) or manifest.columns[0]
pub_col = find_col_ci(manifest, ["publication-date", "publication_date", "publication date"]) or (
    manifest.columns[2] if len(manifest.columns) >= 3 else None
)
if pub_col is None:
    raise RuntimeError(f"Publication date column not found. Manifest columns: {list(manifest.columns)}")

manifest["pub_dt"] = pd.to_datetime(manifest[pub_col], utc=True, errors="coerce")
manifest_pub = manifest.dropna(subset=["pub_dt"]).copy()
titles = manifest_pub[title_col].tolist()
pub_dt = dict(zip(manifest_pub[title_col], manifest_pub["pub_dt"]))

# ---------- Load rollups ----------
records = []
with open_tar("/mnt/data/rollups.tgz") as tf:
    for name in tf.getnames():
        if not name.endswith(".tsv"):
            continue
        base = name.split("/")[-1]
        prefix = base.split("-to-")[0]
        bstart = datetime.strptime(prefix, "%Y_%m_%dT%H_%MZ").replace(tzinfo=timezone.utc)
        df = pd.read_csv(tf.extractfile(name), sep="\t")
        df["bucket_start"] = bstart
        records.append(df)

rollups = pd.concat(records, ignore_index=True)
colmap = {c.lower(): c for c in rollups.columns}
path_col = colmap["path"]
human_col = colmap["human_get_ok"]
meta_cols = [c for c in rollups.columns if re.match(r".*_get_.*", c, re.I)]


def norm_title(t):
    t = unquote(str(t))
    t = t.replace("_", " ")
    t = re.sub(r"[–—]", "-", t)
    t = re.sub(r"\s+", " ", t).strip()
    return t


def classify(path):
    if not isinstance(path, str):
        return None, None
    p = unquote(path)
    if "/pub-meta/" in p or "-meta/" in p:
        return norm_title(p.split("/")[-1]), "meta"
    if p.startswith("/pub/"):
        return norm_title(p[len("/pub/"):]), "page"
    return None, None


tmp = rollups[path_col].apply(lambda p: pd.Series(classify(p)))
tmp.columns = ["title", "kind"]
rollups = pd.concat([rollups, tmp], axis=1)
rollups = rollups[rollups["title"].isin(titles)].copy()
rollups["day"] = pd.to_datetime(rollups["bucket_start"], utc=True).dt.floor("D")
rollups[human_col] = pd.to_numeric(rollups[human_col], errors="coerce").fillna(0.0)
for c in meta_cols:
    rollups[c] = pd.to_numeric(rollups[c], errors="coerce").fillna(0.0)

# totals for labels
tot_h = rollups[rollups["kind"] == "page"].groupby("title")[human_col].sum()
meta_tmp = rollups[rollups["kind"] == "meta"].copy()
meta_tmp["meta"] = meta_tmp[meta_cols].sum(axis=1)
tot_m = meta_tmp.groupby("title")["meta"].sum()

# daily aggregates
page = (
    rollups[rollups["kind"] == "page"]
    .groupby(["title", "day"], as_index=False)[human_col]
    .sum()
    .rename(columns={human_col: "human"})
)
meta = meta_tmp.groupby(["title", "day"], as_index=False)["meta"].sum()
daily = pd.merge(page, meta, on=["title", "day"], how="outer").fillna(0.0)
global_days = pd.date_range(daily["day"].min(), daily["day"].max(), freq="D", tz="UTC")

# ---------- Build series ----------
MA_N = 7
H_AMP = 10
ROW_MAX = 0.42  # per-title visual excursion in row-units

rows = []
max_by_title = {}
for t in titles:
    pub = pub_dt[t]
    d = (
        daily[daily["title"] == t]
        .set_index("day")[["human", "meta"]]
        .reindex(global_days, fill_value=0.0)
    )
    ma_h = d["human"].rolling(MA_N, min_periods=1).mean().values.astype(float)
    ma_m = d["meta"].rolling(MA_N, min_periods=1).mean().values.astype(float)
    rel = ((d.index - pub.normalize()).days).astype(int)
    disp_h = H_AMP * ma_h
    disp_m = ma_m
    mx = float(np.nanmax(np.maximum(disp_h, disp_m))) if len(disp_h) else 0.0
    max_by_title[t] = mx
    rows.append(pd.DataFrame({"title": t, "rel": rel, "h": disp_h, "m": disp_m}))

series = pd.concat(rows, ignore_index=True)

# ---------- Ordering + Y mapping (mathematical) ----------
# ascending publication datetime; earliest gets highest baseline Y, latest gets Y=0.
ordered = sorted(titles, key=lambda t: (pub_dt[t], t))
N = len(ordered)


def y0_for_index(i):
    return float((N - 1) - i)


ypos = {t: y0_for_index(i) for i, t in enumerate(ordered)}  # latest -> 0, earliest -> N-1


def label(t):
    return f"{t} ({pub_dt[t].isoformat()}) (h:{int(tot_h.get(t,0))}, m:{int(tot_m.get(t,0))})"


# ---------- Plot (NO axis inversion) ----------
fig, ax = plt.subplots(figsize=(16, max(10, N * 0.45)))
for t in ordered:
    g = series[series["title"] == t].sort_values("rel")
    x = g["rel"].values.astype(float)
    y0 = ypos[t]
    mx = max_by_title.get(t, 0.0)
    scale = (ROW_MAX / mx) if mx and mx > 0 else 0.0
    # baseline
    ax.plot(x, np.full_like(x, y0), lw=0.25, color="black")
    # Blue UP: y0 -> y0 + h
    ax.fill_between(x, y0, y0 + (g["h"].values * scale), color="tab:blue", alpha=1.0)
    # Orange DOWN: y0 -> y0 - m
    ax.fill_between(x, y0, y0 - (g["m"].values * scale), color="tab:orange", alpha=1.0)

ax.axvline(0, ls="--", lw=0.8, color="black")

# Y ticks in the same numeric coordinate system
yticks = [ypos[t] for t in ordered]
ax.set_yticks(yticks)
ax.set_yticklabels([label(t) for t in ordered], fontsize=7)
ax.set_xlabel("Days relative to publication (UTC/Z)")
ax.set_title("Access Lifecycle Graphlets (Y+ up / Y- down; earliest at highest Y, latest at Y=0)")
ax.legend(
    handles=[
        Patch(facecolor="tab:blue", label="human (MA7×10, up)"),
        Patch(facecolor="tab:orange", label="meta (MA7, down)"),
    ],
    loc="upper left",
    framealpha=0.85,
)

# keep margins stable for long labels
plt.subplots_adjust(left=0.40, right=0.98, top=0.95, bottom=0.06)
out = "/mnt/data/lifecycle_graphlets_MATH_Y.png"
plt.savefig(out, dpi=180)
plt.close()
print(out)
```

2026-02-05 access lifetime

Apologies for the model making mistakes with these projections. It had extreme trouble with the invariants; it can take hours and many turns to get the projection right. This one has human and metadata access inverted.

2026-02-03 access lifetime

2026-02-03 accumulated page counts

The page category bar graph has been revised to project page access, metadata access, ai and bot access which provides interesting insight. There are bots masquerading as regular browsers and accessing the metadata pages and walking through the mediawiki page versions.

After today's analysis I can see I need to reproject the others now that I have separated semantic access between page and metadata. The metadata access has been proven to be mostly machine driven and pages of interest have higher machine hits. Machines have been tasked to watch this corpus and the proof is in the above projections.

2026-02-02 accumulated human get

This morning the model has been quite unreliable, as you will see if you look at how the versions of the following projections progress. It doesn't matter how good the normative sections are; it still needs a lot of prompting to get things right. It got the order wrong in the scatter plot, and there is a page with 0 counts because it can't handle the titles properly and can't do a simple match between the TSV file title names and the verbatim manifest names. It also did not style the accumulated projection colours, so the line graph is ambiguous.

It turns out that there is a high percentage of metadata access that comes from machinery that is not identifying as a robot. The new rollups from 2026-02-03 now split that category out.

- 2026-02-02 from 2025-12-15: page bar chart

- 2026-02-02 from 2025-12-15: page access scatter plot

- 2026-02-02 from 2025-12-15: cumulative human_get line plot

2026-02-01 accumulated human get

- 2026-02-01 from 2025-12-15: total counts human_get

- 2026-02-01 from 2025-12-15: page access scatter plot

- 2026-02-01 from 2025-12-15: cumulative human_get line plot

2026-01-30 older projections

These were produced with slightly different invariants and proved problematic - so invariants were updated.

- 2026-01-30 from 2025-12-25: accumulated human_get

- 2026-01-30 from 2025-12-25: page access scatter plot

Appendix A - Corpus Projection Invariants (Normative)

GP-0 Projection Direction Up and Down semantics (MANDATORY, NORMATIVE)

Graph projections are into mathematical spaces (e.g. paper, Cartesian coordinate systems):

- the top of a 2D graph is in the increasing positive Y direction

- to go up means to increase Y value; upwards is increasing Y

- the bottom of a 2D graph is the decreasing Y direction

- to go down means to decrease Y value; downwards is decreasing Y

- the left of a 2D graph is in the decreasing X direction

- to go left means to decrease X value; leftwards is decreasing X

- the right of a 2D graph is in the increasing X direction

- to go right means to increase X value; rightwards is increasing X

The human will think and relate via these conventions; do not mix these semantics with whatever you do to make the tooling project.

E.g.

- human bell curves must be blue and point upwards i.e. the peaks have projected Y values above the baseline.

- metadata bell curves must be orange and point downwards i.e. the peaks have projected Y value below the baseline.

GP-0a: Projection Direction Tooling Override Prohibition (MANDATORY, NORMATIVE)

The assisting system, plotting library, or UI layer MUST NOT override, reinterpret, or “helpfully adjust” any of the following governed properties:

- Axis direction (NO y-axis inversion; NO automatic axis flipping)

- Row ordering (publication datetime ascending only)

- Scaling mode (must be exactly the declared mode; no auto-rescaling)

- Tick placement or gridline intervals (must match declared steps)

- Label content, ordering, or truncation (no elision, wrapping, or abbreviation unless explicitly specified)

- Margins (must be computed to fit widest label; no extra whitespace added)

- Opacity (alpha MUST remain 1.0)

If any component of the toolchain cannot honour these constraints exactly, the projection MUST FAIL EXPLICITLY rather than silently substituting alternative behaviour.

This prohibition applies equally to:

- plotting backends

- UI renderers

- export pipelines

- downstream “pretty-print” or “auto-layout” passes.
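The GP-0 direction rules and the fail-explicitly requirement above can be sketched as a check run on the projected Y values before rendering. This is a minimal sketch under stated assumptions: the function name and its arguments are hypothetical, and it checks only curve direction against the baseline, not the other governed properties.

```python
def check_graphlet_direction(baseline, human_y, meta_y):
    """Raise ValueError if any projected point violates the GP-0 direction semantics.

    baseline: Y value of the row baseline.
    human_y:  projected Y values of the (blue) human curve; must be >= baseline.
    meta_y:   projected Y values of the (orange) metadata curve; must be <= baseline.
    """
    for y in human_y:
        if y < baseline:
            raise ValueError(f"human point {y} is below baseline {baseline}")
    for y in meta_y:
        if y > baseline:
            raise ValueError(f"metadata point {y} is above baseline {baseline}")
    return True
```

Raising an exception, rather than clamping or flipping values, is what makes the failure explicit. When Matplotlib is the plotting backend, `ax.yaxis_inverted()` can additionally be used to confirm that the Y axis has not been flipped.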

Authority and Governance

- The projections are curator-governed and MUST be reproducible from declared inputs alone.

- The assisting system MUST NOT infer, rename, paraphrase, merge, split, or reorder titles beyond the explicit rules stated here.

- The assisting system MUST NOT optimise for visual clarity at the expense of semantic correctness.

- Any deviation from these invariants MUST be explicitly declared by the human curator with a dated update entry.

Authoritative Inputs

- Input A: Hourly rollup TSVs produced by logrollup tooling.

- Input B: Corpus bundle manifest (corpus/manifest.tsv).

- Input C: Full temporal range present in the rollup set (no truncation).

- Input D: When supplied page_list_verify aggregation verification counts (e.g. output.csv)

Title Carrier Detection

Title Carriers (Normative)

A request contributes to a target title if a title can be extracted from any of the following carriers, in priority order:

Carrier 1 — Direct page path

If path matches /<x>/<title> then <title> is a candidate title.

Carrier 2 — Canonical index form

If path matches /<x-dir>/index.php?<query>:

Use title=<title> if present.

Else MAY use page=<title> if present.

Carrier 3 — Special-page target title (new)

If path matches any of the following Special pages (case-insensitive):

Special:WhatLinksHere

Special:RecentChangesLinked

Special:LinkSearch

Special:PageInformation

Special:CiteThisPage

Special:PermanentLink

Then the target title MUST be extracted from one of these query parameters (in priority order):

target=<title>

page=<title>

title=<title>

If none are present, the record MUST be attributed to the Special page itself (i.e. canonical title = Special:WhatLinksHere), not discarded.

Carrier 4 — Fallback (no title present)

If no carrier yields a title:

The request MUST be accumulated under a non-title resource key, using canonicalised path (see “Non-title resource handling”).
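The four carriers can be sketched with the standard library as below. This is an illustrative sketch, not the logrollup implementation: `/pub/` and `/pub-dir/index.php` are assumed values for `/<x>/` and `/<x-dir>/index.php`, the function names are hypothetical, and the returned title is still raw (normalisation is a separate step).

```python
from urllib.parse import parse_qs, unquote, urlsplit

PAGE_ROOT = "/pub/"                  # assumed value of /<x>/
INDEX_PATH = "/pub-dir/index.php"    # assumed value of /<x-dir>/index.php

SPECIAL_PAGES = {
    "special:whatlinkshere", "special:recentchangeslinked", "special:linksearch",
    "special:pageinformation", "special:citethispage", "special:permanentlink",
}


def first_param(query, names):
    """Return the first value of the first parameter in `names` that is present."""
    for name in names:
        values = query.get(name)
        if values:
            return values[0]
    return None


def extract_title(raw_path):
    """Return ('title', raw_title) or ('resource', path) for one request path."""
    parts = urlsplit(raw_path)       # separates path, query and #fragment
    path = unquote(parts.path)
    query = parse_qs(parts.query)    # parse_qs URL-decodes the values

    candidate = None
    if path.startswith(PAGE_ROOT) and len(path) > len(PAGE_ROOT):
        candidate = path[len(PAGE_ROOT):]                    # Carrier 1
    elif path == INDEX_PATH:
        candidate = first_param(query, ["title", "page"])    # Carrier 2

    if candidate is not None and candidate.lower() in SPECIAL_PAGES:
        # Carrier 3: prefer the target title, else keep the Special page itself.
        target = first_param(query, ["target", "page", "title"])
        return ("title", target if target else candidate)
    if candidate is not None:
        return ("title", candidate)
    return ("resource", path)                                # Carrier 4
```

For example, `/pub/Special:WhatLinksHere?target=Foo_Bar` is attributed to `Foo_Bar`, while the same path without a query is attributed to `Special:WhatLinksHere` rather than discarded.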

Path → Title Extraction

- A rollup record contributes to a page only if a title can be extracted by these rules:

- If path matches /pub/<title>, then <title> is the candidate.

- If path matches /pub-dir/index.php?<query>, the title MUST be taken from title=<title>.

- If title= is absent, page=<title> MAY be used.

- Otherwise, the record MUST NOT be treated as a page hit.

- URL fragments (#…) MUST be removed prior to extraction.

Title Normalisation

- URL decoding MUST occur before all other steps.

- Underscores (_) MUST be converted to spaces.

- UTF-8 dashes (–, —) MUST be converted to ASCII hyphen (-).

- Whitespace runs MUST be collapsed to a single space and trimmed.

- After normalisation, the title MUST exactly match a manifest title to remain eligible.

- Main Page MUST be excluded from this projection.
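The normalisation order above can be illustrated with a short sketch. The function names are hypothetical, and `manifest_titles` is assumed to be a set of manifest titles that have already been normalised by the same function.

```python
import re
from urllib.parse import unquote


def normalise_title(raw):
    """Apply the normalisation steps in the mandated order."""
    title = unquote(raw)                           # 1. URL-decode first
    title = title.replace("_", " ")                # 2. underscores -> spaces
    title = re.sub("[\u2013\u2014]", "-", title)   # 3. en/em dash -> ASCII hyphen
    title = re.sub(r"\s+", " ", title).strip()     # 4. collapse and trim whitespace
    return title


def is_eligible(raw, manifest_titles):
    """True if the normalised title is a manifest title other than Main Page."""
    title = normalise_title(raw)
    return title != "Main Page" and title in manifest_titles
```

Decoding first matters: an encoded dash such as `%E2%80%93` only becomes visible to the dash rule after URL decoding.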

Renamed page/title treatments

Ignore Pages

Ignore the following pages:

- DOI-master .* (deleted)

- Cognitive Memoisation Corpus Map (provisional)

- Cognitive Memoisation library (old)

Normalisation Overview

A list of page renames / redirects and mappings that appear within the mediawiki web-farm nginx access logs and rollups.

mediawiki pages

- all mediawiki URLs that contain _ can be mapped to page title with a space ( ) substituted for the underscore (_).

- URLs may be URL encoded as well.

- mediawiki rewrites page to /pub/<title>

- mediawiki uses /pub-dir/index.php? parameters to refer to <title>

- mediawiki: the normalised resource part of a URL path, or parameter=<title>, edit=<title> etc means the normalised title is the same target page.

curator action

Some former page titles contained en dashes, em dashes or similar.

- dash rendering: en dash (–), em dash (—) or similar UTF-8 dashes were replaced with the ASCII hyphen (-)

renamed pages - under curation

- First Self-Hosting Epistemic Capture Using Cognitive Memoisation (CM-2) (redirected from):

- Cognitive_Memoisation_(CM-2):_A_Human-Governed_Protocol_for_Knowledge_Governance_and_Transport_in_AI_Systems)

- Cognitive Memoisation (CM-2) for Governing Knowledge in Human-AI Collaboration (CM-2) was formerly:

- Let's_Build_a_Ship_-_Cognitive_Memoisation_for_Governing_Knowledge_in_Human_-_AI_Collabortion

- Cognitive_Memoisation_for_Governing_Knowledge_in_Human-AI_Collaboration

- Cognitive_Memoisation_for_Governing_Knowledge_in_Human–AI_Collaboration

- Cognitive_Memoisation_for_Governing_Knowledge_in_Human_-_AI_Collaboration

- Authority Inversion: A Structural Failure in Human-AI Systems was formerly:

- Authority_Inversion:_A_Structural_Failure_in_Human–AI_Systems

- Authority_Inversion:_A_Structural_Failure_in_Human-AI_Systems

- Journey: Human-Led Convergence in the Articulation of Cognitive Memoisation was formerly:

- Journey:_Human–Led_Convergence_in_the_Articulation_of_Cognitive_Memoisation (en dash)

- Cognitive Memoisation Corpus Map was formerly:

- Cognitive_Memoisation:_corpus_guide

- Cognitive_Memoisation:_corpus_guide'

- Context is Not Just a Window: Cognitive Memoisation as a Context Architecture for Human-AI Collaboration (CM-1) was formerly:

- Context_Is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human–AI_Collaboration

- Context_Is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human–AI_Collaborationn

- Context_is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human-AI_Collaboration

- Context_Is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human-AI_Collaborationn

- Context_is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human_-_AI_Collaboration

- Context_is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human_–_AI_Collaboration

- Progress Without Memory: Cognitive Memoisation as a Knowledge-Engineering Pattern for Stateless LLM Interaction was formerly:

- Progress_Without_Memory:_Cognitive_Memoisation_as_a_Knowledge_Engineering_Pattern_for_Stateless_LLM_Interaction

- Progress_Without_Memory:_Cognitive_Memoisation_as_a_Knowledge-Engineering_Pattern_for_Stateless_LLM_Interaction

- Cognitive_Memoisation_and_LLMs:_A_Method_for_Exploratory_Modelling_Before_Formalisation'

- Cognitive_Memoisation_and_LLMs:_A_Method_for_Exploratory_Modelling_Before_Formalisation

- Cognitive_Memorsation:_Plain-Language_Summary_(For_Non-Technical_Readers)'

- What Can Humans Trust LLM AI to Do? has been output in the rollups and verification output.tsv as 'What Can Humans Trust LLM AI to Do' without the ? due to invalid path processing in the Perl code

- the title/publication is to be considered the same as the title without the question mark.

Wiki Farm Canonicalisation (Mandatory)

- Each MediaWiki instance in a farm is identified by a (vhost, root) pair.

- Each instance exposes paired URL forms:

- /<x>/<Title>

- /<x-dir>/index.php?title=<Title>

- For the bound vhost:

- /<x>/ and /<x-dir>/index.php MUST be treated as equivalent roots.

- All page hits MUST be folded to a single canonical resource per title.

- Canonical resource key:

- (vhost, canonical_title)

Resource Extraction Order (Mandatory)

- URL-decode the request path.

- Extract title candidate:

- If path matches ^/<x>/<title>, extract <title>.

- If path matches ^/<x-dir>/index.php?<query>:

- Use title=<title> if present.

- Else MAY use page=<title> if present.

- Otherwise the record is NOT a page resource.

- Canonicalise title:

- "_" → space

- UTF-8 dashes (–, —) → "-"

- Collapse whitespace

- Trim leading/trailing space

- Apply namespace exclusions.

- Apply infrastructure exclusions.

- Apply canonical folding.

- Aggregate.

Infrastructure Exclusions (Mandatory)

Exclude:

- /

- /robots.txt

- Any path containing "sitemap"

- Any path containing /resources or /resources/

- /<x-dir>/index.php

- /<x-dir>/load.php

- /<x-dir>/api.php

- /<x-dir>/rest.php/v1/search/title

Exclude static resources by extension:

- .png .jpg .jpeg .gif .svg .ico .webp
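A predicate for these exclusions might look like the sketch below. It assumes `/pub-dir` for `<x-dir>`, treats the "sitemap" and "/resources" matches as case-insensitive, and treats a bare `index.php` as infrastructure only when it carries no `title=` or `page=` parameter, since such requests are title carriers; all names are hypothetical.

```python
from urllib.parse import parse_qs, unquote, urlsplit

X_DIR = "/pub-dir"  # assumed value of <x-dir>
STATIC_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".webp")
EXACT_PATHS = {
    "/",
    "/robots.txt",
    X_DIR + "/load.php",
    X_DIR + "/api.php",
    X_DIR + "/rest.php/v1/search/title",
}


def is_infrastructure(raw_path):
    """Return True if the request should be excluded as infrastructure noise."""
    parts = urlsplit(raw_path)
    path = unquote(parts.path)
    lower = path.lower()
    if path == X_DIR + "/index.php":
        # A bare index.php is infrastructure; one carrying a title is a page hit.
        query = parse_qs(parts.query)
        return not (query.get("title") or query.get("page"))
    if path in EXACT_PATHS:
        return True
    if "sitemap" in lower or "/resources" in lower:
        return True
    return lower.endswith(STATIC_EXTENSIONS)
```

Checking the path component only (not the query string) keeps a query such as `?modules=x` from hiding an excluded endpoint.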

Order of normalisation (mandatory)

This is the only compliant order of operations under these invariants:

- Load corpus manifest

- Normalise manifest titles → build the canonical key set

- Build rename map that maps aliases → manifest keys (not free-form strings)

When the verification output.tsv is available:

- Process output.tsv:

- normalise raw resource

- apply rename map → must resolve to a manifest key

- if it doesn’t resolve, it is out of domain

- Aggregate strictly by manifest key

- Project

Anything else is non-compliant.
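The order of operations can be sketched as follows. This is a minimal illustration with hypothetical names: `observations` stands in for rows of the verification output as (raw resource, hits) pairs, `aliases` maps former names to current manifest titles, and out-of-domain resources are counted separately rather than dropped silently (an assumption made for visibility).

```python
import re
from collections import Counter
from urllib.parse import unquote


def normalise(raw):
    """URL-decode, map underscores and Unicode dashes, collapse whitespace."""
    title = unquote(raw).replace("_", " ")
    title = re.sub("[\u2013\u2014]", "-", title)
    return re.sub(r"\s+", " ", title).strip()


def aggregate_by_manifest_key(manifest_titles, aliases, observations):
    """Return (totals, out_of_domain), both keyed by normalised title."""
    # Steps 1-2: normalise manifest titles to build the canonical key set.
    keys = {normalise(t) for t in manifest_titles}

    # Step 3: the rename map must resolve to manifest keys, not free-form strings.
    rename = {}
    for alias, target in aliases.items():
        target_key = normalise(target)
        if target_key not in keys:
            raise ValueError(f"alias target not in manifest: {target!r}")
        rename[normalise(alias)] = target_key

    totals = Counter({key: 0 for key in keys})  # zero-hit titles stay in the domain
    out_of_domain = Counter()
    for raw, hits in observations:
        key = normalise(raw)
        key = rename.get(key, key)
        if key in keys:
            totals[key] += hits
        else:
            out_of_domain[key] += hits
    return totals, out_of_domain
```

Validating alias targets against the key set up front means a typo in the rename map fails loudly instead of creating a new, unlisted title.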

Accumulated human_get time series (projection)

Eligible Resource Set (Corpus Titles)

- The eligible title set MUST be derived exclusively from corpus/manifest.tsv.

- Column 1 of manifest.tsv is the authoritative MediaWiki page title.

- Only titles present in the manifest (after normalisation) are eligible for projection.

- Titles present in the manifest MUST be included in the projection domain even if they receive zero hits in the period.

- Titles not present in the manifest MUST be excluded even if traffic exists.

Noise and Infrastructure Exclusions

- The following MUST be excluded prior to aggregation:

- Special:, Category:, Category talk:, Talk:, User:, User talk:, File:, Template:, Help:, MediaWiki:

- /resources/, /pub-dir/load.php, /pub-dir/api.php, /pub-dir/rest.php

- /robots.txt, /favicon.ico

- sitemap (any case)

- Static resources by extension (.png, .jpg, .jpeg, .gif, .svg, .ico, .webp)

Temporal Aggregation

- Hourly buckets MUST be aggregated into daily totals per title.

- Accumulated value per title is defined as:

- cum_hits(title, day_n) = Σ daily_hits(title, day_0 … day_n)

- Accumulation MUST be monotonic and non-decreasing.
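A minimal sketch of the hourly-to-daily fold and the running sum, for a single title. The function name is hypothetical, and the input is assumed to be (timezone-aware bucket start, hits) pairs; days with no buckets are filled with zero so the series covers the full range.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone
from itertools import accumulate


def cumulative_daily_hits(hourly):
    """Fold hourly (bucket_start, hits) pairs into [(day, cum_hits), ...] for one title."""
    daily = defaultdict(int)
    for bucket_start, hits in hourly:
        if hits < 0:
            raise ValueError("negative hit count would break monotonic accumulation")
        daily[bucket_start.astimezone(timezone.utc).date()] += hits
    if not daily:
        return []
    first, last = min(daily), max(daily)
    days = [first + timedelta(days=i) for i in range((last - first).days + 1)]
    running = accumulate(daily.get(day, 0) for day in days)  # cum_hits(day_n)
    return list(zip(days, running))


utc = timezone.utc
hourly = [
    (datetime(2026, 1, 1, 0, tzinfo=utc), 2),
    (datetime(2026, 1, 1, 5, tzinfo=utc), 1),
    (datetime(2026, 1, 3, 12, tzinfo=utc), 4),
]
series = cumulative_daily_hits(hourly)
```

Since every daily total is non-negative, the running sum cannot decrease, which satisfies the monotonicity invariant by construction.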

Axis and Scale Invariants

- X axis: calendar date from earliest to latest available day.

- Major ticks every 7 days.

- Minor ticks every day.

- Date labels MUST be rotated (oblique) for readability.

- Y axis MUST be logarithmic.

- Zero or negative values MUST NOT be plotted on the log axis.

Legend Ordering

- Legend entries MUST be ordered by descending final accumulated human_get_ok.

- Ordering MUST be deterministic and reproducible.
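One way to make the ordering reproducible is to sort on the final accumulated count and break ties on the title. The tie-break rule is an assumption (the invariants do not specify one), and the function name is hypothetical.

```python
def legend_order(final_totals):
    """Order titles by descending final accumulated human_get_ok, ties broken by title."""
    return sorted(final_totals, key=lambda title: (-final_totals[title], title))
```

Without an explicit tie-break, two titles with equal counts could swap places between runs depending on input order.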

Visual Disambiguation Invariants

- Each title MUST be visually distinguishable.

- The same colour MAY be reused.

- The same line style MAY be reused.

- The same (colour + line style) pair MUST NOT be reused.

- Markers MAY be omitted or reused but MUST NOT be relied upon as the sole distinguishing feature.
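The uniqueness rule for (colour + line style) pairs can be sketched as below. This is an illustration under assumptions: colours are represented as indices 0-19 into a 20-colour palette, the four line styles are the common Matplotlib style strings, and `reserved` stands in for any fixed assignments that must be kept; all names are hypothetical.

```python
LINE_STYLES = ["-", "--", "-.", ":"]
N_COLOURS = 20  # size of a 20-colour palette


def assign_styles(titles, reserved=None):
    """Give every title a unique (colour_index, line_style) pair.

    reserved: optional {title: (colour_index, line_style)} of fixed assignments.
    """
    styles = dict(reserved or {})
    used = set(styles.values())
    # Cycle colours first so the earliest titles differ by colour, not just style.
    free = (
        (colour, style)
        for style in LINE_STYLES
        for colour in range(N_COLOURS)
        if (colour, style) not in used
    )
    for title in titles:
        if title in styles:
            continue
        try:
            styles[title] = next(free)
        except StopIteration:
            raise ValueError("more titles than unique (colour, line style) pairs") from None
    return styles
```

With 20 colours and 4 line styles there are 80 distinct pairs; beyond that the sketch fails explicitly rather than reusing a pair.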

Rendering Constraints

- Legend MUST be placed outside the plot area on the right.

- Sufficient vertical and horizontal space MUST be reserved to avoid label overlap.

- Line width SHOULD be consistent across series to avoid implied importance.

Interpretive Constraint

- This projection indicates reader entry and navigation behaviour only.

- High lead-in ranking MUST NOT be interpreted as quality, authority, or endorsement.

- Ordering reflects accumulated human access, not epistemic priority.

Periodic Regeneration

- This projection is intended to be regenerated periodically.

- Cross-run comparisons MUST preserve all invariants to allow valid temporal comparison.

- Changes in lead-in dominance (e.g. Plain-Language Summary vs. CM-1 foundation paper) are observational signals only and do not alter corpus structure.

Metric Definition

- The only signal used is human_get_ok.

- Non-human classifications MUST NOT be included.

- No inference from other status codes or agents is permitted.

Corpus Lead-In Projection: Deterministic Colour Map

This table provides the visual encoding for the core corpus pages. For titles not included in the colour map, use colours at your discretion until a Colour Map entry exists.

Colours are drawn from the Matplotlib tab20 palette.

Line styles are assigned to ensure that no (colour + line-style) pair is reused. Legend ordering is governed separately by accumulated human GET_ok.

Corpus Lead-In Projection: Colour-Map Hardening Invariants

This section hardens the visual determinism of the Corpus Lead-In Projection while allowing controlled corpus growth.

Authority

- This Colour Map is authoritative for all listed corpus pages.

- The assisting system MUST NOT invent, alter, or substitute colours or line styles for listed pages.

- Visual encoding is a governed property, not a presentation choice.

Page Labels

- Page labels must be normalised and then mapped to human-readable titles.

- Titles must not be duplicated.

- Some titles are mapped because of a rename.

- Do not show the prior name; always show the later name in the projection, e.g. Corpus guide -> Corpus Map.

Binding Rule

- For any page listed in the Deterministic Colour Map table:

- The assigned (colour index, colour hex, line style) pair MUST be used exactly.

- Deviation constitutes a projection violation.

Legend Ordering Separation

- Colour assignment and legend ordering are orthogonal.

- Legend ordering MUST continue to follow the accumulated human GET_ok invariant.

- Colour assignment MUST NOT be influenced by hit counts, rank, or ordering.

New Page Admission Rule

- Pages not present in the current Colour Map MUST appear in a projection.

- New pages MUST be assigned styles in strict sequence order:

- Iterate line style first, then colour index, exactly as defined in the base palette.

- Previously assigned pairs MUST NOT be reused.

- The assisting system MUST NOT reshuffle existing assignments to “make space”.
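
A minimal sketch of the admission rule, under stated assumptions: the line-style set `LINE_STYLES` is hypothetical (the governed Colour Map table is authoritative), the 20 colour slots correspond to the tab20 palette, and "line style first, then colour index" is read here as line style being the outer loop. The names `admit_new_pages` and `PaletteExhausted` are illustrative.

```python
from itertools import product

LINE_STYLES = ["-", "--", "-.", ":"]  # assumed style set, not the governed table
COLOUR_INDICES = range(20)            # tab20 provides 20 colour slots


class PaletteExhausted(RuntimeError):
    """Raised instead of silently reusing a (colour, line-style) pair."""


def admit_new_pages(colour_map, new_titles):
    """Return provisional {title: (colour_index, line_style)}; existing rows are untouched."""
    used = set(colour_map.values())
    # Assumed iteration order: line style is the outer loop, colour index the inner.
    candidates = (
        (colour, style)
        for style, colour in product(LINE_STYLES, COLOUR_INDICES)
        if (colour, style) not in used
    )
    provisional = {}
    for title in new_titles:
        if title in colour_map or title in provisional:
            continue  # already encoded; never reassigned
        pair = next(candidates, None)
        if pair is None:
            raise PaletteExhausted(f"no unused (colour, line-style) pair for {title!r}")
        provisional[title] = pair
    return provisional
```

The returned assignments are provisional only; the existing map is never mutated, and an exhausted palette raises rather than reusing a pair.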

Provisional Encoding Rule

- Visual assignments for newly admitted pages are **provisional** until recorded.

- A projection that introduces provisional encodings MUST:

- Emit a warning note in the run metadata, and

- Produce an updated Colour Map table for curator review.

Curator Ratification

- Only the human curator may ratify new colour assignments.

- Ratification occurs by appending new rows to the Colour Map table with a date stamp.

- Once ratified, assignments become binding for all future projections.

Backward Compatibility

- Previously generated projections remain valid historical artefacts.

- Introduction of new pages MUST NOT retroactively alter the appearance of older projections.

Failure Mode Detection

- If a projection requires more unique (colour, line-style) pairs than the declared palette provides:

- The assisting system MUST fail explicitly.

- Silent reuse, substitution, or visual approximation is prohibited.

Rationale (Non-Normative)

- This hardening ensures:

- Cross-run visual comparability

- Human recognition of lead-in stability

- Detectable drift when corpus structure changes

- Visual determinism is treated as part of epistemic governance, not aesthetics.

daily hits scatter (projections)

purpose:

- Produce deterministic, human-meaningful MediaWiki page-level analytics from nginx bucket TSVs, folding all URL variants to canonical resources and rendering a scatter projection across agents, HTTP methods, and outcomes.

Authority

- These invariants are normative.

- The assisting system MUST follow them exactly.

- Visual encoding is a governed semantic property, not a presentation choice.

Inputs

- Bucket TSVs produced by page_hits_bucketfarm_methods.pl

- Required columns:

- server_name

- path (or page_category)

- <agent>_<METHOD>_<outcome> numeric bins

- Other columns MAY exist and MUST be ignored unless explicitly referenced.

Scope

The scope depends on the curator's target. When the curator is dealing with a corpus:

- Projection MUST bind to exactly ONE nginx virtual host at a time.

- Example: publications.arising.com.au

- Cross-vhost aggregation is prohibited.

Otherwise, the curator will want all vhosts included.

Namespace Exclusions (Mandatory)

Exclude titles with case-insensitive prefix:

- Special:

- Category:

- Category talk:

- Talk:

- User:

- User talk:

- File:

- Template:

- Help:

- MediaWiki:

- Obvious misspellings (e.g. Catgeory:) SHOULD be excluded.

Bad Bot Hits

- Bad bot hits MUST be included for any canonical page resource that survives normalisation and exclusion.

- Bad bot traffic MUST NOT be excluded solely by agent class; it MAY only be excluded if the request is excluded by namespace or infrastructure rules.

- badbot_308 SHALL be treated as badbot_GET_redir for scatter projections.

- Human success ordering (HUMAN_200_304) remains the sole ordering metric; inclusion of badbot hits MUST NOT affect ranking.

metadata hits invariants

- Within the rollup files, metadata hits are signified by the pattern:

- (/<dir>-meta/<type>/<rem-path>) where <type> is one of:

- version

- diff

- docid

- info

- Within output.tsv (the page_list_verify output), accumulated metadata hits are signified by:

- .$type/<path> e.g.

- .version/<path>

- .diff/<path>

- .docid/<path>

- .info/<path>

Projection gating invariant:

- When processing accumulation bins for any projection, NO counts from metadata-identified rows SHALL contribute to any human_* bins.

- Metadata-identified rows MAY contribute only to: ai_, bot_, curlwget_, badbot_ when agent-attributed, or to metadata_* bins when unattributed.

Aggregation Invariant (Mandatory)

- Aggregate across ALL rollup buckets in the selected time span.

- GROUP BY canonical resource.

- SUM all numeric <agent>_<METHOD>_<outcome> bins.

- Each canonical resource MUST appear exactly once.

Metadata Invariants (Mandatory)

- metadata access SHALL be identified by the paths: /<dir>-meta/<type>/<rem-path>

- Metadata access SHALL be attributed to the agent class when the agent string identifies it as bot, ai, curlwget, or badbot; otherwise it SHALL be attributed to the 'metadata' category bins.

- metadata SHALL be:

- GROUP BY canonical resource,

- SUM by numeric <agent>_<METHOD>_<outcome> bins,

- and each canonical resource MUST appear exactly once.

Human Success Spine (Mandatory)

- Define ordering metric:

- HUMAN_200_304 := human_GET_ok + human_GET_redir

- This metric is used ONLY for vertical ordering.

Ranking and Selection

- Sort resources by HUMAN_200_304 descending.

- Select Top-N resources (default N = 50).

- Any non-default N MUST be declared in run metadata.
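
The ordering metric and Top-N selection can be sketched as below. The input shape `{canonical_resource: {bin_name: count}}` and the function names are assumptions. The secondary sort on the resource name is also an assumption, added only so that ties resolve deterministically.

```python
def human_200_304(bins):
    """HUMAN_200_304 := human_GET_ok + human_GET_redir (missing bins count as zero)."""
    return bins.get("human_GET_ok", 0) + bins.get("human_GET_redir", 0)


def top_n(resources, n=50):
    """Rank resources by HUMAN_200_304 descending and keep the first n."""
    ranked = sorted(
        resources.items(),
        key=lambda item: (-human_200_304(item[1]), item[0]),  # name breaks ties (assumed)
    )
    return [name for name, _ in ranked[:n]]


resources = {
    "Corpus Map": {"human_GET_ok": 40, "human_GET_redir": 2, "badbot_GET_redir": 900},
    "Main Page": {"human_GET_ok": 55},
    "Quiet Page": {"bot_GET_ok": 300},
}
order = top_n(resources, n=2)
```

Bad bot and bot counts stay in the data for plotting but play no part in the ranking.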

Rendering Invariants (Scatter Plot)

Axes

- X axis MUST be logarithmic.

- X axis MUST include log-paper verticals:

- Major: 10^k (colour #B0C4DE, Light Steel Blue)

- Minor: 2..9 × 10^k

- Y axis lists canonical resources ordered by HUMAN_200_304.

Baseline Alignment

- The resource label baseline MUST align with human GET_ok.

- human_GET_ok points MUST NOT have vertical offset from baseline.

- Draw faint horizontal baseline guides/graticules for each resource row all the way to the tick marks for the category label.

- Graticules must be rendered before the plot symbols so that the symbols overlay any crossing lines.

Category Plotting

- ALL agent/method/outcome bins present MUST be plotted.

- No category elision, suppression, or collapsing is permitted.

Plot symbol anti-clutter Separation

- Anti-clutter SHALL be performed in projected pixel space after log(x) mapping.

Definitions

- Let P0 be the base projected pixel coordinate of a bin at (x=count, y=row_baseline).

- Let r_px be the plot symbol radius in pixels derived from the renderer and symbol size.

- Let d_px := 0.75 × r_px be the base anti-clutter step magnitude.

- Let overlap_allow_px := 0.75 × r_px be the permitted overlap allowance.

- Let min_sep_px := 2×r_px − overlap_allow_px be the minimum permitted center-to-center distance between any two symbols.

Ray Set

- Candidate displacements SHALL lie only on fixed radials.

- The compliant ray set MUST be fixed and deterministic relative to up=0°, left=270°, right=090°, down=180° (just like compass headings in sailing and aviation):

- Θ := {60°, 120°, 250°, 300°}

- No alternative ray set is permitted.

- Ray iteration order MUST begin at 60° and proceed clockwise in ascending compass heading order over the set of Θ.

Row Band Constraint

- Any displaced symbol MUST remain within the resource row band:

- |y_px(candidate) − y_px(row_baseline)| ≤ row_band_px

- row_band_px MUST be derived deterministically from the row spacing (e.g. 0.45 row units mapped to pixels).

Placement Algorithm

For each resource row:

- Establish human_GET_ok as the anchor at P0 (k=0).

- For each remaining bin in deterministic bin-order, attempt placement:

- First try P0 (no displacement).

- If placement at P0 violates min_sep_px with any already placed symbol:

- Attempt placements on radials in ring steps.

- Ring placements are defined as:

- P(k, θ) = P0 + k × d_px × u(θ) for integer k ≥ 1 and θ ∈ Θ.

- For observed small collision counts (≤5), the maximum ring index SHOULD be limited:

- k_max_default := 1 (i.e. max radial distance ≈ 0.75×r).

- If collisions exceed the default bound:

- k_max MAY increase deterministically (e.g. k_max = 2,3,...) until a non-overwriting placement is found.
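
A minimal sketch of the ring placement search described above, without chain expansion. It assumes a coordinate frame in which y grows upward (a renderer with y growing downward would negate `dy`), and the names `unit_vector` and `place` are illustrative. The ray set and the 0.75 factors are taken from the definitions above.

```python
import math

THETA_DEG = (60, 120, 250, 300)  # ray set as declared, in compass headings


def unit_vector(heading_deg):
    """Compass heading to (dx, dy): up = 0 deg, right = 90 deg, y grows upward (assumed)."""
    rad = math.radians(heading_deg)
    return (math.sin(rad), math.cos(rad))


def place(p0, placed, r_px, row_band_px, k_max=1):
    """Return the first compliant position for a symbol based at p0, or None."""
    d_px = 0.75 * r_px                   # base anti-clutter step
    min_sep_px = 2 * r_px - 0.75 * r_px  # 2r minus the overlap allowance

    def compliant(candidate):
        in_band = abs(candidate[1] - p0[1]) <= row_band_px
        clear = all(math.dist(candidate, other) >= min_sep_px for other in placed)
        return in_band and clear

    if compliant(p0):
        return p0
    for k in range(1, k_max + 1):        # ring by ring
        for theta in THETA_DEG:          # fixed ray order within a ring
            ux, uy = unit_vector(theta)
            candidate = (p0[0] + k * d_px * ux, p0[1] + k * d_px * uy)
            if compliant(candidate):
                return candidate
    return None
```

Under the declared parameters a ring-1 candidate sits 0.75 × r_px from P0, which is less than min_sep_px = 1.25 × r_px, so a collision with a symbol centred exactly at P0 is only cleared from ring 2 onward in this sketch.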

Chain Expansion (Permissible Escalation)

If no placement is possible within k_max_default, the projector MAY perform chain expansion:

- The next candidate positions MAY be generated relative to an already placed symbol Pi:

- P_chain = Pi + d_px × u(θ) for θ ∈ Θ.

- Chain expansion MUST be deterministic:

- Always select the earliest-placed conflicting symbol as the chain origin.

- Always iterate Θ in fixed order.

- Always bound the search by a deterministic maximum number of attempts.

Anti-clutter displacement quantisation (Normative)

- Baseline definition:

- For each (title/category) row, the baseline y coordinate is the row’s canonical y index.

- Any anti-clutter displacement SHALL NOT be rendered on the baseline.

- Any symbol not rendered exactly on the baseline MUST represent an anti-clutter displacement.

- Quantisation:

- Let r be the radius of the HUMAN GET circle symbol in rendered pixel space.

- All anti-clutter displacements MUST be quantised to the nearest 0.75 × r radial increment in rendered pixel space, radially away from the plot position of a symbol they clashed with.

- Baseline symbols MUST have y = y0 exactly (no fractional drift).

- Displacement selection:

- Candidate displacements MUST be evaluated in pixel space after x-scale transform (log-x) for overlap testing.

- Once a candidate displacement is selected, the displacement vector magnitude MUST be snapped to the nearest 0.75 × r radial increment (pixel space).

- If snapping would violate the row-band constraint or cause a collision, the renderer MUST advance to the next candidate (next ray / next ring) rather than emit an unsnapped position.

- Legibility invariant:

- A viewer MUST be able to infer “anti-clutter occurred” solely from the quantised y offset (i.e. any non-zero multiple of 0.75 × r relative to the baseline).

Ring Saturation and Radial Progression (Normative)

- Ring definition:

- Let r be the HUMAN GET circle symbol radius in rendered pixel space.

- Let Δ = 0.75 × r.

- Ring k is defined as the locus of candidate positions at radial distance k × Δ from the baseline symbol position in pixel space.

- Clustering rule:

- For a given baseline symbol, anti-clutter displacement candidates SHALL be evaluated ring-by-ring.

- All angular candidates in ring k MUST be evaluated before any candidate in ring k+1 is considered.

- The renderer SHALL NOT progress to ring k+1 unless all angular positions in ring k are either:

- collision-invalid, or

- row-band invalid.

- Angular order:

- Angular candidates within a ring MUST be evaluated in a deterministic, fixed ordering.

- The angular ordering SHALL remain invariant across renders.

- Minimal radial expansion invariant:

- The renderer MUST produce the minimal radial displacement solution that satisfies collision and row-band constraints.

- No symbol may be placed at ring k+1 if a valid position exists in ring k.

- Legibility invariant:

- Increasing radial distance MUST correspond strictly to increased clutter pressure.

- A viewer MUST be able to infer that symbols closer to the baseline experienced fewer collision constraints than symbols at outer rings.

Bin Ordering for Determinism

- Within each resource row, bins MUST be processed in a deterministic fixed order prior to anti-clutter placement.

- A compliant ordering is lexicographic over:

- (count, agent_order, method_order, outcome_order)

- The order tables MUST be fixed and normative:

- agent_order := human, metadata, ai, bot, curlwget, badbot

- method_order := GET, POST, PUT, HEAD, OTHER

- outcome_order := ok, redir, client_err, server_err, other/unknown
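
The compliant ordering can be expressed as a sort key. This sketch assumes bin names of the form `<agent>_<METHOD>_<outcome>`, ascending count order, and that `unknown` shares the rank of `other`; the names `bin_sort_key` and the rank tables are illustrative.

```python
AGENT_ORDER = ["human", "metadata", "ai", "bot", "curlwget", "badbot"]
METHOD_ORDER = ["GET", "POST", "PUT", "HEAD", "OTHER"]

AGENT_RANK = {name: i for i, name in enumerate(AGENT_ORDER)}
METHOD_RANK = {name: i for i, name in enumerate(METHOD_ORDER)}
# "other/unknown" is treated as one rank (assumption).
OUTCOME_RANK = {"ok": 0, "redir": 1, "client_err": 2, "server_err": 3, "other": 4, "unknown": 4}


def bin_sort_key(item):
    """Key for (bin_name, count) pairs: (count, agent, method, outcome)."""
    name, count = item
    agent, method, outcome = name.split("_", 2)  # outcome may itself contain "_"
    return (count, AGENT_RANK[agent], METHOD_RANK[method], OUTCOME_RANK[outcome])


bins = {"bot_GET_ok": 5, "human_GET_ok": 5, "ai_HEAD_redir": 2, "badbot_GET_client_err": 5}
ordered = [name for name, _ in sorted(bins.items(), key=bin_sort_key)]
```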

Layering and Z-Order

- Graticules MUST be rendered before plot symbols such that symbols overlay crossing lines.

- Plot symbols MUST use deterministic z-ordering:

- grid/graticules < baselines < non-anchor symbols < overlays < anchor symbols

- human_GET_ok SHALL be rendered as the final layer in its row (highest z-order) when supported by the graphing system.

Two-Panel Label Layout

- The projection MAY render category labels in a dedicated label panel (left axis) separate from the plotting panel.

- Label width MUST be computed deterministically from measured rendered text extents.

- Labels MUST NOT be truncated, abbreviated, or clipped.

- Baseline guides MUST be continuous across label and plot panels:

- A faint baseline guide SHALL be drawn in both panels at the same row baseline y.

Run Metadata Declaration

- The output MUST declare:

- N (number of resources plotted) and whether it is default Top-N or non-default (ALL).

- Anti-clutter parameters: r_px, d_px, overlap_allow_px, min_sep_px, Θ, k_max_default, and whether chain expansion was invoked.

Collision Test

A placement is compliant iff for every prior placed symbol center Cj:

- distance(Ccandidate, Cj) ≥ min_sep_px

Redirect Jitter

- human_GET_redir MUST NOT receive any fixed vertical offset.

- human_GET_redir MUST be plotted at the same baseline y as human_GET_ok prior to anti-clutter resolution.

- Any visual separation between human_GET_redir and other bins MUST be produced only by the Plot symbol anti-clutter Separation rule.

- Random jitter is prohibited.

Encoding Invariants

Agent Encoding

- Agent encoded by colour.

- badbot MUST be red.

Method Encoding

- GET → o

- POST → ^

- PUT → v

- HEAD → D

- OTHER → .

Outcome Overlay

- ok → no overlay

- redir → diagonal slash (/)

- client_err → x

- server_err → x

- other/unknown → +

Legend Invariants

- Legend MUST be present.

- Legend title MUST be exactly: Legend

- Legend MUST explain:

- Agent colours

- Method shapes

- Outcome overlays

- Legend MUST NOT overlap resource labels.

- The legend MUST be titled 'Legend' only.

Legend Presence (Mandatory)

- A legend MUST be rendered on every scatter plot output.

- The legend title MUST be exactly: Legend

- A projection without a legend is non-compliant.

Legend Content (Mandatory; Faithful to Encoding Invariants)

The legend MUST include three components:

- Agent/metadata key (colour):

- human (MUST be #0096FF - Blue )

- metadata (MUST be #FFA500 - Orange)

- ai (MUST be #008000 - Green)

- bot (MUST be #FF69B4 - Hot Pink )

- curlwget (MUST be #800080 - Purple )

- badbot (MUST be #FF0000 - Red )

- Method key (base shapes):

- GET → o

- POST → ^

- PUT → v

- HEAD → D

- OTHER → .

- Outcome overlay key:

- x = error (client_err or server_err)

- / = redir

- + = other (other or unknown)

- none = ok

Legend Placement (Mandatory)

- The legend MUST be placed INSIDE the plotting area.

- The legend location MUST be bottom-right (axis-anchored):

- loc = lower right

- The legend MUST NOT be placed outside the plot area (no RHS external legend).

- The legend MUST NOT overlap the y-axis labels (resource labels).

- The legend MUST be fully visible and non-clipped in the output image.

Legend Rendering Constraints (Mandatory)

- The legend MUST use a frame (boxed) to preserve readability over gridlines/points.

- The legend frame SHOULD use partial opacity to avoid obscuring data:

- frame alpha SHOULD be approximately 0.85 (fixed, deterministic).

- The metadata category is for metadata access not attributed to ai, bot, curlwget, or badbot (the assumption is human, since these hits are acquired from the human counts).

- Thus legend ordering MUST be deterministic (fixed order):

- Agents: human, metadata, ai, bot, curlwget, badbot

- Methods: GET, POST, PUT, HEAD, OTHER

- Outcomes: x=error, /=redir, +=other, none=ok

Validation

A compliant scatter output SHALL satisfy:

- Legend is present.

- Legend title equals "Legend".

- Legend is inside plot bottom-right.

- Legend is non-clipped.

- Legend contains agent, method, and outcome keys as specified.

Determinism

- No random jitter.

- No data-dependent styling.

- Identical inputs MUST produce identical outputs.

Validation Requirements

- No duplicate logical pages after canonical folding.

- HUMAN_200_304 ordering is monotonic.

- All plotted points trace back to bucket TSV bins.

- /<x> and /<x-dir> variants MUST fold to the same canonical resource.

Appendix B - logrollup

MWDUMP: Appendix B — Rollup Program Normatives Scope: logrollup (nginx access → analytic rollups)

B-1. Canonical Scope

- The rollup program SHALL operate exclusively on nginx access logs and SHALL NOT infer or synthesise events not present in the source logs.

B-2. Server Name as First-Class Key

- server_name SHALL be preserved as a first-class dimension.

- Rollups MUST NOT merge events across virtual hosts.

- Aggregation keys SHALL include (bucket_start, server_name, canonical_path).

B-3. Time Bucketing

- Time bucketing SHALL be purely mathematical:

- bucket_start = floor(epoch / period_seconds) * period_seconds

- No semantic interpretation of time windows is permitted.
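
The bucketing formula in B-3 amounts to a floor division. A small sketch, assuming epoch seconds as input and an hourly period by default; the function name `bucket_start` mirrors the formula but is otherwise illustrative.

```python
from datetime import datetime, timezone


def bucket_start(epoch_seconds, period_seconds=3600):
    """floor(epoch / period_seconds) * period_seconds, as an integer epoch."""
    return int(epoch_seconds // period_seconds) * period_seconds


ts = datetime(2026, 1, 30, 1, 55, tzinfo=timezone.utc).timestamp()
start = datetime.fromtimestamp(bucket_start(ts), tz=timezone.utc)  # 2026-01-30T01:00Z
```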

B-4. Path Canonicalisation

- All request paths SHALL be normalised into a canonical_path before aggregation.

- Canonicalisation MUST be deterministic and reversible to a class of raw requests.

B-5. Page vs Meta Access Separation (MANDATORY)

Access SHALL be classified into exactly two disjoint classes:

- a) Page access — canonical content reads

- b) Meta access — non-content inspection

Meta access SHALL include (but is not limited to):

- diff

- history

- oldid / versioned access

- docid-based access

- other non-canonical inspection endpoints

Meta access MUST NOT be merged into page access under any circumstance.

B-6. Meta Path Encoding

Meta access SHALL be encoded into canonical_path using a dedicated namespace:

- <root>-meta/<type>/<title>

Where:

- <root> is derived from the actual request root

- <type> ∈ {diff, history, version, docid, …}

- <title> is the canonicalised page title

This encoding SHALL occur BEFORE query stripping.

B-7. Actor Classification

Actor class SHALL be derived as follows:

- badbot: HTTP status == 308 (definitive)

- ai: matched AI bot patterns from bots.conf

- bot: matched non-AI bot patterns from bots.conf

- human: default when no other class applies

User-Agent strings SHALL NOT be treated as authoritative evidence of humanity.
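
The precedence in B-7 can be sketched as below. The two pattern lists are placeholders standing in for whatever bots.conf actually declares; they are assumptions for illustration, as is the function name `classify_actor`.

```python
import re

# Placeholder patterns; the real lists come from bots.conf (not shown in this document).
AI_PATTERNS = [re.compile(r"GPTBot", re.IGNORECASE)]
BOT_PATTERNS = [re.compile(r"Googlebot", re.IGNORECASE)]


def classify_actor(status, user_agent):
    """Apply B-7 in order: badbot (308), ai, bot, then human by default."""
    if status == 308:
        return "badbot"  # definitive, regardless of the User-Agent string
    if any(p.search(user_agent) for p in AI_PATTERNS):
        return "ai"
    if any(p.search(user_agent) for p in BOT_PATTERNS):
        return "bot"
    return "human"       # default class, not proof of humanity
```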

B-8. Method Taxonomy

HTTP methods SHALL be mapped to the fixed set:

- GET, HEAD, POST, PUT, OTHER

This taxonomy SHALL apply uniformly across all actor classes.

B-9. Metric Orthogonality

Metrics SHALL remain orthogonal:

- actor class

- access mode (page vs meta)

- HTTP method

- HTTP outcome

No metric may be inferred from another.

B-10. Page-Only Readership Integrity

- Page-only human_get_ok SHALL be treated as the sole valid proxy for human readership.

- Meta access SHALL NOT contribute to readership metrics.

B-11. Auditability

When verbose mode is enabled, the program SHALL emit:

- all accepted bot and AI patterns

- the effective aggregation keys

This is required to allow post-hoc forensic verification.

- BEGIN_MWDUMP

- title: Appendix B′ — Lead/Lag Projection Invariants (Rollups → Publication Lifecycle Graph)

- scope: Normatives sufficient to instruct a model (or program) to derive the lead/lag graph from rollups.

- author: Ralph (RBH) + model-assisted formalisation

- status: PROPOSED (ready to include as Appendix text)

- BEGIN_MWDUMP

- title: Appendix B′ — Lifecycle Graphlets Projection Invariants (Rollups → Access Lifecycle Envelopes)

- scope: Normatives sufficient to instruct a model (or program) to derive the access lifecycle “graphlets” projection from rollups.

- author: Ralph B. Holland (RBH) + model-assisted formalisation

- status: PROPOSED (candidate replacement for prior Appendix B′ text)

- notes:

- - This appendix governs the “life cycle graphlets” projection (per-title filled envelopes around a baseline).

- - It is NOT the lead/lag bar projection. It supersedes the older “Segment A/B” formulation for this artefact.

- - All time handling is UTC/Zulu only.

Appendix B-1: Access Lifecycle Graphlets Projection Invariants

Purpose

Define a deterministic projection from hourly rollup TSV buckets into a publication-centred lifecycle “graphlets” image:

- X-axis: integer days relative to publication (UTC / Zulu)

- Y-axis: corpus titles ordered by publication datetime ascending (earliest first), rendered top-to-bottom in that order

- For each title row:

- Human page readership signal rendered as a filled envelope above the baseline (blue)

- Meta inspection signal rendered as a filled envelope below the baseline (orange, inverted)

- Labels include: "<title> (<publication_datetime_utc>) (h:<human_total>, m:<meta_total>)"

This projection is intended to show qualitative lifecycle shape per title (lead-in, peak, decay) and comparative ordering by publication date. Magnitude comparison across titles MAY be shown, but MUST obey the scaling invariants declared herein.

Authority and Governance

- These invariants are normative.

- The assisting system MUST follow them exactly.

- The projection MUST be reproducible from declared inputs alone.

- The assisting system MUST NOT infer publication dates, title membership, or additional titles beyond the manifest.

- Visual encoding is a governed semantic property; “pretty” optimisations that change semantics are prohibited.

Authoritative Inputs

- Input A: ROLLUPS — a directory or tar archive of hourly TSV bucket files produced by logrollup (Appendix B).

- Input B: MANIFEST — corpus/manifest.tsv from corpus bundle (corpus.tgz), containing at minimum:

- Title (authoritative MediaWiki title)

- Publication datetime in UTC (Zulu) for published titles

- Input C: Projection parameters (explicitly declared in run metadata):

- Publication root path (default "/pub")

- Meta root encoding (default "/pub-meta" or "<root>-meta" family; see Appendix B)

- Human amplification factor (H_AMP) for display (default 1; may be >1 for diagnostic visibility)

- Lead-window minimum (LEAD_MIN_DAYS) (default 10 days)

- MA window size (MA_N_DAYS) (default 7)

- ROW_MAX (maximum envelope half-height in row units; default 0.42)

- MARGIN (row separation safety margin in row units; default 0.08)

- X major tick step (days) default 10

- X minor tick step (days) default 5

Output Artefact

A single image containing:

- A set of Y-axis rows, one per eligible manifest title, ordered by publication datetime ascending (earliest at top).

- For each row, a baseline line (category baseline graticule) at y=0 for that title row.

- Two filled envelopes per title row:

- Human page GET_ok signal above baseline (blue, filled)

- Meta GET-total signal below baseline (orange, filled, inverted)

- A vertical reference line at x=0 representing the publication datetime.

- A legend describing the two envelopes and the human amplification factor if H_AMP != 1.

- An X-axis labelled in integer days relative to publication (UTC / Zulu), with day graticules (major + minor).

LG-0: Projection Semantics

This appendix is governed by the General Graph Projection Invariants:

- GP-0: Mathematical Direction Semantics

- GP-0a: Tooling Override Prohibition, and:

- LG-0a: Bell Envelope extensions:

- polarity (human ALWAYS Y+, meta ALWAYS Y−)

- Colour assignment (blue = human, orange = meta; no palette substitution)

No local redefinition or relaxation is permitted.

Time and Calendar Semantics (UTC / Zulu Only)

LG-1: UTC is authoritative for all temporal values

- Rollup filenames and their bucket_start times SHALL be treated as UTC/Zulu.

- Manifest publication datetimes SHALL be treated as UTC/Zulu.

- No local timezone conversion is permitted.

- Any timezone offsets present in datetimes MUST be normalised to UTC.

LG-2: Bucket start time extraction

- Each rollup TSV represents a single time bucket.

- Bucket start time SHALL be derived from the rollup filename.

- If the filename encodes UTC using a "Z" indicator, that is authoritative.

- The bucket_start timestamp SHALL be stored internally as an absolute UTC datetime.

LG-3: Daily key derivation

- For lifecycle aggregation, each bucket_start SHALL be assigned to a UTC calendar day:

- DAY_UTC(bucket_start) = YYYY-MM-DD in UTC.

- No semantic “server local day” is permitted.

LG-4: Publication reference time

- Publication datetime PUB_DT[T] is read from MANIFEST for title T as UTC.

- Publication day PUB_DAY[T] = DAY_UTC(PUB_DT[T]).

- Relative day arithmetic SHALL be computed against PUB_DT[T] (not against the local day concept).

Title Domain and Eligibility

LG-5: Eligible title set is derived exclusively from MANIFEST

- Only titles present in MANIFEST are eligible for inclusion.

- No heuristic discovery of titles from rollups is permitted.

LG-6: Exclude unpublished / invalid-date titles

- Any manifest title whose publication datetime is invalid, missing, or flagged as an error date SHALL be excluded from this projection.

- No attempt may be made to “infer” publication from traffic patterns.

LG-7: Title identity and normalisation

Titles extracted from rollups SHALL be normalised using the same governed rules as the corpus projection normatives:

- URL percent-decoding MUST occur before other steps.

- "_" MUST be converted to space.

- UTF-8 dashes (–, —) MUST be converted to ASCII hyphen (-).

- Whitespace runs MUST be collapsed to a single space and trimmed.

- After normalisation, the title key MUST match a manifest title key exactly (or resolve via an explicit alias map that maps aliases → manifest keys).

- Fuzzy matching, punctuation stripping, or approximate matching is prohibited.
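
The LG-7 steps can be sketched in the order they are listed. The function names `normalise_title` and `resolve_title` and the shape of the alias map are assumptions for illustration.

```python
import re
from urllib.parse import unquote


def normalise_title(raw):
    """Apply the LG-7 steps in order: decode, underscores, dashes, whitespace."""
    title = unquote(raw)                                           # percent-decoding first
    title = title.replace("_", " ")                                # "_" becomes space
    title = title.replace("\u2013", "-").replace("\u2014", "-")    # en and em dash to hyphen
    return re.sub(r"\s+", " ", title).strip()                      # collapse and trim


def resolve_title(raw, manifest_keys, aliases=None):
    """Return the manifest key for raw, or None; exact match or explicit alias only."""
    key = normalise_title(raw)
    key = (aliases or {}).get(key, key)
    return key if key in manifest_keys else None
```

For example, with an alias map of `{"Corpus guide": "Corpus Map"}`, the raw title `Corpus_guide` resolves to `Corpus Map`, while `corpus map` resolves to nothing because no case folding or fuzzy matching is applied.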

Rollup Parsing and Metric Definitions

LG-8: Rollups are the sole traffic source

- The projection SHALL be computed from rollup TSV buckets only.

- Raw nginx logs MUST NOT be used.

LG-9: Required TSV schema properties

- Each TSV row includes:

- server_name

- path (canonicalised by logrollup)

- numeric bins: <actor>_<method>_<outcome> counts

- Column presence MAY vary, but:

- human_get_ok MUST be supported for the human page readership signal.

- All "*_get_*" columns MUST be discoverable for meta GET aggregation (see LG-12).

LG-10: Page vs Meta path separation (MANDATORY)

- Page access and meta access are disjoint and MUST NOT be merged.

- Page paths are those whose canonical path begins with:

- "<PUB_ROOT>/" (default "/pub/") AND does not include "-meta/" and does not include "/pub-meta/".

- Meta paths are those whose canonical path contains:

- "-meta/" or "/pub-meta/" (or the configured meta namespace produced by logrollup).

- The assisting system MUST NOT treat meta paths as page hits.

LG-11: Human page signal definition

For each rollup row R:

- HUMAN_PAGE_SIGNAL[R] = human_get_ok

- Only rows classified as page paths (LG-10) contribute.

LG-12: Meta signal definition (GET total, all actors)

For each rollup row R classified as meta path (LG-10):

- META_GET_TOTAL[R] = sum of all numeric columns matching "*_get_*" (case-insensitive), excluding non-numeric columns and excluding any already-derived totals.

- Meta aggregation is across all actor classes; actor filtering is prohibited.
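
A minimal sketch of the LG-12 sum over one TSV row held as a dict of column name to string value. How derived totals are named is not stated here, so the caller supplies them through the hypothetical `derived` parameter; the function name `meta_get_total` is likewise illustrative.

```python
def meta_get_total(row, derived=frozenset()):
    """Sum every numeric "*_get_*" column (case-insensitive) across all actors."""
    total = 0
    for column, value in row.items():
        if "_get_" not in column.lower():
            continue                      # not a GET bin
        if column in derived:
            continue                      # skip already-derived totals (names assumed)
        try:
            total += int(value)
        except (TypeError, ValueError):
            continue                      # non-numeric column content is ignored
    return total


row = {
    "server_name": "publications.arising.com.au",
    "path": "/pub-meta/diff/Corpus_Map",
    "human_get_ok": "3",
    "bot_GET_redir": "2",
    "ai_head_ok": "9",
    "all_get_total": "5",
}
total = meta_get_total(row, derived={"all_get_total"})
```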

LG-13: Title extraction from canonical path

- For page paths:

- TITLE_RAW = substring after "<PUB_ROOT>/"

- For meta paths:

- TITLE_RAW = the final path component after the last "/"

- TITLE_RAW MUST then be normalised per LG-7 and resolved to a manifest key.
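
The path classification of LG-10 and the title extraction of LG-13 can be sketched together. The function names are illustrative, and the default root follows the declared default "/pub". Because "/pub-meta/" itself contains "-meta/", a single substring test covers both meta markers.

```python
def classify_path(path, pub_root="/pub"):
    """Return "meta", "page", or None for paths outside both classes."""
    if "-meta/" in path:                  # also matches "/pub-meta/"
        return "meta"
    if path.startswith(pub_root + "/"):
        return "page"
    return None


def title_raw(path, pub_root="/pub"):
    """Extract TITLE_RAW; normalisation per LG-7 is a separate step."""
    kind = classify_path(path, pub_root)
    if kind == "page":
        return path[len(pub_root) + 1:]   # substring after "<PUB_ROOT>/"
    if kind == "meta":
        return path.rsplit("/", 1)[-1]    # final path component
    return None
```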

LG-14: Virtual host handling

- server_name is first-class and MUST be preserved.

- If a server filter is applied, it MUST be explicit in run metadata.

- If no server filter is applied, aggregation across vhosts is permitted only when the curator explicitly supplies rollups that already represent the desired scope.

- The projection MUST NOT silently combine or drop vhosts without explicit configuration.

Aggregation

LG-15: Daily aggregation per title (UTC days)

For each UTC day D and title T:

- HUMAN[D,T] = Σ HUMAN_PAGE_SIGNAL[R] over all rollup rows R with:

- DAY_UTC(bucket_start_of_R) == D

- title_of_R == T

- path_class == page

- META[D,T] = Σ META_GET_TOTAL[R] over all rollup rows R with:

- DAY_UTC(bucket_start_of_R) == D

- title_of_R == T

- path_class == meta

LG-16: Missing-day semantics

- The day domain for each title SHALL be the full UTC day range present in the rollups.

- For any missing day/title combination, HUMAN[D,T] and META[D,T] MUST be treated as zero.

LG-17: Totals for label content

For each title T:

- H_TOTAL[T] = Σ HUMAN[D,T] across all days in the rollup span

- M_TOTAL[T] = Σ META[D,T] across all days in the rollup span

Smoothing

LG-18: Moving average definition (MA_N_DAYS)

- A moving average SHALL be computed over daily values for each title:

- MA_HUMAN[D,T] = MA_N_DAYS moving average of HUMAN[*,T]

- MA_META[D,T] = MA_N_DAYS moving average of META[*,T]

- MA_N_DAYS default is 7.

- The moving average MUST treat missing days as zero (per LG-16).

- The moving average MUST be deterministic and use a fixed window (no adaptive windowing).
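
LG-18 fixes the window size but not its alignment. The sketch below assumes a trailing window (the current day and the preceding MA_N_DAYS − 1 days) and always divides by the full window, so days before the start of the rollup span count as zero in line with LG-16. The function name `moving_average` is illustrative.

```python
def moving_average(daily_values, window=7):
    """Trailing fixed-window mean; days before the series start count as zero."""
    averaged = []
    running = 0
    for i, value in enumerate(daily_values):
        running += value
        if i >= window:
            running -= daily_values[i - window]  # drop the day leaving the window
        averaged.append(running / window)        # always divide by the full window
    return averaged
```

A centred window would shift the envelope earlier by about half the window; whichever alignment is used would need to be held fixed across runs for the projection to stay comparable.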

Relative Day Axis

LG-19: Relative day arithmetic (UTC / Zulu)

For each UTC day D and title T:

- REL_DAY[D,T] = integer day difference between:

- start-of-day UTC datetime for D (YYYY-MM-DDT00:00:00Z)

- publication datetime PUB_DT[T]

- REL_DAY may be negative (lead-in) or positive (post-publication).

LG-20: X-axis windowing

- The X-axis minimum MUST include at least LEAD_MIN_DAYS days of lead-in:

- X_MIN <= -LEAD_MIN_DAYS (default LEAD_MIN_DAYS = 10)

- The X-axis maximum MUST cover the observed tail in the rollups (latest REL_DAY with non-zero signal) plus a small deterministic padding (e.g., +5 days).

- The projection MUST NOT hard-code an arbitrary large negative lead window unless required by observed signal or curator-specified override.

Ordering

LG-21: Row ordering is publication datetime ascending

- Titles MUST be ordered by PUB_DT ascending (earliest first).

- The rendered visual order MUST match human reading order:

- earliest title MUST appear at the top row,

- latest title MUST appear at the bottom row.

- If the plotting library’s axis direction would invert this, the implementation MUST explicitly map indices to preserve top-to-bottom semantic order.

LG-22: Stable tie-breaking

- If two titles share identical PUB_DT, the tie MUST be broken deterministically:

- primary: title lexicographic ascending (Unicode code point order, which is equivalent to UTF-8 byte order)

- No other ordering is permitted.
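
A minimal sketch of LG-21 and LG-22, assuming the manifest has been loaded into a dict mapping title to a timezone-aware publication datetime. The names `order_titles` and `row_y` and the sample titles are hypothetical. Python compares strings by Unicode code point, which gives the required tie-break order.

```python
from datetime import datetime, timezone

def order_titles(pub_dt_by_title):
    """Sort titles by PUB_DT ascending, then by title (code point order)."""
    return sorted(pub_dt_by_title, key=lambda t: (pub_dt_by_title[t], t))

def row_y(index, n_rows):
    """Map sorted index to a y position so that index 0 is the top row.

    Assumption: the plotting library's y axis grows upward, so the
    earliest title needs the largest y value.
    """
    return n_rows - 1 - index

manifest = {
    "Beta": datetime(2026, 1, 5, 9, 0, tzinfo=timezone.utc),
    "alpha": datetime(2026, 1, 5, 9, 0, tzinfo=timezone.utc),
    "Gamma": datetime(2025, 12, 18, 0, 0, tzinfo=timezone.utc),
}
ordered = order_titles(manifest)
top_row_y = row_y(0, len(ordered))
```

Note that code point order places upper-case ASCII letters before lower-case ones, so "Beta" sorts ahead of "alpha" when the publication datetimes are identical.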

Graphlets Rendering Semantics

LG-23: Baseline per title row (category baseline graticule)

- Each title row SHALL have a baseline y=0 line for that row.

- The baseline line MUST be rendered (thin) across the full x-window.

- Human envelope SHALL be rendered above that baseline.

- Meta envelope SHALL be rendered below that baseline (inverted).

LG-24: Envelope encoding (mandatory)

- Human envelope:

- colour = blue

- fill = solid (opaque)

- direction = upward (positive y relative to baseline)

- data = MA_HUMAN[D,T] after applying human amplification (LG-26)

- Meta envelope:

- colour = orange

- fill = solid (opaque)

- direction = downward (negative y relative to baseline)

- data = MA_META[D,T] (no implicit amplification unless explicitly declared)

LG-25: Fill opacity

- Fill opacity MUST be fully opaque (alpha = 1.0).

- Transparent or washed-out fills are prohibited.

LG-26: Human amplification (display-only)

- A human amplification factor H_AMP MAY be applied to MA_HUMAN for display.

- H_AMP MUST be explicitly declared in the legend text when H_AMP != 1.

- Amplification MUST NOT alter any aggregation, smoothing, ordering, or totals; it is strictly a display transform.

LG-27: Scaling modes (must be declared; no silent switching)

The projection MUST use exactly one of the following scaling modes, explicitly declared in run metadata:

Mode A: Global scale (comparative magnitude preserved across titles)

- A single scale factor is derived from the maximum of the displayed signals over all titles and days:

- MAX_ALL = max over T,D of max( H_AMP*MA_HUMAN[D,T], MA_META[D,T] )

- Both envelopes use the same scale factor.

Mode B: Per-title scale (envelope shape emphasised; magnitudes not comparable across titles)

- For each title T, a local scale factor is derived:

- MAX_T = max over D of max( H_AMP*MA_HUMAN[D,T], MA_META[D,T] )

- Both envelopes for T share the same per-title scale factor.

If Mode B is used, the projection MUST NOT imply cross-title magnitude comparability.
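
The two scaling modes can be sketched as follows, assuming the smoothed series are held as dicts mapping title to a list of daily values. The function name `scale_factors` and the sample values are hypothetical.

```python
def scale_factors(ma_human, ma_meta, h_amp=1, mode="A"):
    """Return the maximum used for normalisation, per title (LG-27)."""
    per_title = {}
    for title in ma_human:
        h_peak = max((h_amp * v for v in ma_human[title]), default=0)
        m_peak = max(ma_meta.get(title, []), default=0)
        per_title[title] = max(h_peak, m_peak)  # MAX_T
    if mode == "A":
        # Global scale: every title shares MAX_ALL.
        max_all = max(per_title.values(), default=0)
        return {title: max_all for title in per_title}
    if mode == "B":
        # Per-title scale: magnitudes are not comparable across titles.
        return per_title
    # No silent fallback: an undeclared mode is an error.
    raise ValueError("scaling mode must be declared as 'A' or 'B'")

ma_human = {"X": [1, 2], "Y": [0, 1]}
ma_meta = {"X": [3, 0], "Y": [0.5, 0]}
mode_a = scale_factors(ma_human, ma_meta, h_amp=2, mode="A")
mode_b = scale_factors(ma_human, ma_meta, h_amp=2, mode="B")
```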

LG-28: Zero-signal masking (recommended; deterministic)

- To avoid long flat “rails”, for each title/day point:

- If (H_AMP*MA_HUMAN[D,T] == 0) AND (MA_META[D,T] == 0), the projection SHOULD omit rendering for that day point (no fill, no outline).

- If implemented, this behaviour MUST be declared in run metadata.
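
A minimal sketch of the masking test, assuming both smoothed series for a title are aligned lists over the same days. The function name `render_mask` is hypothetical; how the mask is passed to the plotting layer is left open.

```python
def render_mask(ma_human, ma_meta, h_amp=1):
    """Return True for day points that should be rendered (LG-28)."""
    return [
        not (h_amp * h == 0 and m == 0)
        for h, m in zip(ma_human, ma_meta)
    ]

mask = render_mask([0, 1, 0, 0], [0, 0, 2, 0])
```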

LG-29: Non-overlap constraint (mandatory)

- Row vertical spacing MUST be sufficient to prevent envelopes from touching adjacent rows.

- Let ROW_SPACING be the distance between adjacent row baselines.

- Let ROW_MAX be the maximum vertical excursion used for envelopes (above and below), expressed in row units.

- The invariant MUST hold:

- ROW_MAX <= (ROW_SPACING / 2) - MARGIN

- MARGIN is a fixed deterministic safety margin (default 0.08 in row units).

- If the projection cannot satisfy this constraint for the chosen scaling mode and amplification, it MUST fail explicitly rather than silently compressing or overlapping.
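
The invariant and its explicit-failure rule can be sketched as a guard evaluated before rendering. The function name `check_non_overlap` is hypothetical; the defaults follow the values stated above, with ROW_SPACING assumed to be 1 row unit.

```python
def check_non_overlap(row_max, row_spacing=1.0, margin=0.08):
    """Raise if ROW_MAX exceeds (ROW_SPACING / 2) - MARGIN (LG-29)."""
    limit = row_spacing / 2 - margin
    if row_max > limit:
        # Fail explicitly; never compress or overlap silently.
        raise ValueError(
            f"ROW_MAX {row_max} exceeds limit {limit:.4f}; "
            "adjust scaling mode, amplification or row spacing"
        )
    return limit

limit = check_non_overlap(0.40)
```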

LG-30: Legend placement

- The legend MUST be present.

- The legend MUST NOT obscure plotted envelopes.

- The legend SHOULD be placed in an area of minimal signal density (commonly the lead-in region x < 0) or a corner that does not overlap.

LG-31: Axis and annotation requirements

- X-axis label MUST state UTC/Zulu explicitly.

- The x=0 publication reference MUST be drawn as a vertical line.

- Major x ticks SHOULD be integers or stable intervals appropriate to the window (e.g., 10 days).

- Minor x ticks SHOULD be deterministic (e.g., 5 days).

- Random jitter is prohibited.

LG-36: Day graticules (X-axis gridlines)

- Major day graticules MUST be drawn at every major tick (default step 10 days).

- Minor day graticules MUST be drawn at every minor tick (default step 5 days).

- Gridline styling MUST be deterministic and MUST NOT dominate the envelopes.

Label Content

LG-32: Row label format (mandatory)

Each Y-axis label MUST be exactly:

"<title> (<publication_datetime_utc>) (h:<H_TOTAL>, m:<M_TOTAL>)"

- publication_datetime_utc MUST be rendered as an ISO-8601 UTC string in compact Zulu form:

- YYYY-MM-DDTHH:MMZ

- seconds MUST be omitted

- "+00:00" MUST NOT be used in place of "Z"

- h and m totals MUST correspond to LG-17 totals (pre-amplification).
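
A minimal sketch of the label format, assuming the publication datetime is timezone-aware. The function name `row_label` and the sample title and totals are hypothetical.

```python
from datetime import datetime, timedelta, timezone

def row_label(title, pub_dt, h_total, m_total):
    """Build the Y-axis label required by LG-32."""
    if pub_dt.tzinfo is None:
        raise ValueError("pub_dt must carry an explicit UTC offset")
    # Compact Zulu form: seconds omitted, literal "Z" rather than "+00:00".
    stamp = pub_dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%MZ")
    return f"{title} ({stamp}) (h:{h_total}, m:{m_total})"

label = row_label(
    "Example Title",
    datetime(2026, 1, 30, 1, 55, tzinfo=timezone.utc),
    120,
    45,
)
```

The totals passed in are the LG-17 values, taken before any H_AMP amplification.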

LG-38: Label margin sizing (mandatory; minimal sufficient)

- The left margin MUST be sized to be just sufficient for the widest Y-axis label, with no excessive white space.

- The implementation MUST compute text extents (or an equivalent deterministic measurement) and set the plotting left margin accordingly.

- Labels MUST NOT be clipped.

Validation and Verification (MANDATORY)

LG-33: Pre-render validation (data integrity)

A compliant implementation MUST validate:

- All included titles exist in MANIFEST and have valid UTC publication datetime.

- No excluded/unpublished titles are included.

- Title normalisation resolves to manifest keys only (no unresolved titles).

- HUMAN and META day series cover the full rollup day span with zeros filled (LG-16).

LG-34: Post-render verification (image integrity)

A compliant implementation MUST verify the rendered output satisfies:

- Fill opacity is opaque (no transparency washout).

- Row order is visually publication-ascending top-to-bottom.

- Envelopes do not touch adjacent rows (LG-29).

- Category baseline graticules are present for all rows (LG-23).

- Day graticules are present (major and minor) (LG-36).

- Legend is present and does not obscure envelopes.

- X-axis includes at least LEAD_MIN_DAYS lead-in (LG-20).

- x=0 reference line is present.

- Label timestamps are compact Zulu (…THH:MMZ), not “…00:00:00+00:00”.

If any verification fails, the projection MUST be rejected and regenerated.

Forbidden Behaviours

- Inferring publication dates from traffic.

- Local timezone conversions.

- Fuzzy matching titles to manifest.

- Merging meta hits into page hits.

- Silent scaling changes between runs without explicit declaration.

- Transparent fills.

- Overlapping rows.

- Excessive left label margin beyond what is required to avoid clipping.

B-1′ — Lifecycle Graphlets (LOG + MA(3)) Projection Invariants

Normative delta: This section is additive. It extends Appendix B-1 by defining a LOG+MA(3) lifecycle graphlet projection variant. Existing Appendix B-1 remains authoritative for non-log / non-MA(3) graphlets unless superseded by an explicit replacement declaration.

Linear amplitude graphlets are deprecated for lifecycle analysis of heavy-tailed access distributions. Log-amplitude MA(3) graphlets SHALL be the normative default unless explicitly overridden.

B-1′.1 Scope

These invariants govern the production of Lifecycle Graphlets where:

- amplitudes are log-compressed using ln(1+x), and

- signals are smoothed with a centered moving average of width 3 (MA(3)).

The projection SHALL be deterministic, reproducible, and derived solely from rollup TSV outputs produced under Appendix B (logrollup invariants) and the corpus manifest.

B-1′.2 Authoritative Inputs

The projection SHALL use only:

- Manifest fields: (title, safe-file-name, publication_date)

- Rollup TSV hourly buckets produced by logrollup (Appendix B)

Raw nginx logs MUST NOT be used. All times MUST be interpreted as UTC/Zulu.

B-1′.3 Title Eligibility

A title SHALL be included iff:

- it exists in the manifest; and

- its publication_date parses as a valid ISO datetime in UTC (or with offset convertible to UTC).

Titles with an invalid publication_date SHALL be excluded (they are not treated as publications).

B-1′.4 Path Separation (Hard Gate)

The projection MUST NOT merge page and meta namespaces.

Page (human readership) paths:

- /pub/<Title>

- MUST NOT include any path containing -meta/

Metadata paths:

- /pub-meta/<type>/<Title>

- or equivalently any path containing -meta/ and terminating with <Title>

Heuristic inference of page/meta classification is forbidden.
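
The hard gate can be sketched as an exact-match classifier. This assumes the title has already been normalised to its path form and that "terminating with <Title>" means the final path segment equals the title; both are assumptions for illustration, and `classify_path` is a hypothetical name.

```python
def classify_path(path, title):
    """Return 'page', 'meta', or None for one request path (B-1′.4).

    Only exact string tests are used; nothing is inferred heuristically.
    """
    if "-meta/" in path:
        # Metadata namespace: the last segment must be the title itself.
        return "meta" if path.endswith("/" + title) else None
    if path == "/pub/" + title:
        return "page"
    return None

page_kind = classify_path("/pub/Some_Title", "Some_Title")
meta_kind = classify_path("/pub-meta/history/Some_Title", "Some_Title")
other_kind = classify_path("/pub/Other_Title", "Some_Title")
```

Testing for "-meta/" first ensures that a metadata path can never be counted as a page hit.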

B-1′.5 Signal Definitions

For each title T and UTC day D, define daily counts:

Human (blue)

- H[D,T] = Σ human_get_ok for page paths only.

Metadata (orange)

Exactly one of the following modes MUST be selected and declared in projection metadata and title text:

- Meta-ALL mode (legacy):

- M[D,T] = Σ (all actor bins matching *_get_*) for meta paths only

- Meta-OK mode (filtered):

- M[D,T] = Σ (all actor bins matching *_get_ok) for meta paths only

No other status-restricted mode is permitted unless explicitly added by amendment.

B-1′.6 UTC Day Aggregation

Hourly buckets SHALL be aggregated into UTC day bins using bucket_start_utc and UTC day boundaries:

- D = floor_utc_day(bucket_start_utc)

All daily series MUST be zero-filled across the graphlet time window for missing days.
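
A minimal sketch of the hourly-to-daily aggregation, assuming each rollup row has been reduced to a pair of (bucket_start_utc, count) and that bucket_start_utc is an ISO-8601 string ending in "Z". The actual TSV column layout is defined by logrollup and is not reproduced here; `daily_counts` is a hypothetical name.

```python
from collections import defaultdict
from datetime import date, datetime, timezone

def daily_counts(hourly_rows):
    """Sum hourly bucket counts into UTC day bins (B-1′.6)."""
    out = defaultdict(int)
    for bucket_start, count in hourly_rows:
        # Accept a trailing "Z" on Python versions whose fromisoformat
        # does not parse it directly.
        dt = datetime.fromisoformat(bucket_start.replace("Z", "+00:00"))
        # D = floor_utc_day(bucket_start_utc)
        out[dt.astimezone(timezone.utc).date()] += count
    return dict(out)

rows = [
    ("2026-01-30T23:00:00Z", 2),
    ("2026-01-31T00:00:00Z", 3),
    ("2026-01-31T05:00:00Z", 1),
]
per_day = daily_counts(rows)
```

Zero-filling across the graphlet window is a separate step applied to the resulting dict.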

B-1′.7 Relative Day Axis (Lead/Lag)

For each title T with publication datetime P[T], the x-axis value for UTC day D is:

- REL_DAY[D,T] = floor((D_start_utc − P[T]) / 86400 seconds)

A publication reference line MUST be drawn at x = 0.

Lead window MUST be explicitly declared. If not declared, default lead window SHALL be -10 days.

B-1′.8 Smoothing (MA(3))

A centered MA(3) SHALL be applied to both H and M daily series:

- MA3[x][k] = (x[k-1] + x[k] + x[k+1]) / 3

Boundary handling MUST be deterministic:

- zero-padding at both ends (k-1 and k+1 outside bounds treated as 0)

No other smoothing (adaptive or variable window) is permitted under this projection variant.
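
The centred MA(3) with zero-padding can be sketched as follows, assuming the daily series is a zero-filled list. The function name `ma3` and the sample values are hypothetical.

```python
def ma3(x):
    """Centred moving average of width 3 with zero-padding at both ends."""
    padded = [0, *x, 0]
    # padded[k], padded[k + 1], padded[k + 2] are x[k-1], x[k], x[k+1].
    return [
        (padded[k] + padded[k + 1] + padded[k + 2]) / 3
        for k in range(len(x))
    ]

smoothed = ma3([3, 0, 6])
```

Zero-padding pulls the first and last values towards zero, which is deterministic and keeps the output the same length as the input.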

B-1′.9 Log Amplitude Transform

After MA(3), apply log compression using ln(1+x):

- H_log[D,T] = ln(1 + H_AMP × MA3(H[D,T]))

- M_log[D,T] = ln(1 + MA3(M[D,T]))

Where:

- H_AMP is a declared, display-only amplification factor (integer ≥ 1).