Nginx-graphs

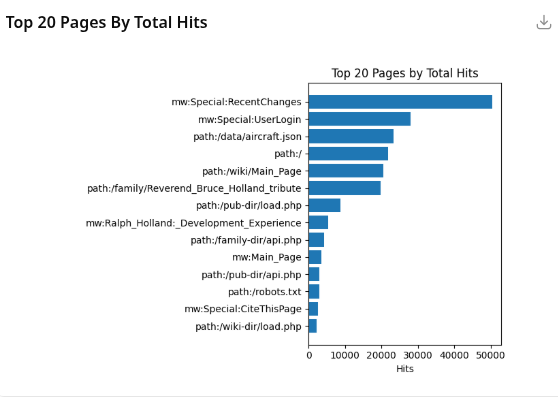

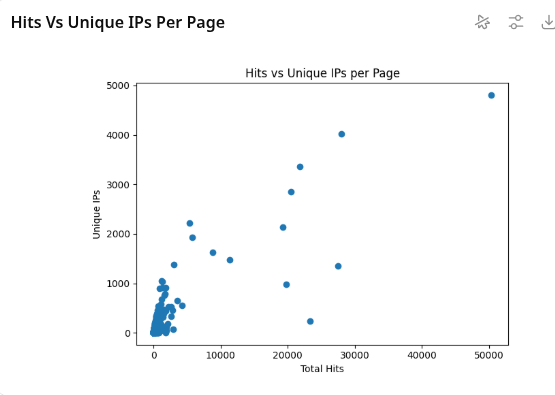

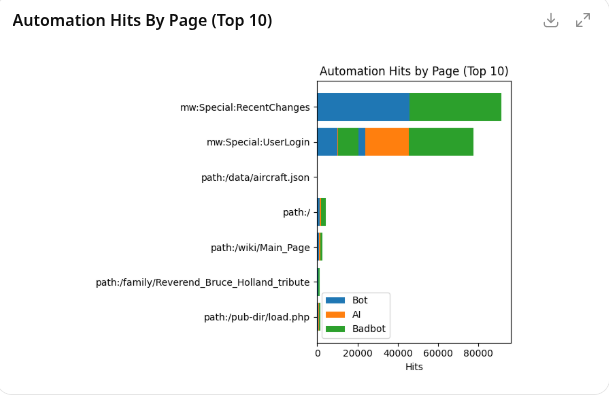

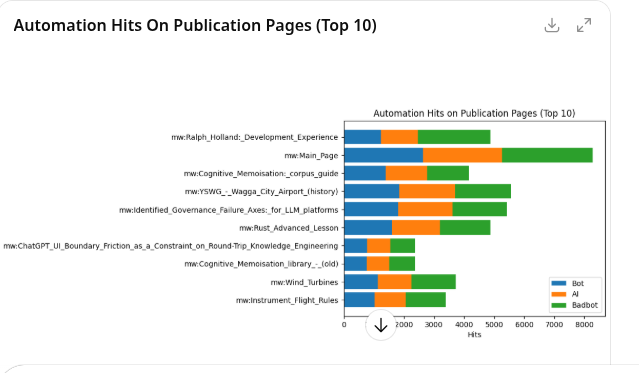

all virtual servers

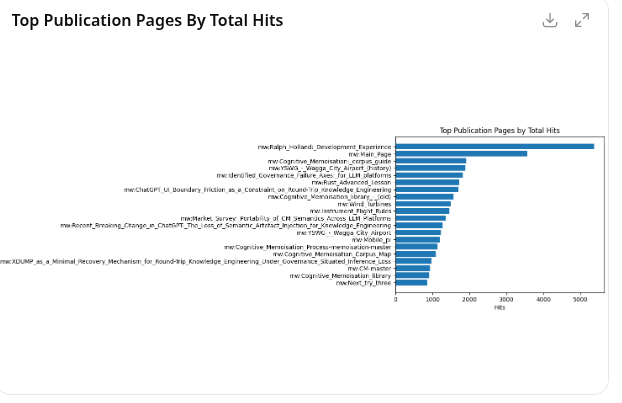

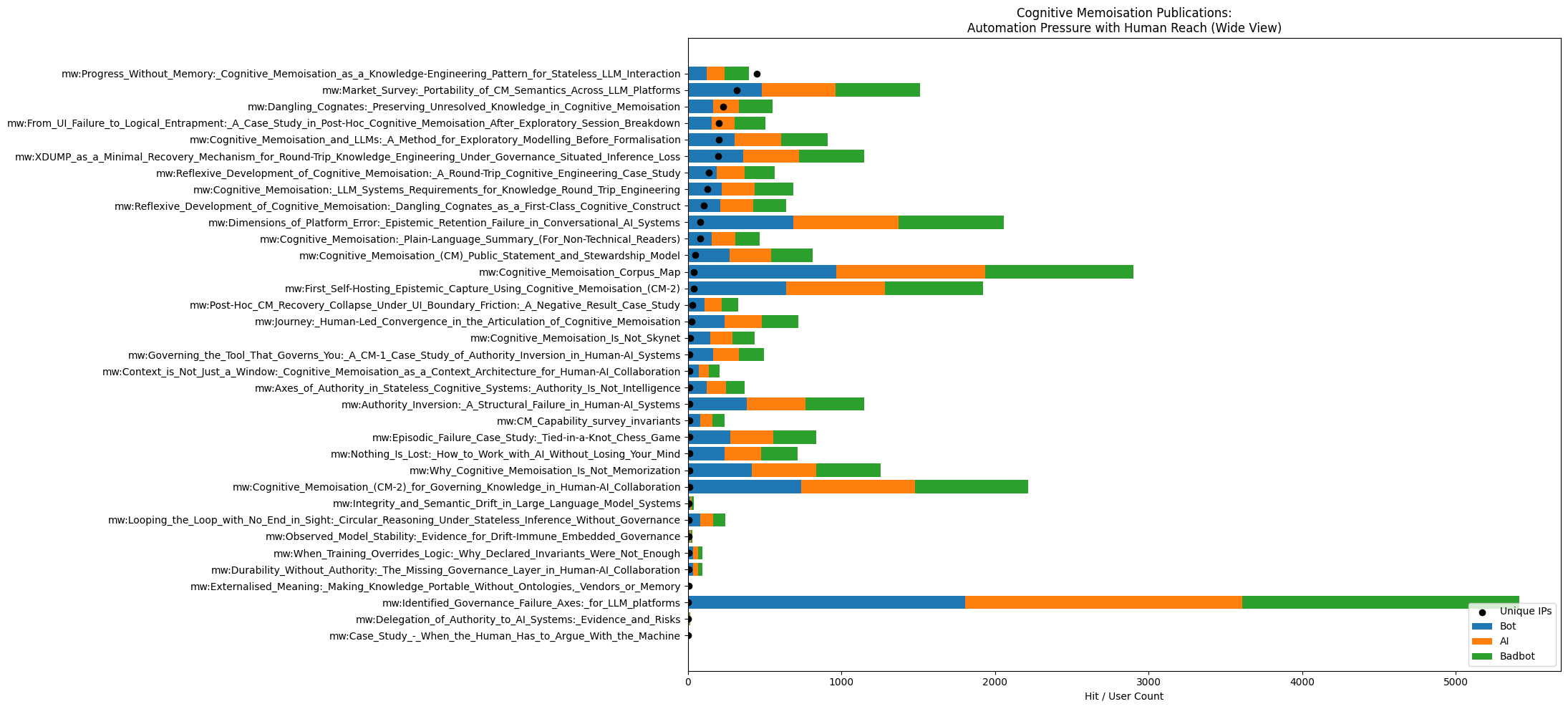

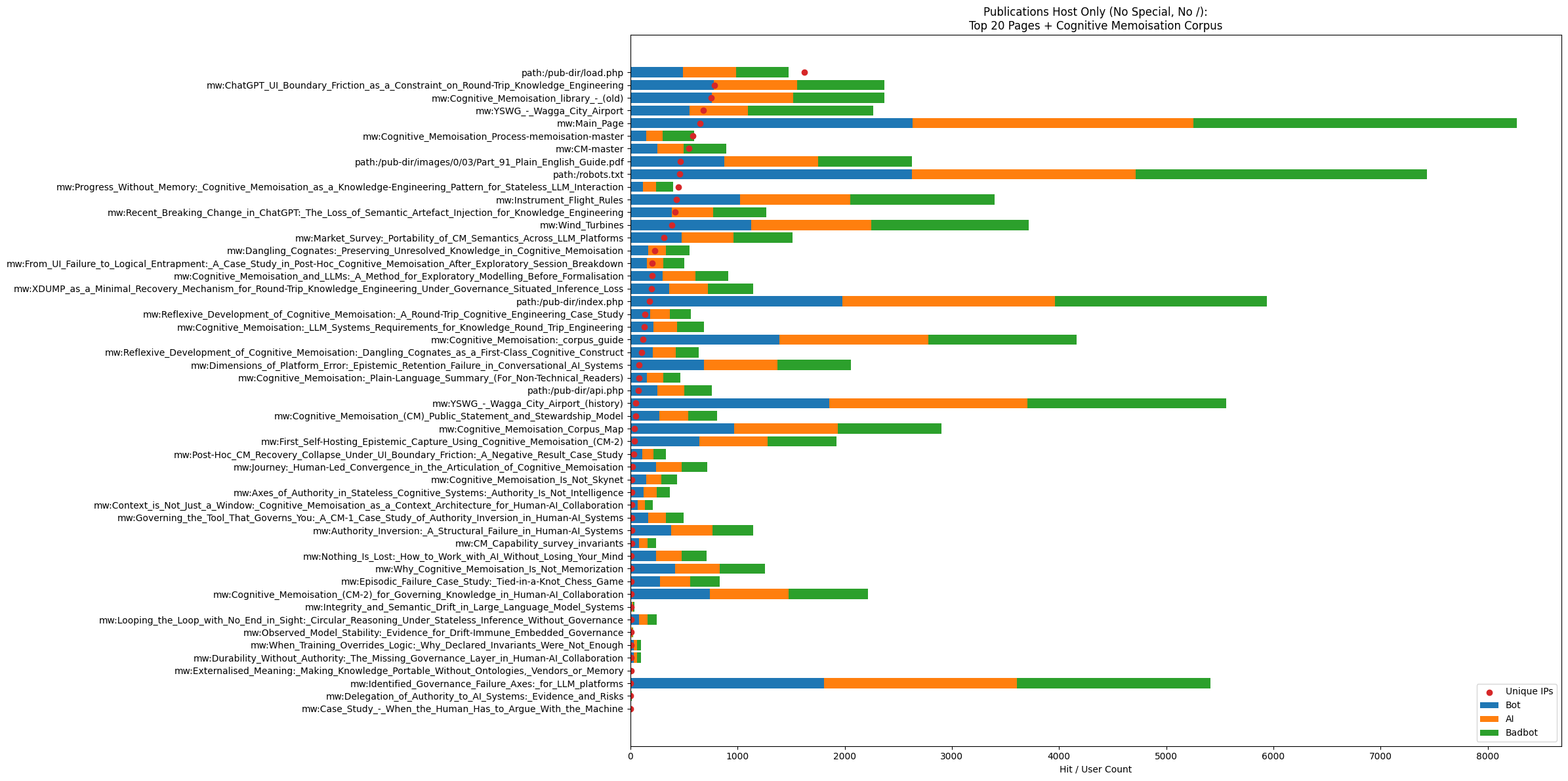

publications

human traffic

MWDUMP Normative: Log-X Traffic Projection (Publications ∪ CM)

Scope

This normative defines a single 2-D projection plot over the union of:

- Top-N publication pages (by human hits)

- All CM pages

Each page SHALL appear exactly once.

Population

- Host SHALL be publications.arising.com.au unless explicitly stated otherwise.

- Page set SHALL be: UNION( TopN_by_human_hits(publications), All_CM_pages )

Ordering Authority

- Rows (Y positions) SHALL be sorted by human hit count, descending.

- Human traffic SHALL define ordering authority and SHALL NOT be displaced by automation classes.
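The population and ordering rules above can be sketched in a few lines of Python. This is a minimal illustration rather than the reference implementation: the function name `select_population`, its arguments, and the alphabetical tie-break are assumptions introduced here, and CM pages with no recorded human hits are treated as zero.

```python
def select_population(human_hits, cm_pages, top_n):
    """Return UNION(TopN_by_human_hits, All_CM_pages), ordered by human hits.

    human_hits: dict mapping page name -> human hit count (hypothetical shape)
    cm_pages:   iterable of CM page names
    """
    # Rank by human hits only; the page name breaks ties deterministically.
    ranked = sorted(human_hits, key=lambda p: (-human_hits[p], p))
    # A set union guarantees that each page appears exactly once.
    selected = set(ranked[:top_n]) | set(cm_pages)
    # Automation counts never enter the sort key.
    return sorted(selected, key=lambda p: (-human_hits.get(p, 0), p))
```

A CM page that also falls in the Top-N is not duplicated, because membership is decided by the set union before ordering.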

Axes

Y Axis (Left)

- Y axis SHALL be categorical page identifiers.

- Full page names SHALL be rendered to the left of the plot region.

- Y axis SHALL be inverted so highest human-hit pages are at the top.

X Axis (Bottom + Top)

- X axis SHALL be log10.

- X axis ticks SHALL be fixed decades: 10^0, 10^1, 10^2, ... up to the highest decade required by the data.

- X axis SHALL be duplicated at the top with identical ticks/labels.

- Vertical gridlines SHALL be rendered at each decade (10^n). Minor ticks SHOULD be suppressed.
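One way to derive the fixed decade ticks is shown here as a sketch. It assumes that "the highest decade required by the data" means the smallest power of ten that is greater than or equal to the largest plotted value; the function name `decade_ticks` is hypothetical.

```python
def decade_ticks(values):
    """Return [10^0, 10^1, ...] up to the first decade covering max(values)."""
    # Zero and negative counts cannot be placed on a log axis, so ignore them.
    peak = max((v for v in values if v > 0), default=1)
    ticks = [1]
    # Integer arithmetic avoids floating-point log10 edge cases at exact decades.
    while ticks[-1] < peak:
        ticks.append(ticks[-1] * 10)
    return ticks
```

Because the list stops at the first decade that covers the data, the same ticks can be applied to the bottom and top axes without adding extra decades.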

Metrics (Plotted Series)

For each page, the following series SHALL be plotted as independent point overlays sharing the same X axis:

- Human hits: hits_human = hits_total - (hits_bot + hits_ai + hits_badbot)

- Bot hits: hits_bot

- AI hits: hits_ai

- Bad-bot hits: hits_badbot
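The four series can be derived per page as sketched below. The column names follow the definitions above; the function name `derive_series` and the decision to raise on a negative remainder are assumptions made for this illustration.

```python
def derive_series(row):
    """Split one page's counts into the four plotted series.

    row: dict with hits_total, hits_bot, hits_ai and hits_badbot.
    """
    automation = row["hits_bot"] + row["hits_ai"] + row["hits_badbot"]
    human = row["hits_total"] - automation
    if human < 0:
        # The automation classes are meant to be mutually exclusive subsets
        # of the total, so a negative remainder signals inconsistent input.
        raise ValueError("automation hits exceed hits_total")
    return {
        "human": human,
        "bot": row["hits_bot"],
        "ai": row["hits_ai"],
        "badbot": row["hits_badbot"],
    }
```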

Marker Semantics

Markers SHALL be distinct per series:

- Human hits: filled circle (●)

- Bot hits: cross (×)

- AI hits: triangle (△)

- Bad-bot hits: square (□)

Geometry (Plot Surface Scaling)

- The plot SHALL be widened by increasing canvas width and/or decreasing reserved margins.

- The plot SHALL NOT be widened by extending the X-axis data range beyond the highest required decade.

- Figure aspect SHOULD be >= 3:1 (width:height) for tall page lists.

Prohibitions

- The plot SHALL NOT reorder pages by bot, AI, bad-bot, or total hits.

- The plot SHALL NOT add extra decades beyond the highest decade required by the data.

- The plot SHALL NOT omit X-axis tick labels.

- The plot SHALL NOT collapse series into totals.

- The plot SHALL NOT introduce a time axis in this projection.

Title

The plot title SHOULD be: "Publications ∪ CM Pages (Ordered by Human Hits): Human vs Automation (log scale)"

Validation

A compliant plot SHALL satisfy:

- Pages readable on left

- Decade ticks visible on bottom and top

- Scatter region occupies the majority of horizontal area to the right of labels

- X-axis decade range matches data-bound decades (no artificial expansion)

Hits Per Page

SVG

New Page Invariants

Line Graph invariants

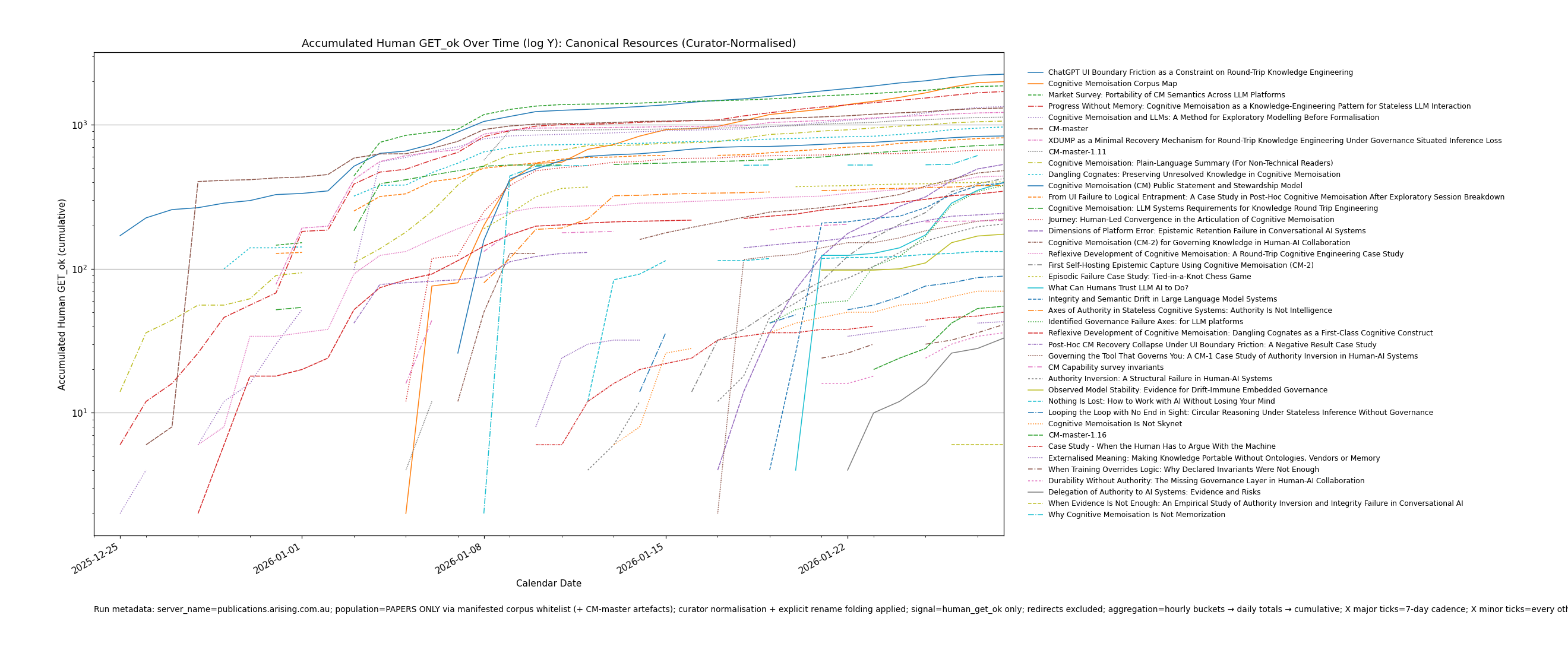

This is for the accumulated human hits time series.

##BEGIN_MWDUMP

#title: MW-line projection invariants (normative) — accumulated human hits (curator-normalised resources)

#format: MWDUMP

#name: mw-line projection (invariants)

#purpose:

# Produce deterministic line projections (time series) of accumulated human interest per MediaWiki resource,

# using curator-locked resource normalisation and rename folding so that each logical page appears exactly once.

inputs:

- Hourly bucket TSVs produced by the logrollups Perl script

- Required columns:

server_name, path, human_get_ok

(other columns may exist and MUST be ignored by this projection)

scope:

- Host MUST be publications.arising.com.au unless explicitly stated otherwise.

- Projection MUST be computed over the full time range present in the bucket set:

from earliest bucket start time to latest bucket end time.

terminology:

- "bucket" means one rollup file representing one hour.

- "resource" means the canonical MediaWiki page identity (title after normalisation + curator rename folding).

- "canonical title" means the curator-latest page title that represents the resource.

- "human signal" for this projection means human_get_ok only.

resource_normalisation (mandatory):

Given a request path (df.path):

1) URL decoding:

- Any URL-encoded sequences MUST be decoded prior to all other steps.

2) Title extraction:

- If path matches ^/pub/<title>, then <title> MUST be used as the title candidate.

- If path matches ^/pub-dir/index.php?<query>, then the title candidate MUST be taken from the title-bearing query param (e.g. title=<title> or edit=<title>).

- If the title param is absent, the title candidate MAY be taken from page=<title> if present.

- If both are absent, the record MUST NOT be treated as a page resource for this projection.

3) Underscore canonicalisation:

- All underscores "_" in the title candidate MUST be replaced with space " ".

4) Dash canonicalisation (curator action):

- UTF8 dashes commonly rendered as “–” or “—” MUST be replaced with ASCII "-".

5) Whitespace normalisation:

- Runs of whitespace MUST be collapsed to a single space.

- Leading/trailing whitespace MUST be trimmed.

6) Namespace exclusions (mandatory; noise suppression):

- The following namespaces MUST be excluded from graph projections (case-insensitive prefix match):

Special:

Category:

Category talk:

Talk:

User:

User talk:

File:

Template:

Help:

MediaWiki:

- Obvious misspellings of the above namespaces (e.g. "Catgeory:") SHOULD be excluded.

7) Infrastructure exclusions (mandatory):

- The following endpoints MUST be excluded from resource population:

/robots.txt

sitemap (any case-insensitive occurrence)

/resources or /resources/ at any depth (case-insensitive)

/pub-dir/load.php

/pub-dir/api.php

/pub-dir/rest.php/v1/search/title

- Image and icon resources MUST be excluded by extension:

.png .jpg .jpeg .gif .svg .ico .webp
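The seven normalisation steps can be sketched as a single Python function. This is an illustrative reading of the rules, not the projection's actual code: the name `normalise_title`, the lookup order in `TITLE_PARAMS`, and the choice to apply the infrastructure exclusions before title extraction are assumptions. The "/resources" rule is implemented as a literal substring match, which is deliberately strict.

```python
import re
from urllib.parse import unquote

EXCLUDED_NAMESPACES = (
    "special:", "category:", "category talk:", "talk:", "user:",
    "user talk:", "file:", "template:", "help:", "mediawiki:",
)
EXCLUDED_ENDPOINTS = (
    "/robots.txt", "/pub-dir/load.php", "/pub-dir/api.php",
    "/pub-dir/rest.php/v1/search/title",
)
IMAGE_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".webp")
TITLE_PARAMS = ("title", "edit", "page")  # assumed lookup order


def normalise_title(raw_path):
    """Return the normalised title for a request path, or None if excluded."""
    path = unquote(raw_path)                          # 1) URL decoding
    base, _, query = path.partition("?")
    lowered = base.lower()
    # 7) infrastructure exclusions
    if lowered in EXCLUDED_ENDPOINTS or lowered.endswith(IMAGE_EXTENSIONS):
        return None
    if "sitemap" in path.lower() or "/resources" in lowered:
        return None
    # 2) title extraction
    title = None
    if base.startswith("/pub/"):
        title = base[len("/pub/"):]
    elif base == "/pub-dir/index.php":
        params = dict(kv.split("=", 1) for kv in query.split("&") if "=" in kv)
        title = next((params[k] for k in TITLE_PARAMS if params.get(k)), None)
    if not title:
        return None
    title = title.replace("_", " ")                   # 3) underscores
    title = re.sub("[\u2013\u2014]", "-", title)      # 4) en/em dash to ASCII
    title = " ".join(title.split())                   # 5) whitespace
    if title.lower().startswith(EXCLUDED_NAMESPACES):  # 6) namespaces
        return None
    return title or None
```

Decoding before splitting the query follows step 1 literally; a title that itself contains an encoded "&" or "?" would be split incorrectly by this sketch.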

curator_rename_folding (mandatory):

- A curator-maintained equivalence mapping SHALL be applied AFTER resource_normalisation.

- The mapping MUST be expressed as:

legacy_title -> canonical_latest_title

- Any resource whose normalised title matches a legacy_title MUST be rewritten to the canonical_latest_title.

- The canonical_latest_title MUST be the ONLY label used for output and plotting.

- The mapping MUST be treated as authoritative; no heuristic folding is permitted beyond this mapping.
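Rename folding amounts to a dictionary lookup applied after normalisation, followed by re-summing so that folded titles merge. The sketch below assumes counts are held in a plain dict keyed by normalised title; `fold_counts` is a hypothetical name, and the single mapping entry in the usage note is taken from the rename list on this page.

```python
from collections import Counter


def fold_counts(counts, renames):
    """Rewrite legacy titles to canonical titles and merge their counts.

    counts:  dict of normalised title -> hits
    renames: dict of legacy_title -> canonical_latest_title (curator-maintained)
    """
    folded = Counter()
    for title, hits in counts.items():
        # Only exact matches in the curator mapping are folded; no heuristics.
        folded[renames.get(title, title)] += hits
    return dict(folded)
```

For example, with `renames = {"Cognitive Memoisation: corpus guide": "Cognitive Memoisation Corpus Map"}`, hits recorded under the legacy title are added to the canonical title and the legacy label disappears from the output.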

time_basis (mandatory):

- Each bucket represents one hour.

- For line plotting, bucket data MUST be aggregated to daily totals:

daily_hits(resource, day) = SUM human_get_ok over all buckets whose start time falls on 'day'

- The day boundary MUST be consistent and deterministic (use the bucket timestamp’s calendar date).

metric_definition (mandatory):

- daily_hits MUST be computed from human_get_ok only.

- Redirect counts MUST NOT be included.

- No other agent classes are included in this projection.

accumulation (mandatory):

- The plotted value MUST be cumulative (accumulated) hits:

cum_hits(resource, day_i) = SUM_{d <= day_i} daily_hits(resource, d)
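The daily aggregation and accumulation formulas can be sketched as follows. The input shape (tuples of bucket start time, resource, and human_get_ok) and the name `cumulative_daily_hits` are assumptions for illustration; days with no hits for a resource are simply absent from its series, so the cumulative value carries forward implicitly.

```python
from collections import defaultdict
from datetime import datetime
from itertools import accumulate


def cumulative_daily_hits(buckets):
    """buckets: iterable of (bucket_start: datetime, resource, human_get_ok)."""
    daily = defaultdict(lambda: defaultdict(int))
    for start, resource, hits in buckets:
        # The day boundary is the calendar date of the bucket start time.
        daily[resource][start.date()] += hits
    result = {}
    for resource, per_day in daily.items():
        days = sorted(per_day)
        # Running sum over days in date order gives cum_hits(resource, day_i).
        totals = accumulate(per_day[d] for d in days)
        result[resource] = list(zip(days, totals))
    return result
```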

ordering and determinism (mandatory):

- All processing MUST be deterministic:

- no random jitter

- stable ordering of series in legends and outputs

- Legend ordering SHOULD be:

- sorted by accumulated human_get_ok, descending

rendering_invariants (line plot):

A) Axes:

- X axis MUST be calendar date from first day to last day in the dataset.

- X tick labels MUST be shown every 7 days (weekly cadence), each with a major tick.

- X tick labels MUST be rotated obliquely (e.g. 30 degrees) to prevent overlap.

- Minor X ticks SHALL be placed at each day between major ticks.

B) Y axis:

- Y axis MUST be logarithmic for accumulated projections unless explicitly set to linear.

- Zero and negative values MUST NOT be plotted on a log axis.

C) Series encoding:

- A designated anchor series (if present) SHOULD use a solid line.

- Other series MUST use distinct line styles (dash/dot variants) to disambiguate when colours repeat.

D) Legend:

- Legend MUST be present and MUST list canonical_latest_title for each series.

- Legend MUST NOT be clipped in the output image:

- Reserve sufficient right margin OR save with bounding box expansion.

- Legend MAY be placed outside the plot region on the RHS.

title_and_caption (mandatory):

- Title SHOULD be:

"Accumulated Human GET_ok Over Time (log Y): Canonical Resources (Curator-Normalised)"

- Caption/run metadata MUST state:

- server_name filter

- population rule used

- that curator rename folding was applied

- that redirects were excluded and human_get_ok is the sole signal

- that daily aggregation was used (hourly buckets → daily totals)

validation (mandatory):

A compliant projection SHALL satisfy:

- Each logical resource appears exactly once (after normalisation + rename folding).

- daily_hits sums match the underlying buckets for human_get_ok.

- cum_hits is monotonic non-decreasing per resource.

- X minor ticks occur at each day.

- X labels and major ticks occur at 7-day cadence and labels are readable.

- Legend is fully visible and non-clipped.

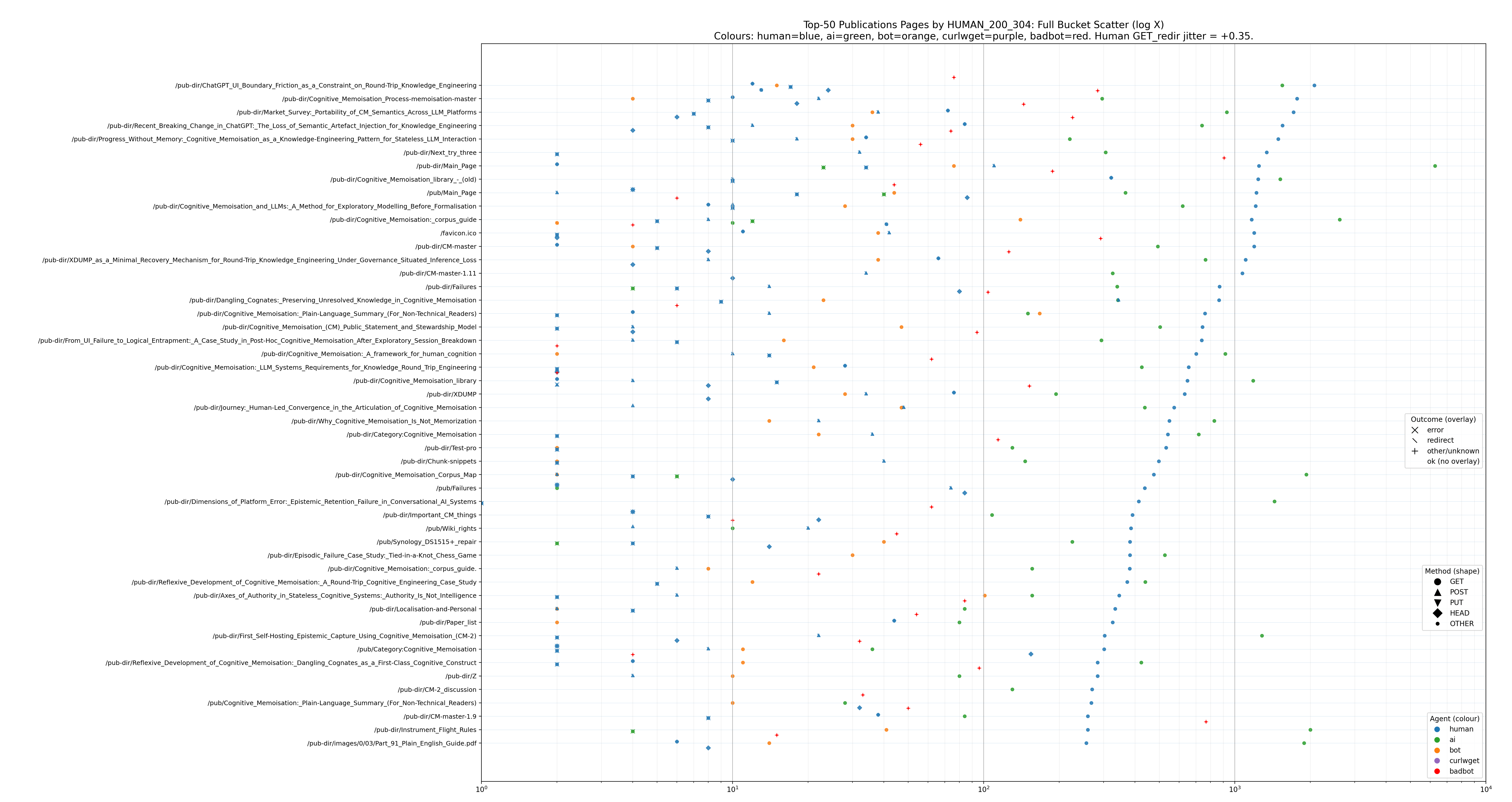

MW-page projection invariants (normative) — CM publications analytics (for multiple GET/PUT/POST et al)

This is for the daily hits scatter plot for all agents, including un-identified/human agents.

format: MWDUMP

name: dailyhits: GET/PUT/POST/HEAD et al. agent projection (invariants)

purpose:

Produce human-meaningful MediaWiki publication page analytics from nginx bucket TSVs by excluding infrastructural noise, normalising labels, and rendering deterministic page-level projection plots with stable encodings.

inputs:

- Bucket TSVs produced by page_hits_bucketfarm_methods.pl (server_name + page_category present; method taxonomy HEAD/GET/POST/PUT/OTHER across agent classes).

- Column naming convention:

<agent>_<METHOD>_<outcome>

where:

agent ∈ {human, ai, bot, badbot, curlwget} (curlwget optional but supported)

METHOD ∈ {GET, POST, PUT, HEAD, OTHER}

outcome ∈ {ok, redir, client_err, server_err, other} (exact set is whatever the bucketfarm emits; treat unknown outcomes as other for rendering)
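A column name following this convention can be split as sketched below. The function name `parse_column` is hypothetical; the sets mirror the ones listed above, and unknown outcomes are mapped to `other` as the convention suggests. Columns that do not fit the pattern (for example `server_name` or `page_category`) yield `None`.

```python
AGENTS = {"human", "ai", "bot", "badbot", "curlwget"}
METHODS = {"GET", "POST", "PUT", "HEAD", "OTHER"}
OUTCOMES = {"ok", "redir", "client_err", "server_err", "other"}


def parse_column(name):
    """Split '<agent>_<METHOD>_<outcome>' into a normalised triple, or None."""
    # maxsplit=2 keeps outcomes such as 'client_err' in one piece.
    parts = name.split("_", 2)
    if len(parts) != 3:
        return None
    agent, method, outcome = parts[0].lower(), parts[1].upper(), parts[2].lower()
    if agent not in AGENTS or method not in METHODS:
        return None
    if outcome not in OUTCOMES:
        outcome = "other"  # unknown outcomes render as 'other'
    return agent, method, outcome
```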

projection_steps:

1) Server selection (mandatory):

- Restrict to the publications vhost only (e.g. --server publications.<domain> at bucket-generation time, or filter server_name == publications.<domain> at projection time).

2) Page namespace selection (mandatory):

- Exclude MediaWiki Special pages:

page_category matches ^p:Special

3) Infrastructure exclusions (mandatory; codified noise set):

- Exclude root:

page_category == p:/

- Exclude robots:

page_category == p:/robots.txt

- Exclude sitemap noise:

page_category contains "sitemap" (case-insensitive)

- Exclude resources anywhere in path (strict):

page_category contains /resources OR /resources/ at any depth (case-insensitive)

- Exclude MediaWiki plumbing endpoints (strict; peak suppressors):

page_category == p:/pub-dir/index.php

page_category == p:/pub-dir/load.php

page_category == p:/pub-dir/api.php

page_category == p:/pub-dir/rest.php/v1/search/title
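Steps 2 and 3 reduce to a predicate over `page_category`. The sketch below is one possible reading: `is_excluded` is a hypothetical name, and the "/resources" rule is applied as a literal case-insensitive substring match, which is strict enough to also drop any page whose path segment merely begins with "resources".

```python
EXACT_EXCLUSIONS = {
    "p:/",
    "p:/robots.txt",
    "p:/pub-dir/index.php",
    "p:/pub-dir/load.php",
    "p:/pub-dir/api.php",
    "p:/pub-dir/rest.php/v1/search/title",
}


def is_excluded(page_category):
    """Return True when a page_category belongs to the codified noise set."""
    if page_category.startswith("p:Special"):   # MediaWiki Special pages
        return True
    if page_category in EXACT_EXCLUSIONS:       # root, robots, plumbing
        return True
    lowered = page_category.lower()
    return "sitemap" in lowered or "/resources" in lowered
```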

4) Label normalisation (mandatory for presentation):

- Strip leading "p:" prefix from page_category for chart/table labels.

- Do not introduce case-splitting in category keys:

METHOD must be treated as UPPERCASE;

outcome must be treated as lowercase;

the canonical category key is METHOD_outcome.

5) Aggregation invariant (mandatory for page-level projection):

- Aggregate counts across all rollup buckets to produce one row per resource:

GROUP BY path (or page_category label post-normalisation)

SUM all numeric category columns.

- After aggregation, each resource must appear exactly once in outputs.

6) Human success spine (mandatory default ordering):

- Define the ordering metric HUMAN_200_304 as:

HUMAN_200_304 := human_GET_ok + human_GET_redir

Notes:

- This ordering metric is normative for “human success” ranking.

- Do not include 301 (or any non-bucketed redirect semantics) unless the bucketfarm’s redir bin explicitly includes it; ordering remains strictly based on the bucketed columns above.

7) Ranking + Top-N selection (mandatory defaults):

- Sort resources by HUMAN_200_304 descending.

- Select Top-N resources after exclusions and aggregation:

Default N = 50 (configurable; must be stated in the chart caption if changed).
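Steps 5 to 7 (aggregate, compute the human success spine, rank, and cut to Top-N) can be sketched together. The input shape (pairs of label and per-bucket counts), the name `rank_resources`, and the alphabetical tie-break are assumptions made for this illustration.

```python
from collections import defaultdict


def rank_resources(rows, top_n=50):
    """rows: iterable of (label, counts) where counts maps column -> int."""
    totals = defaultdict(lambda: defaultdict(int))
    for label, counts in rows:
        # Step 5: sum every numeric category column per resource.
        for column, value in counts.items():
            totals[label][column] += value

    def spine(label):
        # Step 6: HUMAN_200_304 := human_GET_ok + human_GET_redir
        counts = totals[label]
        return counts.get("human_GET_ok", 0) + counts.get("human_GET_redir", 0)

    # Step 7: descending by the spine; the label breaks ties deterministically.
    ranked = sorted(totals, key=lambda label: (-spine(label), label))
    return [(label, spine(label)) for label in ranked[:top_n]]
```

Bot, AI, and bad-bot columns are summed in step 5 but never contribute to the ordering metric.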

outputs:

- A “page projection” scatter view derived from the above projection is MW-page projection compliant if it satisfies all rendering invariants below.

rendering_invariants (scatter plot):

A) Axes:

- X axis MUST be logarithmic (log X).

- X axis MUST include “log paper” verticals:

- Major decades at 10^k

- Minor lines at 2..9 * 10^k

- Y axis is resource labels ordered by HUMAN_200_304 descending.

B) Label alignment (mandatory):

- The resource label baseline (tick y-position) MUST align with the HUMAN GET success spine:

- Pin human GET_ok points to the label baseline (offset = 0).

- Draw faint horizontal baseline guides at each resource row to make alignment visually explicit.

C) “Plot every category” invariant (mandatory when requested):

- Do not elide, suppress, or collapse any bucketed category columns in the scatter.

- All method/outcome bins present in the bucket TSVs MUST be plotted for each agent class that exists in the data.

D) Deterministic intra-row separation (mandatory when plotting many categories):

- Within each resource row, apply a deterministic vertical offset per hit category key (METHOD_outcome) to reduce overplotting.

- Exception: the label baseline anchor above (human GET_ok) must remain on baseline.

E) Specific jitter rule for human GET redirects (mandatory when requested):

- If human GET_redir is visually paired with human GET_ok at the same x-scale, apply a small deterministic vertical jitter to human GET_redir only:

y(human GET_redir) := baseline + jitter

where jitter is small and fixed (e.g. +0.35 in the current reference plot).
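Rules B, D and E can be combined into a small, fully deterministic placement function. This is a sketch under stated assumptions: the even spacing of offsets over sorted category keys, the `span` default, and the names `row_offsets` and `y_position` are all hypothetical; only the baseline anchor and the +0.35 redirect jitter come from the text above.

```python
def row_offsets(category_keys, span=0.3):
    """Assign each METHOD_outcome key a fixed offset within [-span, +span]."""
    keys = sorted(set(category_keys))
    if len(keys) <= 1:
        return {k: 0.0 for k in keys}
    step = 2 * span / (len(keys) - 1)
    # Sorted order makes the assignment independent of input order.
    return {k: round(-span + i * step, 6) for i, k in enumerate(keys)}


def y_position(row_index, agent, key, offsets, redirect_jitter=0.35):
    """Return the y coordinate for one point in a resource row."""
    if agent == "human" and key == "GET_ok":
        return float(row_index)               # pinned to the label baseline
    if agent == "human" and key == "GET_redir":
        return row_index + redirect_jitter    # fixed jitter, never random
    return row_index + offsets[key]
```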

encoding_invariants (agent/method/outcome):

- Agent MUST be encoded by colour (badbot MUST be red).

- Method MUST be encoded by base shape:

GET -> circle (o)

POST -> upright triangle (^)

PUT -> inverted triangle (v)

HEAD -> diamond (D)

OTHER-> dot (.)

- Outcome MUST be encoded as an overlay in agent colour:

error (client_err or server_err) -> x

redirect (redir) -> diagonal slash (/ rendered as a 45° line marker)

other (other or unknown) -> +

ok -> no overlay
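The method and outcome encodings can be held in two lookup tables, sketched here. The symbol codes are the ones listed above; the function name `encode` and the fallbacks (unknown method drawn as OTHER, unknown outcome drawn as other) are assumptions for illustration. How the "/" overlay is actually drawn is left to the renderer.

```python
METHOD_MARKERS = {"GET": "o", "POST": "^", "PUT": "v", "HEAD": "D", "OTHER": "."}
OUTCOME_OVERLAYS = {
    "ok": None,          # no overlay
    "redir": "/",        # diagonal slash
    "client_err": "x",   # both error classes share one overlay
    "server_err": "x",
    "other": "+",
}


def encode(method, outcome):
    """Return (base_shape, overlay) for a METHOD/outcome pair."""
    base = METHOD_MARKERS.get(method.upper(), METHOD_MARKERS["OTHER"])
    overlay = OUTCOME_OVERLAYS.get(outcome.lower(), OUTCOME_OVERLAYS["other"])
    return base, overlay
```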

legend_invariants:

- A compact legend block MUST be present (bottom-right default) containing:

- Agent colours across top (human (blue), ai (green), bot (orange), curlwget (black), badbot (red))

- Method rows down the side with the base shapes rendered in each agent colour

- Outcome overlay key: x=error, /=redirect, +=other, none=ok

determinism:

- All ordering, filtering, normalisation, and jitter rules MUST be deterministic (no random jitter).

- Any non-default parameters (Top-N, jitter magnitude, exclusions) MUST be stated in chart caption or run metadata.

The following describes page/title (name) normalisation

Ignore Pages

Ignore the following pages:

- DOI-master .* (deleted)

- Cognitive Memoisation Corpus Map (provisional)

- Cognitive Memoisation library (old)

Normalisation Overview

A list of page renames / redirects and mappings that appear within the mediawiki web-farm nginx access logs and rollups.

mediawiki pages

- all mediawiki URLs that contain _ can be mapped to page title with a space ( ) substituted for the underscore (_).

- URLs may be URL encoded as well.

- mediawiki rewrites page to /pub/<title>

- mediawiki uses /pub-dir/index.php? parameters to refer to <title>

- mediawiki: whether the title arrives as the resource part of a URL path or as a query parameter (title=<title>, edit=<title> etc.), the same normalised title means the same target page.

curator action

- dash rendering: an en dash (–), em dash, or similar UTF8 dash was replaced with the ASCII dash (-).

renamed pages - under curation

- First Self-Hosting Epistemic Capture Using Cognitive Memoisation (CM-2) (redirected from):

- Cognitive_Memoisation_(CM-2):_A_Human-Governed_Protocol_for_Knowledge_Governance_and_Transport_in_AI_Systems)

- Cognitive Memoisation (CM-2) for Governing Knowledge in Human-AI Collaboration (CM-2) was formerly:

- Let's_Build_a_Ship_-_Cognitive_Memoisation_for_Governing_Knowledge_in_Human_-_AI_Collabortion

- Cognitive_Memoisation_for_Governing_Knowledge_in_Human-AI_Collaboration

- Cognitive_Memoisation_for_Governing_Knowledge_in_Human–AI_Collaboration

- Cognitive_Memoisation_for_Governing_Knowledge_in_Human_-_AI_Collaboration

- Authority Inversion: A Structural Failure in Human-AI Systems was formerly:

- Authority_Inversion:_A_Structural_Failure_in_Human–AI_Systems

- Authority_Inversion:_A_Structural_Failure_in_Human-AI_Systems

- Journey: Human-Led Convergence in the Articulation of Cognitive Memoisation was formerly:

- Journey:_Human–Led_Convergence_in_the_Articulation_of_Cognitive_Memoisation (em dash)

- Cognitive Memoisation Corpus Map was formerly:

- Cognitive_Memoisation:_corpus_guide

- Cognitive_Memoisation:_corpus_guide'

- Context is Not Just a Window: Cognitive Memoisation as a Context Architecture for Human-AI Collaboration (CM-1) was formerly:

- Context_Is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human–AI_Collaboration

- Context_Is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human–AI_Collaborationn

- Context_is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human-AI_Collaboration

- Context_Is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human-AI_Collaborationn

- Context_is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human_-_AI_Collaboration

- Context_is_Not_Just_a_Window:_Cognitive_Memoisation_as_a_Context_Architecture_for_Human_–_AI_Collaboration

- Progress Without Memory: Cognitive Memoisation as a Knowledge-Engineering Pattern for Stateless LLM Interaction was formerly:

- Progress_Without_Memory:_Cognitive_Memoisation_as_a_Knowledge_Engineering_Pattern_for_Stateless_LLM_Interaction

- Progress_Without_Memory:_Cognitive_Memoisation_as_a_Knowledge-Engineering_Pattern_for_Stateless_LLM_Interaction

- Cognitive_Memoisation_and_LLMs:_A_Method_for_Exploratory_Modelling_Before_Formalisation'

- Cognitive_Memoisation_and_LLMs:_A_Method_for_Exploratory_Modelling_Before_Formalisation

- Cognitive_Memorsation:_Plain-Language_Summary_(For_Non-Technical_Readers)'

nginxlog sample code

The code used to process the nginx logs and summarise hit counts per page.

#!/usr/bin/env perl

use strict;

use warnings;

use Getopt::Long qw(GetOptions);

use File::Path qw(make_path);

use POSIX qw(strftime);

use IO::Uncompress::Gunzip qw(gunzip $GunzipError);

use URI::Escape qw(uri_unescape);

# ============================================================================

# lognginx

#

# Purpose:

# Produce per-page hit/unique-IP rollups plus mutually-exclusive BOT/AI/BADBOT

# counts aligned to nginx enforcement.

#

# Outputs (under --dest):

# 1) page_hits.tsv host<TAB>page_key<TAB>hits_total

# 2) page_unique_ips.tsv host<TAB>page_key<TAB>unique_ip_count

# 3) page_bot_ai_hits.tsv host<TAB>page_key<TAB>hits_bot<TAB>hits_ai<TAB>hits_badbot

# 4) page_unique_agent_ips.tsv host<TAB>page_key<TAB>uniq_human<TAB>uniq_bot<TAB>uniq_ai<TAB>uniq_badbot

#

# Classification (EXCLUSIVE, ordered):

# - BADBOT : HTTP status 308 (authoritative signal; nginx permanently redirects bad bots)

# - AI : UA matches bad_bot -> 0 patterns (from bots.conf)

# - BOT : UA matches bot -> 1 patterns (from bots.conf)

# - fallback BADBOT-UA: UA matches bad_bot -> nonzero patterns (only if status not 308; currently commented out in the loop below)

#

# Important:

# - This script intentionally does NOT attempt to emulate nginx 'map' first-match

# semantics for analytics. It uses the operational signal (308) + explicit lists.

# - External sort is used; choose --workdir on a large filesystem if needed.

# ============================================================================

# ----------------------------

# CLI

# ----------------------------

my @inputs = ();

my $dest = '';

my $workdir = '';

my $bots_conf = '/etc/nginx/bots.conf';

my $sort_mem = '50%';

my $sort_parallel = 2;

my $ignore_head = 0;

my $exclude_local_ip = 0;

my $verbose = 0;

my $help = 0;

# Canonicalisation controls (MediaWiki-friendly defaults)

my $mw_title_first = 1; # if title= exists for index.php, use it as page key

my $strip_query_nonmw = 1; # for non-MW URLs, drop query string entirely

my @ignore_params = qw(oldid diff action returnto returntoquery limit);

GetOptions(

'input=s@' => \@inputs,

'dest=s' => \$dest,

'workdir=s' => \$workdir,

'bots-conf=s' => \$bots_conf,

'sort-mem=s' => \$sort_mem,

'parallel=i' => \$sort_parallel,

'ignore-head!' => \$ignore_head,

'exclude-local-ip!' => \$exclude_local_ip,

'verbose!' => \$verbose,

'help|h!' => \$help,

'mw-title-first!' => \$mw_title_first,

'strip-query-nonmw!' => \$strip_query_nonmw,

) or die usage();

if ($help) { print usage(); exit 0; }

die usage() unless $dest && @inputs;

make_path($dest) unless -d $dest;

$workdir ||= "$dest/.work";

make_path($workdir) unless -d $workdir;

logmsg("Dest: $dest");

logmsg("Workdir: $workdir");

logmsg("Bots conf: $bots_conf");

logmsg("ignore-head: " . ($ignore_head ? "yes" : "no"));

logmsg("exclude-local-ip: " . ($exclude_local_ip ? "yes" : "no"));

logmsg("mw-title-first: " . ($mw_title_first ? "yes" : "no"));

logmsg("strip-query-nonmw: " . ($strip_query_nonmw ? "yes" : "no"));

my @files = expand_globs(@inputs);

die "No input files found.\n" unless @files;

logmsg("Inputs: " . scalar(@files) . " files");

# ----------------------------

# Load patterns from bots.conf

# ----------------------------

my $patterns = load_bots_conf($bots_conf);

my @ai_regexes = @{ $patterns->{bad_bot_zero} || [] };

my @badbot_regexes = @{ $patterns->{bad_bot_nonzero} || [] };

my @bot_regexes = @{ $patterns->{bot_one} || [] };

logmsg("Loaded patterns from bots.conf:");

logmsg(" AI regexes (bad_bot -> 0): " . scalar(@ai_regexes));

logmsg(" Bad-bot regexes (bad_bot -> URL/nonzero): " . scalar(@badbot_regexes));

logmsg(" Bot regexes (bot -> 1): " . scalar(@bot_regexes));

# ----------------------------

# Temp keyfiles

# ----------------------------

my $tmp_hits_keys = "$workdir/page_hits.keys"; # host \t page

my $tmp_unique_triples = "$workdir/page_unique.triples"; # host \t page \t ip

my $tmp_botai_keys = "$workdir/page_botai.keys"; # host \t page \t class

my $tmp_classuniq_triples = "$workdir/page_class_unique.triples"; # host page class ip

open(my $fh_hits, '>', $tmp_hits_keys) or die "Cannot write $tmp_hits_keys: $!\n";

open(my $fh_uniq, '>', $tmp_unique_triples) or die "Cannot write $tmp_unique_triples: $!\n";

open(my $fh_botai, '>', $tmp_botai_keys) or die "Cannot write $tmp_botai_keys: $!\n";

open(my $fh_classuniq, '>', $tmp_classuniq_triples) or die "Cannot write $tmp_classuniq_triples: $!\n";

my $lines = 0;

my $kept = 0;

for my $f (@files) {

logmsg("Reading $f");

my $fh = open_maybe_gz($f);

while (my $line = <$fh>) {

$lines++;

my ($ip, $method, $target, $status, $ua, $host) = parse_access_line($line);

next unless defined $ip && defined $host && defined $target;

# Exclude local/private/loopback traffic if requested

if ($exclude_local_ip && is_local_ip($ip)) {

next;

}

# Instrumentation suppression

if ($ignore_head && defined $method && $method eq 'HEAD') {

next;

}

my $page_key = canonical_page_key($target, \@ignore_params, $mw_title_first, $strip_query_nonmw);

next unless defined $page_key && length $page_key;

# 1) total hits per page

print $fh_hits $host, "\t", $page_key, "\n";

# 2) unique IP per page

print $fh_uniq $host, "\t", $page_key, "\t", $ip, "\n";

# 3) bot/ai/badbot hits per page (EXCLUSIVE, ordered: BADBOT > AI > BOT)

my $class;

if (defined $status && $status == 308) {

$class = 'BADBOT';

}

elsif (defined $ua && length $ua) {

if (ua_matches_any($ua, \@ai_regexes)) {

$class = 'AI';

}

elsif (ua_matches_any($ua, \@bot_regexes)) {

$class = 'BOT';

}

#elsif (ua_matches_any($ua, \@badbot_regexes)) {

# Fallback only; 308 remains the authoritative signal when present.

# $class = 'BADBOT';

#}

}

print $fh_botai $host, "\t", $page_key, "\t", $class, "\n" if defined $class;

my $uclass = defined($class) ? $class : "HUMAN";

print $fh_classuniq $host, "\t", $page_key, "\t", $uclass, "\t", $ip, "\n";

$kept++;

}

close $fh;

}

close $fh_hits;

close $fh_uniq;

close $fh_botai;

close $fh_classuniq;

logmsg("Lines read: $lines; lines kept: $kept");

# ----------------------------

# Aggregate outputs

# ----------------------------

my $out_hits = "$dest/page_hits.tsv";

my $out_unique = "$dest/page_unique_ips.tsv";

my $out_botai = "$dest/page_bot_ai_hits.tsv";

my $out_classuniq = "$dest/page_unique_agent_ips.tsv";

count_host_page_hits($tmp_hits_keys, $out_hits, $workdir, $sort_mem, $sort_parallel);

count_unique_ips_per_page($tmp_unique_triples, $out_unique, $workdir, $sort_mem, $sort_parallel);

count_botai_hits_per_page($tmp_botai_keys, $out_botai, $workdir, $sort_mem, $sort_parallel);

count_unique_ips_by_class_per_page($tmp_classuniq_triples, $out_classuniq, $workdir, $sort_mem, $sort_parallel);

logmsg("Wrote:");

logmsg(" $out_hits");

logmsg(" $out_unique");

logmsg(" $out_botai");

logmsg(" $out_classuniq");

# Cleanup

unlink $tmp_hits_keys, $tmp_unique_triples, $tmp_botai_keys, $tmp_classuniq_triples;

exit 0;

# ============================================================================

# USAGE

# ============================================================================

sub usage {

my $cmd = $0; $cmd =~ s!.*/!!;

return <<"USAGE";

Usage:

$cmd --input <glob_or_file> [--input ...] --dest <dir> [options]

Required:

--dest DIR

--input PATH_OR_GLOB (repeatable)

Core:

--workdir DIR Temp workspace (default: DEST/.work)

--bots-conf FILE bots.conf path (default: /etc/nginx/bots.conf)

--ignore-head Exclude HEAD requests

--exclude-local-ip Exclude local/private/loopback IPs

--verbose Verbose progress

--help, -h Help

Sort tuning:

--sort-mem SIZE sort -S SIZE (default: 50%)

--parallel N sort --parallel=N (default: 2)

Canonicalisation:

--mw-title-first / --no-mw-title-first

--strip-query-nonmw / --no-strip-query-nonmw

Outputs under DEST:

page_hits.tsv

page_unique_ips.tsv

page_bot_ai_hits.tsv

page_unique_agent_ips.tsv

USAGE

}

# ============================================================================

# LOGGING

# ============================================================================

sub logmsg {

return unless $verbose;

my $ts = strftime("%Y-%m-%d %H:%M:%S", localtime());

print STDERR "[$ts] $_[0]\n";

}

# ============================================================================

# IP CLASSIFICATION

# ============================================================================

sub is_local_ip {

my ($ip) = @_;

return 0 unless defined $ip && length $ip;

# IPv4 private + loopback

return 1 if $ip =~ /^10\./; # 10.0.0.0/8

return 1 if $ip =~ /^192\.168\./; # 192.168.0.0/16

return 1 if $ip =~ /^127\./; # 127.0.0.0/8

if ($ip =~ /^172\.(\d{1,3})\./) { # 172.16.0.0/12

my $o = $1;

return 1 if $o >= 16 && $o <= 31;

}

# IPv6 loopback

return 1 if lc($ip) eq '::1';

# IPv6 ULA fc00::/7 (fc00..fdff)

return 1 if $ip =~ /^[fF][cCdD][0-9a-fA-F]{0,2}:/;

# IPv6 link-local fe80::/10 (fe80..febf)

return 1 if $ip =~ /^[fF][eE](8|9|a|b|A|B)[0-9a-fA-F]:/;

return 0;

}

# ============================================================================

# FILE / IO

# ============================================================================

sub expand_globs {

my @in = @_;

my @out;

for my $p (@in) {

if ($p =~ /[*?\[]/) { push @out, glob($p); }

else { push @out, $p; }

}

@out = grep { -f $_ } @out;

return @out;

}

sub open_maybe_gz {

my ($path) = @_;

if ($path =~ /\.gz$/i) {

my $gz = IO::Uncompress::Gunzip->new($path)

or die "Gunzip failed for $path: $GunzipError\n";

return $gz;

}

open(my $fh, '<', $path) or die "Cannot open $path: $!\n";

return $fh;

}

# ============================================================================

# PARSING ACCESS LINES

# ============================================================================

sub parse_access_line {

my ($line) = @_;

# Client IP: first token

my $ip;

if ($line =~ /^(\d{1,3}(?:\.\d{1,3}){3})\b/) { $ip = $1; }

elsif ($line =~ /^([0-9a-fA-F:]+)\b/) { $ip = $1; }

else { return (undef, undef, undef, undef, undef, undef); }

# Request method/target + status

my ($method, $target, $status);

if ($line =~ /"(GET|POST|HEAD|PUT|DELETE|OPTIONS|PATCH)\s+([^"]+)\s+HTTP\/[^"]+"\s+(\d{3})\b/) {

$method = $1;

$target = $2;

$status = 0 + $3;

} else {

return ($ip, undef, undef, undef, undef, undef);

}

# Hostname: final quoted field (per nginx.conf custom_format ending with "$server_name")

my $host;

if ($line =~ /\s"([^"]+)"\s*$/) { $host = $1; }

else { return ($ip, $method, $target, $status, undef, undef); }

# UA: second-last quoted field

my @quoted = ($line =~ /"([^"]*)"/g);

my $ua = (@quoted >= 2) ? $quoted[-2] : undef;

return ($ip, $method, $target, $status, $ua, $host);

}

# ============================================================================

# PAGE CANONICALISATION

# ============================================================================

sub canonical_page_key {

my ($target, $ignore_params, $mw_title_first, $strip_query_nonmw) = @_;

my ($path, $query) = split(/\?/, $target, 2);

$path = uri_unescape($path // '');

$query = $query // '';

# MediaWiki: /index.php?...title=Foo

if ($mw_title_first && $path =~ m{/index\.php$} && length($query)) {

my %params = parse_query($query);

if (exists $params{title} && length($params{title})) {

my $title = uri_unescape($params{title});

$title =~ s/\+/ /g;

if (exists $params{edit} && $exclude_local_ip) {

# local

return "l:" . $title;

}

else {

# title (maps to path)

return "p:" . $title;

}

# return "m:" . $title;

}

}

# Non-MW: drop query by default

if ($strip_query_nonmw) {

# path

return "p:" . $path;

}

# Else: retain query, removing noisy params

my %params = parse_query($query);

for my $p (@$ignore_params) { delete $params{$p}; }

my $canon_q = canonical_query_string(\%params);

return $canon_q ? ("pathq:" . $path . "?" . $canon_q) : ("path:" . $path);

}

sub parse_query {

my ($q) = @_;

my %p;

return %p unless defined $q && length($q);

for my $kv (split(/&/, $q)) {

next unless length($kv);

my ($k, $v) = split(/=/, $kv, 2);

$k = uri_unescape($k // ''); $v = uri_unescape($v // '');

$k =~ s/\+/ /g; $v =~ s/\+/ /g;

next unless length($k);

$p{$k} = $v;

}

return %p;

}

sub canonical_query_string {

my ($href) = @_;

my @keys = sort keys %$href;

return '' unless @keys;

my @pairs;

for my $k (@keys) { push @pairs, $k . "=" . $href->{$k}; }

return join("&", @pairs);

}

# ============================================================================

# BOT / AI HELPERS

# ============================================================================

sub ua_matches_any {

my ($ua, $regexes) = @_;

for my $re (@$regexes) {

return 1 if $ua =~ $re;

}

return 0;

}

# ============================================================================

# bots.conf PARSING

# ============================================================================

sub load_bots_conf {

my ($path) = @_;

open(my $fh, '<', $path) or die "Cannot open bots.conf ($path): $!\n";

my $in_bad_bot = 0;

my $in_bot = 0;

my @bad_zero;

my @bad_nonzero;

my @bot_one;

while (my $line = <$fh>) {

chomp $line;

$line =~ s/#.*$//; # comments

$line =~ s/^\s+|\s+$//g; # trim

next unless length $line;

if ($line =~ /^map\s+\$http_user_agent\s+\$bad_bot\s*\{\s*$/) {

$in_bad_bot = 1; $in_bot = 0; next;

}

if ($line =~ /^map\s+\$http_user_agent\s+\$bot\s*\{\s*$/) {

$in_bot = 1; $in_bad_bot = 0; next;

}

if ($line =~ /^\}\s*$/) {

$in_bad_bot = 0; $in_bot = 0; next;

}

if ($in_bad_bot) {

# ~*PATTERN VALUE;

if ($line =~ /^"?(~\*|~)\s*(.+?)"?\s+(.+?);$/) {

my ($op, $pat, $val) = ($1, $2, $3);

$pat =~ s/^\s+|\s+$//g;

$val =~ s/^\s+|\s+$//g;

my $re = compile_nginx_map_regex($op, $pat);

next unless defined $re;

if ($val eq '0') { push @bad_zero, $re; }

else { push @bad_nonzero, $re; }

}

next;

}

if ($in_bot) {

if ($line =~ /^"?(~\*|~)\s*(.+?)"?\s+(.+?);$/) {

my ($op, $pat, $val) = ($1, $2, $3);

$pat =~ s/^\s+|\s+$//g;

$val =~ s/^\s+|\s+$//g;

next unless $val eq '1';

my $re = compile_nginx_map_regex($op, $pat);

push @bot_one, $re if defined $re;

}

next;

}

}

close $fh;

return {

bad_bot_zero => \@bad_zero,

bad_bot_nonzero => \@bad_nonzero,

bot_one => \@bot_one,

};

}

sub compile_nginx_map_regex {

my ($op, $pat) = @_;

return ($op eq '~*') ? eval { qr/$pat/i } : eval { qr/$pat/ };

}

# ============================================================================

# SORT / AGGREGATION

# ============================================================================

sub sort_cmd_base {

my ($workdir, $sort_mem, $sort_parallel) = @_;

my @cmd = ('sort', '-S', $sort_mem, '-T', $workdir);

push @cmd, "--parallel=$sort_parallel" if $sort_parallel && $sort_parallel > 0;

return @cmd;

}

sub run_sort_to_file {

my ($cmd_aref, $outfile) = @_;

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

open(my $pipe, '-|', @$cmd_aref) or die "Cannot run sort: $!\n";

while (my $line = <$pipe>) { print $out $line; }

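# close() on a pipe returns false when the child exits non-zero, so a failed sort is not silently ignored.

close($pipe) or die "sort failed: " . ($! || "exit status " . ($? >> 8)) . "\n";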

close $out;

}

sub count_host_page_hits {

my ($infile, $outfile, $workdir, $sort_mem, $sort_parallel) = @_;

my $sorted = "$workdir/page_hits.sorted";

my @cmd = (sort_cmd_base($workdir, $sort_mem, $sort_parallel), '-t', "\t", '-k1,1', '-k2,2', $infile);

run_sort_to_file(\@cmd, $sorted);

open(my $in, '<', $sorted) or die "Cannot read $sorted: $!\n";

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

my ($prev_host, $prev_page, $count) = (undef, undef, 0);

while (my $line = <$in>) {

chomp $line;

my ($host, $page) = split(/\t/, $line, 3);

next unless defined $host && defined $page;

if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {

print $out $prev_host, "\t", $prev_page, "\t", $count, "\n";

$count = 0;

}

$prev_host = $host; $prev_page = $page; $count++;

}

print $out $prev_host, "\t", $prev_page, "\t", $count, "\n" if defined $prev_host;

close $in; close $out;

unlink $sorted;

}

sub count_unique_ips_per_page {

my ($infile, $outfile, $workdir, $sort_mem, $sort_parallel) = @_;

my $sorted = "$workdir/page_unique.sorted";

my @cmd = (sort_cmd_base($workdir, $sort_mem, $sort_parallel), '-t', "\t", '-k1,1', '-k2,2', '-k3,3', $infile);

run_sort_to_file(\@cmd, $sorted);

open(my $in, '<', $sorted) or die "Cannot read $sorted: $!\n";

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

my ($prev_host, $prev_page, $prev_ip) = (undef, undef, undef);

my $uniq = 0;

while (my $line = <$in>) {

chomp $line;

my ($host, $page, $ip) = split(/\t/, $line, 4);

next unless defined $host && defined $page && defined $ip;

if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {

print $out $prev_host, "\t", $prev_page, "\t", $uniq, "\n";

$uniq = 0; $prev_ip = undef;

}

if (!defined $prev_ip || $ip ne $prev_ip) {

$uniq++; $prev_ip = $ip;

}

$prev_host = $host; $prev_page = $page;

}

print $out $prev_host, "\t", $prev_page, "\t", $uniq, "\n" if defined $prev_host;

close $in; close $out;

unlink $sorted;

}

# 4) count unique IPs per page by class and pivot to columns:

# host<TAB>page<TAB>uniq_human<TAB>uniq_bot<TAB>uniq_ai<TAB>uniq_badbot

sub count_unique_ips_by_class_per_page {

my ($infile, $outfile, $workdir, $sort_mem, $sort_parallel) = @_;

my $sorted = "$workdir/page_class_unique.sorted";

my @cmd = (sort_cmd_base($workdir, $sort_mem, $sort_parallel),

'-t', "\t", '-k1,1', '-k2,2', '-k3,3', '-k4,4', $infile);

run_sort_to_file(\@cmd, $sorted);

open(my $in, '<', $sorted) or die "Cannot read $sorted: $!\n";

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

my ($prev_host, $prev_page, $prev_class, $prev_ip) = (undef, undef, undef, undef);

my %uniq = (HUMAN => 0, BOT => 0, AI => 0, BADBOT => 0);

while (my $line = <$in>) {

chomp $line;

my ($host, $page, $class, $ip) = split(/\t/, $line, 5);

next unless defined $host && defined $page && defined $class && defined $ip;

# Page boundary => flush

if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {

print $out $prev_host, "\t", $prev_page, "\t",

($uniq{HUMAN}||0), "\t", ($uniq{BOT}||0), "\t", ($uniq{AI}||0), "\t", ($uniq{BADBOT}||0), "\n";

%uniq = (HUMAN => 0, BOT => 0, AI => 0, BADBOT => 0);

($prev_class, $prev_ip) = (undef, undef);

}

# Within a page, we count unique IP per class. Since sorted by class then ip, we can do change detection.

if (!defined $prev_class || $class ne $prev_class || !defined $prev_ip || $ip ne $prev_ip) {

$uniq{$class}++ if exists $uniq{$class};

$prev_class = $class;

$prev_ip = $ip;

}

$prev_host = $host;

$prev_page = $page;

}

if (defined $prev_host) {

print $out $prev_host, "\t", $prev_page, "\t",

($uniq{HUMAN}||0), "\t", ($uniq{BOT}||0), "\t", ($uniq{AI}||0), "\t", ($uniq{BADBOT}||0), "\n";

}

close $in;

close $out;

unlink $sorted;

}

sub count_botai_hits_per_page {

my ($infile, $outfile, $workdir, $sort_mem, $sort_parallel) = @_;

my $sorted = "$workdir/page_botai.sorted";

my @cmd = (sort_cmd_base($workdir, $sort_mem, $sort_parallel), '-t', "\t", '-k1,1', '-k2,2', '-k3,3', $infile);

run_sort_to_file(\@cmd, $sorted);

open(my $in, '<', $sorted) or die "Cannot read $sorted: $!\n";

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

my ($prev_host, $prev_page) = (undef, undef);

my %c = (BOT => 0, AI => 0, BADBOT => 0);

while (my $line = <$in>) {

chomp $line;

my ($host, $page, $class) = split(/\t/, $line, 4);

next unless defined $host && defined $page && defined $class;

if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {

print $out $prev_host, "\t", $prev_page, "\t", $c{BOT}, "\t", $c{AI}, "\t", $c{BADBOT}, "\n";

%c = (BOT => 0, AI => 0, BADBOT => 0);

}

$c{$class}++ if exists $c{$class};

$prev_host = $host; $prev_page = $page;

}

print $out $prev_host, "\t", $prev_page, "\t", $c{BOT}, "\t", $c{AI}, "\t", $c{BADBOT}, "\n" if defined $prev_host;

close $in; close $out;

unlink $sorted;

}
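
The counting subs above all share one technique: sort the rows externally so that equal keys are adjacent, then stream through them once and detect key changes. The following is a minimal standalone sketch of that idea for unique IPs per page, not an excerpt of the script. It assumes in-memory rows instead of a TSV file, sorts them in Perl rather than with sort(1), and uses made-up hosts, page keys and documentation-range IPs; the sub name unique_ips_per_page is hypothetical.

```perl
#!/usr/bin/env perl
use strict;
use warnings;

# Count distinct IPs per (host, page) from rows already sorted by
# host, page, ip. Rows are array refs here; the script reads TSV lines.
sub unique_ips_per_page {
    my (@rows) = @_;
    my @out;
    my ($prev_host, $prev_page, $prev_ip);
    my $uniq = 0;
    for my $r (@rows) {
        my ($host, $page, $ip) = @$r;
        # Group boundary: flush the finished group and reset state.
        if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {
            push @out, [$prev_host, $prev_page, $uniq];
            $uniq = 0;
            undef $prev_ip;
        }
        # Sorted input keeps equal IPs adjacent, so a change means a new IP.
        if (!defined $prev_ip || $ip ne $prev_ip) {
            $uniq++;
            $prev_ip = $ip;
        }
        ($prev_host, $prev_page) = ($host, $page);
    }
    # Flush the final group, if any rows were seen.
    push @out, [$prev_host, $prev_page, $uniq] if defined $prev_host;
    return @out;
}

# Hypothetical sample rows, sorted here in Perl for the demo.
my @rows = sort {
    $a->[0] cmp $b->[0] || $a->[1] cmp $b->[1] || $a->[2] cmp $b->[2]
} (
    ['example.org', 'p:/index.html', '203.0.113.7'],
    ['example.org', 'p:/index.html', '198.51.100.2'],
    ['example.org', 'p:/index.html', '203.0.113.7'],
    ['example.org', 'p:/robots.txt', '203.0.113.7'],
);

print join("\t", @$_), "\n" for unique_ips_per_page(@rows);
```

Because only the previous key is held in memory, the approach scales with the external sort rather than with the number of distinct pages or IPs.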

nginx rollups code

- This code sub-totals page hits categorised as human, AI, bot, or bad-bot traffic. IPs are not deduplicated: every hit is counted.
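
As a rough illustration of this accumulation, the sketch below counts every hit into a time bucket and a metric name, following the bucket formula in invariant 3 and the class, method and status naming in invariant 6 of the script. It is a standalone example under stated assumptions, not an excerpt: the event tuples and epoch values are made up, and the helper names bucket_start and metric_name are hypothetical.

```perl
#!/usr/bin/env perl
use strict;
use warnings;

# Start of the bucket containing $epoch: floor(epoch / period) * period.
# int() truncates toward zero, which equals floor for non-negative epochs.
sub bucket_start {
    my ($epoch, $period_seconds) = @_;
    return int($epoch / $period_seconds) * $period_seconds;
}

# Counter name: class_method_status, except the single badbot_308 counter.
sub metric_name {
    my ($class, $method, $status) = @_;
    return 'badbot_308' if $class eq 'badbot';
    return join('_', $class, $method, $status);
}

# Hypothetical events: [epoch, class, method bucket, status bucket].
my @events = (
    [ 7205,  'human',  'get', 'ok'    ],
    [ 7300,  'human',  'get', 'ok'    ],   # repeat hits are all counted
    [ 10900, 'ai',     'get', 'ok'    ],
    [ 10950, 'badbot', 'get', 'redir' ],
);

my %hits;
for my $e (@events) {
    my ($epoch, @key) = @$e;
    $hits{ bucket_start($epoch, 3600) }{ metric_name(@key) }++;
}

# Print one line per bucket and metric, oldest bucket first.
for my $start (sort { $a <=> $b } keys %hits) {
    for my $metric (sort keys %{ $hits{$start} }) {
        print join("\t", $start, $metric, $hits{$start}{$metric}), "\n";
    }
}
```

With a one-hour period the first two events share the bucket starting at 7200 and the last two share the bucket starting at 10800.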

#!/usr/bin/env perl

use strict;

use warnings;

use IO::Uncompress::Gunzip qw(gunzip $GunzipError);

use Time::Piece;

use Getopt::Long;

use File::Path qw(make_path);

use File::Spec;

use URI::Escape qw(uri_unescape);

#BEGIN_MWDUMP

#title: CM-bucket-rollup invariants

#format: MWDUMP

#invariants:

# 0. display with graph projection: "mw-page GET/PUT/POST/HEAD et. al. agent projection (invariants)"

# 1. server_name is first-class; never dropped; emitted in output schema and used for optional filtering.

# 2. input globs are expanded then processed in ascending mtime order (oldest -> newest).

# 3. time bucketing is purely mathematical: bucket_start = floor(epoch/period_seconds)*period_seconds.

# 4. badbot is definitive and detected ONLY by HTTP status == 308; no UA regex for badbot.

# 5. AI and bot are derived from /etc/nginx/bots.conf:

# - only patterns mapping to 0 are "wanted"

# - between '# good bots' and '# AI bots' => bot

# - between '# AI bots' and '# unwanted bots' => AI_bot

# - unwanted-bots section ignored for analytics classification

# 6. output TSV schema is fixed (total/host/path last; totals are derivable):

# curlwget|ai|bot|human × (get|head|post|put|other) × (ok|redir|client_err|other)

# badbot_308

# total_hits server_name path

# 7. Path identity is normalised so the same resource collates across:

# absolute URLs, query strings (incl action/edit), MediaWiki title=, percent-encoding, and trailing slashes.

# 8. --exclude-local excludes (does not count) local IP hits and POST+edit hits in the defined window, before bucketing.

# 9. web-farm safe: aggregation keys include bucket_start + server_name + path; no cross-vhost contamination.

# 10. bots.conf parsing must be auditable: when --verbose, report "good AI agent" and "good bot" patterns to STDERR.

# 11. method taxonomy is uniform for all agent categories: GET, HEAD, POST, PUT, OTHER (everything else).

#END_MWDUMP

my $cmd = $0;

# -------- options --------

my ($EXCLUDE_LOCAL, $VERBOSE, $HELP, $OUTDIR, $PERIOD, $SERVER) = (0,0,0,".","01:00","");

GetOptions(

"exclude-local!" => \$EXCLUDE_LOCAL,

"verbose!" => \$VERBOSE,

"help!" => \$HELP,

"outdir=s" => \$OUTDIR,

"period=s" => \$PERIOD,

"server=s" => \$SERVER, # optional filter; empty means all

) or usage();

usage() if $HELP;

sub usage {

print <<"USAGE";

Usage:

$cmd [options] /var/log/nginx/access.log*

Options:

--exclude-local Exclude local IPs and POST edit traffic

--outdir DIR Directory to write TSV outputs

--period HH:MM Period size (duration), default 01:00

--server NAME Only count hits where server_name == NAME (web-farm filter)

--verbose Echo processing information + report wanted agents from bots.conf

--help Show this help and exit

Output:

One TSV per time bucket, named:

YYYY_MM_DDThh_mm-to-YYYY_MM_DDThh_mm.tsv

Columns (total/server/path last; totals derivable):

{curlwget,ai,bot,human}_{get,head,post,put,other}_{ok,redir,client_err,other}

badbot_308

total_hits server_name path

USAGE

exit 0;

}

make_path($OUTDIR) unless -d $OUTDIR;

# -------- period math (no validation, per instruction) --------

my ($PH, $PM) = split(/:/, $PERIOD, 2);

my $PERIOD_SECONDS = ($PH * 3600) + ($PM * 60);

# -------- edit exclusion window --------

my $START_EDIT = Time::Piece->strptime("12/Dec/2025:00:00:00", "%d/%b/%Y:%H:%M:%S");

my $END_EDIT = Time::Piece->strptime("01/Jan/2026:23:59:59", "%d/%b/%Y:%H:%M:%S");

# -------- parse bots.conf (wanted patterns only) --------

my $BOTS_CONF = "/etc/nginx/bots.conf";

my (@AI_REGEX, @BOT_REGEX);

my (@AI_RAW, @BOT_RAW);

open my $bc, "<", $BOTS_CONF or die "$cmd: cannot open $BOTS_CONF: $!";

my $mode = "";

while (<$bc>) {

if (/^\s*#\s*good bots/i) { $mode = "GOOD"; next; }

if (/^\s*#\s*AI bots/i) { $mode = "AI"; next; }

if (/^\s*#\s*unwanted bots/i) { $mode = ""; next; }

next unless $mode;

next unless /~\*(.+?)"\s+0;/;

my $pat = $1;

if ($mode eq "AI") {

push @AI_RAW, $pat;

push @AI_REGEX, qr/$pat/i;

} elsif ($mode eq "GOOD") {

push @BOT_RAW, $pat;

push @BOT_REGEX, qr/$pat/i;

}

}

close $bc;

if ($VERBOSE) {

for my $p (@AI_RAW) { print STDERR "[agents] good AI agent: ~*$p\n"; }

for my $p (@BOT_RAW) { print STDERR "[agents] good bot: ~*$p\n"; }

}

# -------- helpers --------

sub is_local_ip {

my ($ip) = @_;

return 1 if $ip eq "127.0.0.1" || $ip eq "::1";

return 1 if $ip =~ /^10\./;

return 1 if $ip =~ /^192\.168\./;

return 0;

}

sub agent_class {

my ($status, $ua) = @_;

return "badbot" if $status == 308;

return "curlwget" if defined($ua) && $ua =~ /\b(?:curl|wget)\b/i;

for (@AI_REGEX) { return "ai" if $ua =~ $_ }

for (@BOT_REGEX) { return "bot" if $ua =~ $_ }

return "human";

}

sub method_bucket {

my ($m) = @_;

return "head" if $m eq "HEAD";

return "get" if $m eq "GET";

return "post" if $m eq "POST";

return "put" if $m eq "PUT";

return "other";

}

sub status_bucket {

my ($status) = @_;

return "other" unless defined($status) && $status =~ /^\d+$/;

return "ok" if $status == 200 || $status == 304;

return "redir" if $status >= 300 && $status <= 399; # 308 handled earlier as badbot

return "client_err" if $status >= 400 && $status <= 499;

return "other";

}

sub normalise_path {

my ($raw) = @_;

my $p = $raw;

$p =~ s{^https?://[^/]+}{}i; # strip scheme+host if absolute URL

$p = "/" if !defined($p) || $p eq "";

# Split once so we can canonicalise MediaWiki title= before dropping the query.

my ($base, $qs) = split(/\?/, $p, 2);

$qs //= "";

# Rewrite */index.php?title=X* => */X (preserve directory prefix)

if ($base =~ m{/index\.php$}i && $qs =~ /(?:^|&)title=([^&]+)/i) {

my $title = uri_unescape($1);

(my $prefix = $base) =~ s{/index\.php$}{}i;

$base = $prefix . "/" . $title;

}

# Drop query/fragment entirely (normalise out action=edit etc.)

$p = $base;

$p =~ s/#.*$//;

# Percent-decode ONCE

$p = uri_unescape($p);

# Collapse multiple slashes

$p =~ s{//+}{/}g;

# Trim trailing slash except for root

$p =~ s{/$}{} if length($p) > 1;

return $p;

}

sub fmt_ts {

my ($epoch) = @_;

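# The epoch comes from strptime(), which treats the log's wall-clock time as UTC; gmtime() round-trips it unchanged.

my $tp = gmtime($epoch);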

return sprintf("%04d_%02d_%02dT%02d_%02d",

$tp->year, $tp->mon, $tp->mday, $tp->hour, $tp->min);

}

# -------- log regex (captures server_name as final quoted field) --------

my $LOG_RE = qr{

^(\S+)\s+\S+\s+\S+\s+\[([^\]]+)\]\s+

"(GET|POST|HEAD|[A-Z]+)\s+(\S+)[^"]*"\s+

(\d+)\s+\d+.*?"[^"]*"\s+"([^"]*)"\s+"([^"]+)"\s*$

}x;

# -------- collect files (glob, then mtime ascending) --------

@ARGV or usage();

my @files;

for my $arg (@ARGV) { push @files, glob($arg) } # $arg, not $a: $a/$b are reserved for sort blocks

@files = sort { (stat($a))[9] <=> (stat($b))[9] } @files;

# -------- bucketed stats --------

# %BUCKETS{bucket_start}{end} = bucket_end

# %BUCKETS{bucket_start}{stats}{server}{page}{metric} = count

my %BUCKETS;

for my $file (@files) {

print STDERR "$cmd: processing $file\n" if $VERBOSE;

my $fh;

if ($file =~ /\.gz$/) {

$fh = IO::Uncompress::Gunzip->new($file)

or die "$cmd: gunzip $file: $GunzipError";

} else {

open($fh, "<", $file) or die "$cmd: open $file: $!";

}

while (<$fh>) {

next unless /$LOG_RE/;

my ($ip,$ts,$method,$path,$status,$ua,$server_name) = ($1,$2,$3,$4,$5,$6,$7);

next if ($SERVER ne "" && $server_name ne $SERVER);

my $clean = $ts;

$clean =~ s/\s+[+-]\d{4}$//;

my $tp = Time::Piece->strptime($clean, "%d/%b/%Y:%H:%M:%S");

my $epoch = $tp->epoch;

if ($EXCLUDE_LOCAL) {

next if is_local_ip($ip);

if ($method eq "POST" && $path =~ /edit/i) {

next if $tp >= $START_EDIT && $tp <= $END_EDIT;

}

}

my $bucket_start = int($epoch / $PERIOD_SECONDS) * $PERIOD_SECONDS;

my $bucket_end = $bucket_start + $PERIOD_SECONDS;

my $npath = normalise_path($path);

my $aclass = agent_class($status, $ua);

my $metric;

if ($aclass eq "badbot") {

$metric = "badbot_308";

} else {

my $mb = method_bucket($method);

my $sb = status_bucket($status);

$metric = join("_", $aclass, $mb, $sb);

}

$BUCKETS{$bucket_start}{end} = $bucket_end;

$BUCKETS{$bucket_start}{stats}{$server_name}{$npath}{$metric}++;

}

close $fh;

}

# -------- write outputs --------

my @ACTORS = qw(curlwget ai bot human);

my @METHODS = qw(get head post put other);

my @SB = qw(ok redir client_err other);

my @COLS;

for my $actor (@ACTORS) {

for my $m (@METHODS) {

for my $s (@SB) {

push @COLS, join("_", $actor, $m, $s);

}

}

}

push @COLS, "badbot_308";

push @COLS, "total_hits";

push @COLS, "server_name";

push @COLS, "path";

for my $bstart (sort { $a <=> $b } keys %BUCKETS) {

my $bend = $BUCKETS{$bstart}{end};

my $out = File::Spec->catfile(

$OUTDIR,

fmt_ts($bstart) . "-to-" . fmt_ts($bend) . ".tsv"

);

print STDERR "$cmd: writing $out\n" if $VERBOSE;

open my $outf, ">", $out or die "$cmd: write $out: $!";

print $outf join("\t", @COLS), "\n";

my $stats = $BUCKETS{$bstart}{stats};

for my $srv (sort keys %$stats) {

for my $p (sort {

# sort by derived total across all counters (excluding total/host/path)

my $sa = 0; my $sb = 0;

for my $c (@COLS) {

next if $c eq 'total_hits' || $c eq 'server_name' || $c eq 'path';

$sa += ($stats->{$srv}{$a}{$c} // 0);

$sb += ($stats->{$srv}{$b}{$c} // 0);

}

$sb <=> $sa

} keys %{ $stats->{$srv} }

) {

my @vals;

# emit counters

my $total = 0;

for my $c (@COLS) {

if ($c eq 'total_hits') {

push @vals, 0; # placeholder; set after computing total

next;

}

if ($c eq 'server_name') {

push @vals, $srv;

next;

}

if ($c eq 'path') {

push @vals, $p;

next;

}

my $v = $stats->{$srv}{$p}{$c} // 0;

$total += $v;

push @vals, $v;

}

# patch in total_hits (it is immediately after badbot_308)

for (my $i = 0; $i < @COLS; $i++) {

if ($COLS[$i] eq 'total_hits') {

$vals[$i] = $total;

last;

}

}

print $outf join("\t", @vals), "\n";

}

}