Synology DS1515+ repair

introduction

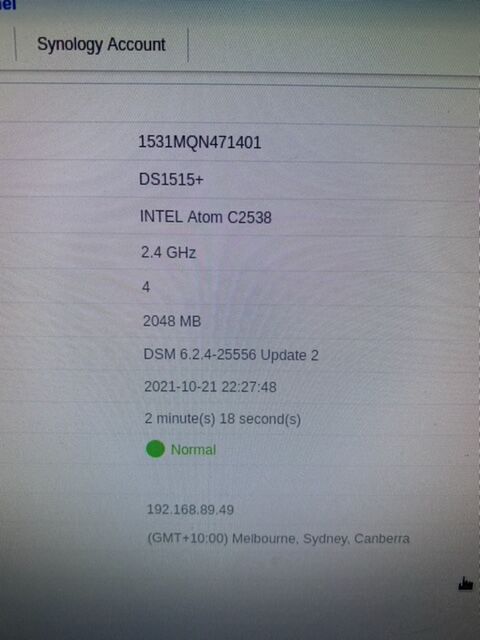

I use(d) a Synology DS1515+ with approximately 15 TB of storage running RAID 6.

My unit was suffering from intermittent restarts. In the end I enabled Wake-on-LAN (WOL) and set a scheduled shutdown, from which it never recovered: it was bricked.

My Synology DS1515+ first failed a few years back, while it was still under warranty. It was sent away for repair and was out of action for nearly 3 months, during which I had to perform manual backups to external USB drives and, of course, was unable to use Cloud Station to synchronize my laptops.

When the DS1515+ came back it seemed fine, but while it was away I had uncovered some concerning reports of other people experiencing failures:

- failure to start, and

- an Atom silicon fault that could strike at any moment, and

- unexpected shutdowns

The first and last of these sounded like my system's symptoms.

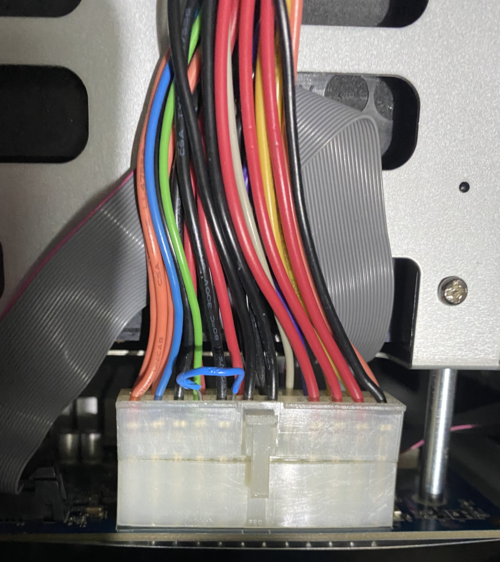

At the time I had not heard of the paper-clip test and never thought to look at the power supply, so I did not discover that it was an ATX PSU. In something of a panic, I was concerned that it was suffering from the Intel Atom C2000 silicon fault affecting the multiplexed LPC clock output, and I wanted a replacement under warranty. So I simply sent the unit in under an RMA, expecting to receive a replacement from stock within a week or so, not what turned out to be nearly 3 months.

The returned unit was obviously a repaired unit and not a new one, but it was working. I got my data back and, because of reported problems with them, I replaced my two SSD cache drives, purchased some more hard disks and converted the unit to RAID 6, which can recover from two simultaneous disk failures. I needed resilience over speed because this system is used to back up my development work. Although it is my primary backup, I also have other incremental and manual backups spread across separate media and machines. Even so, the extended outage affected business continuity and cost me time.

findings

The unit contains an Intel Atom C2538 (stepping B0) with failed silicon; see Table 1, erratum AVR54, in the Intel Atom Processor C2000 Product Family Specification Errata.

The returned unit contained two mods:

- a 100 ohm resistor mod to "fix" the Intel Atom C2000 LPC_CLKOUT fault, which is obviously due to a failing silicon driver on the chip die. (Quite a few faults were identified in this chip after it went into production, and Intel subsequently published the Intel Atom Processor C2000 Product Family Specification Errata; too bad for the manufacturers that were first to use this chip.)

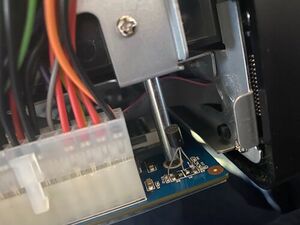

- and, as I discovered only recently after removing the motherboard, Q1 had been removed and Q2 had been tinkered with (re-soldered). (Since publishing this article I have found the avforums thread describing the same repair, which includes a photograph showing that a newer board doesn't have Q1 fitted.)

The repaired unit ran past the warranty period, and then exactly the same symptoms returned, with intermittent unexpected shutdowns being reported. How could that be, since it had been repaired/patched and is supplied by a rock-solid UPS?

Every time it suffered an unexpected shutdown, it scrubbed the drives on restart, which in my case runs for many hours. Often the scrub had not completed before the next outage, so it's important to have the unit supplied by a UPS.

Again I thought I would enable Wake-on-LAN (WOL) and schedule a shutdown at 0455 to avoid the problem period, which seemed to span the two hours or so before I opened my office. The idea was that I would manually restart it in the morning when I opened the office and could keep an eye on it. I thought I was being smart setting up WOL with the scheduled shutdown, but the next morning my DS1515+ was bricked. It would no longer turn on from WOL or the power switch.
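For readers unfamiliar with WOL: a wake-up is normally triggered by a "magic packet", six 0xFF bytes followed by the target's MAC address repeated 16 times, usually sent as a UDP broadcast (port 9 is a common convention). The sketch below only illustrates that standard packet format; the MAC address is hypothetical, and nothing here is specific to Synology or would have revived a unit whose PSU could no longer be switched on.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Return the 102-byte WOL magic packet for a MAC such as 'aa:bb:cc:dd:ee:ff'."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"invalid MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)
    # Synchronisation stream of six 0xFF bytes, then the MAC repeated 16 times.
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP on the local network."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))


if __name__ == "__main__":
    # Hypothetical MAC address; substitute the NAS LAN port's own MAC.
    pkt = build_magic_packet("00:11:32:aa:bb:cc")
    print(len(pkt))  # 102
```

Building the packet is separated from sending it so the format can be checked without touching the network.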

Before the complete failure, the log entries were fairly sparse, except that the unit kept reporting unexpected shutdowns and suggesting that I consider connecting it to a UPS. I do run my unit 24x7, supplied by a massive Eaton 3 kVA sine-wave, power-factor-corrected UPS with external battery banks. A battery capacity test I conducted after replacing all the batteries a few months ago showed that the UPS provides more than 9 hours of standby to my network equipment, the NAS, and my critical servers. I know the power supplied to the NAS is both reliable and conditioned. Standby power lasts so long that I have not bothered wiring the UPS serial communications to the NAS, which may explain why it nagged me every time I managed to log in on HTTPS port 5001. The shutdowns were caused by its very own internal PSU switching off unexpectedly, not by my power.

So I know the NAS has its own internal power supply design problem.

The first time I tried the paper-clip trick it did not work, so I set the unit aside for a few days while I ordered a spare PSU. The new ATX power supply unit (PSU) arrived from the Synology agent in record time a few days later (on Friday before lunch). In the interim I researched other people's experiences across the forums and blogs using Dr Google. That was when I found that lots of people had bricked units: some were applying the paper-clip trick and going off in all sorts of directions, a fair few threw their units out, and many said they were leaving the brand due to the "lack" of spares and the support policies. One person even built their own NAS as a result, the sort of thing I would do if I had a bit more time to spare!

Was I disappointed that the new PSU did not fix the problem? Yes, I was, but now I at least have a spare power supply unit that can be put into service quickly, and I can always turn it into a bench supply for low-power motherboards. If the new PSU had worked, I would not have pulled the motherboard out to go back to basics and trace the fault. So the new PSU did actually lead to the repair of my unit, and was money well spent compared with the cost of my time.

the fault

The fact that the Synology DS1515+ failed again a year or so after its repair made me sceptical, so I pulled the motherboard out to inspect it.

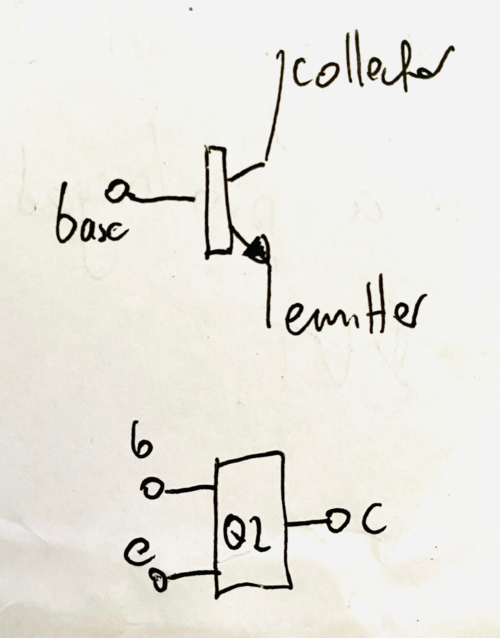

I traced the ATX PS-ON line to Q2 with one of my multimeters.

It turns out that Q2, which I had some trouble identifying from its SMD code marking, appears to be an NPN bipolar transistor with a grounded emitter and its collector connected to the ATX PS-ON line. Q2 was now failing to clamp PS-ON to ground, and when I did manage to get it working after re-soldering what looked like a dry joint, it would only run for a little over 2 minutes. I measured 0.6 volts on one pin of Q2 while it was working, which told me it was an NPN transistor (a typical silicon base-emitter drop) and not an N-channel MOSFET.

Since Q2 could only pull PS-ON partway towards ground (the collector showed 1.8 volts), I measured the base voltage: not much more than 0.6 volts, which in my opinion is barely adequate to switch the PN junction on, and certainly not enough to operate the transistor in its saturated (switching) region. Something was wrong with either the base drive or the transistor.
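One way to read the 1.8 volt collector measurement is against the PS_ON# input levels commonly quoted from the ATX12V power supply design guide: the PSU should switch on below about 0.8 V and stay off above about 2.0 V. Those thresholds are my assumption from that guide, not figures from Synology or from this PSU's datasheet. The hypothetical helper below classifies a measured PS-ON voltage; 1.8 V falls in the band where neither state is guaranteed, which is at least consistent with the intermittent shutdowns described above.

```python
# Assumed PS_ON# thresholds, as commonly quoted from the ATX12V design guide.
PS_ON_LOW_MAX_V = 0.8   # at or below this, the PSU should switch on
PS_ON_HIGH_MIN_V = 2.0  # at or above this, the PSU should stay off


def classify_ps_on(voltage: float) -> str:
    """Classify a measured PS-ON (green wire) voltage relative to ground."""
    if voltage <= PS_ON_LOW_MAX_V:
        return "on"         # clamped low: PSU is asked to run
    if voltage >= PS_ON_HIGH_MIN_V:
        return "off"        # pulled high: PSU is asked to stay in standby
    return "undefined"      # neither level is guaranteed by the assumed thresholds


for v in (0.2, 1.8, 5.0):
    print(f"{v:.1f} V -> {classify_ps_on(v)}")
```

With a healthy, saturated switching transistor the collector should sit well inside the "on" band rather than hovering between the two thresholds.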

What did I have to lose? The unit was out of warranty and I needed it to work. So I decided to remove Q2 and replace it with a regular bipolar transistor from my spare-parts box (of which I have many). I tested a few of my brand-new spares until I found one with a current gain (hFE) of 860 (I would have preferred a gain of 1000) and soldered it in place of Q2. My theory was that the old Q2 was not being turned on hard enough to clamp PS-ON to ground.

Since Q1 was in parallel with Q2, and Q1 had already been removed by someone else, I used the base pad of Q2 and the collector and emitter pads of Q1 to obtain a wider spread between the transistor legs. I didn't use an SMD transistor; I used a regular general-purpose small-signal NPN transistor, because we were at day 3, I wanted my unit working, and I did not have a suitable NPN SMD transistor in stock. Once the transistor worked under test, I soldered it in properly and stabilized the legs with hot-melt glue. I did not bother cutting the legs short, as that would have made soldering more difficult given the confined spacing between the legs.

The base of Q2 is driven by a thin trace that goes to the via near the BT1 label, close to the Lithium cell. This suggests to me that a real-time watchdog circuit may be driving the base of Q2 to clamp PS-ON to ground, possibly powered by this 3-volt battery when you press the front button or when a scheduled restart is set. That is a good reason to replace an aged Lithium cell.

I replaced the Lithium cell while I had the motherboard out: it had a lower voltage than expected, must be more than 3 years old, and I suspect it powers the power watchdog circuit. I soldered a 2xAA battery pack between the small positive pad near the holder and a ground track pin near the 100 ohm resistor, inserted two alkaline cells (1.62 V each), and then hot-swapped a new Lithium cell into the holder without losing the NAS configuration!
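The hot-swap works because the temporary pack holds the backup rail up while the coin cell is out. A rough sketch of the arithmetic, assuming a nominal 3.0 V lithium coin cell (I have not confirmed the exact cell type) and an arbitrary ±0.5 V acceptance window of my own choosing: two alkaline cells at 1.62 V each give about 3.24 V in series, close enough to stand in for the coin cell.

```python
NOMINAL_COIN_CELL_V = 3.0  # assumed nominal lithium coin-cell voltage
WINDOW_V = 0.5             # hypothetical acceptance window around nominal


def series_voltage(cell_voltages: list[float]) -> float:
    """Cells in series add their voltages."""
    return sum(cell_voltages)


def can_bridge(pack_voltage: float,
               nominal: float = NOMINAL_COIN_CELL_V,
               window: float = WINDOW_V) -> bool:
    """True if the temporary pack is within +/- window of the coin cell's nominal voltage."""
    return abs(pack_voltage - nominal) <= window


pack = series_voltage([1.62, 1.62])
print(f"{pack:.2f} V, bridge OK: {can_bridge(pack)}")  # 3.24 V, bridge OK: True
```

A single alkaline cell (about 1.6 V) would fail this check, which is why a two-cell pack is used.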

I also employed safe electrostatic discharge (ESD) techniques when working on the board, which obviously has active parts near the Lithium cell. I have an electrostatic wrist strap, which I clipped to the Ethernet RJ45 connector case while inspecting and operating on the board. It is also a good idea to use a proper ESD-safe soldering iron, or better still an SMD rework station. If you are using a mains-powered soldering iron, disconnect it from the mains while soldering components, and it is a good idea to earth the barrel to the board with a ground strap. You must also take care not to short anything out on the board. You should also not run the motherboard for more than a minute or so with the fans disconnected, because the Intel Atom processor will overheat and the unit will alarm.

I run my NAS 24x7 on an Eaton UPS, and it has now been running non-stop since Oct 22 without any unexpected shutdowns or notifications, so this may have fixed the problem. It may be that Q2 went "soft", as we say in the industry, probably because it was not operating properly in the saturation region. (If it stops working, I will post here when it does.)

I have been considering upgrading my 5-bay 15 TB NAS to an 8-bay unit, which would have consigned the old brick to my spare-parts bin. However, I have had great satisfaction from diagnosing what appeared, at the time of writing, to be an inadequately reported fault with a simple repair. (It was possibly a matter of not giving Dr Google the right search terms, for I have since found many reports of repairs.)

I also thought that reporting the extensive details of this repair to Synology might get me somewhere towards purchasing an upgraded replacement motherboard, but I was wrong. Hence I have published this on the web, in the hope that it will help others recover their bricks.

STOP PRESS

After diagnosing and repairing the unit and writing it up in this mediawiki, I searched to see whether Google had indexed this page yet. I found that Dr Google points to a repair another person made that pre-dates mine by 3 months. They describe essentially the same repair at https://www.avforums.com/threads/synology-nas-died-fixed.2367106/. Their board had a transistor with a different SMD marking, which they were able to identify as a BC847, with a maximum hFE of 800. That gives me comfort in my diagnosis and in my choice of an NPN transistor with a higher hFE of 860. With a Vbe(sat) of 700 to 900 mV and a Vbe(on) of 580 to 720 mV, a base voltage of about 0.6 V means the board is sailing very close to the wind, so I believe it is important to ensure the Lithium battery is fresh.
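To make "close to the wind" concrete, the sketch below places the measured base voltage of about 0.6 V against the BC847 ranges quoted above. The field names are my own, and the sketch ignores the fact that real Vbe shifts with collector current and temperature; it simply shows that 600 mV is only 20 mV above the bottom of the Vbe(on) range and 100 mV short of the lowest Vbe(sat) figure.

```python
# BC847 base-emitter ranges in millivolts, as quoted above.
VBE_ON_MV = (580, 720)
VBE_SAT_MV = (700, 900)


def base_drive_margin(measured_mv: float) -> dict:
    """Compare a measured base voltage against the Vbe(on) and Vbe(sat) ranges."""
    return {
        "above_min_vbe_on_mv": measured_mv - VBE_ON_MV[0],
        "below_min_vbe_sat_mv": VBE_SAT_MV[0] - measured_mv,
        "within_vbe_on_range": VBE_ON_MV[0] <= measured_mv <= VBE_ON_MV[1],
        "reaches_vbe_sat_range": measured_mv >= VBE_SAT_MV[0],
    }


print(base_drive_margin(600))
```

For a 600 mV reading this reports a 20 mV margin above the Vbe(on) minimum, a 100 mV shortfall below the Vbe(sat) minimum, and that the saturation range is not reached.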

So there you go, here are all the necessary known mods:

- a 100 ohm resistor to address the Intel C2000 stepping B0 silicon fault (some have found 800 ohms or so will work but others have had to use 100 ohms)

- replace Q2 with a high-gain transistor (BC847 or better)

- replace the Lithium battery.

I am now confident that my fix was the expedient thing to do. The only alternative was to purchase another motherboard to replace my faulty one, which Synology Technical Support stated was not possible. Hence I have published the repair here, and of course I await its next failure.

Intel allegedly produced a faulty chip by accident and passed it on to the manufacturers, who then got blamed by their customers, and Synology allegedly wait for the warranty claim period to pass. Who would have thought that a processor chip could fail like that? I didn't, before this experience. Of course, Synology are also aware of the other failure mode: the switching transistor for ATX PS-ON (the green wire). Synology seem to have kept that disclosure hidden; they want you to purchase a whole new unit.

Congratulations to Will I Aint (https://www.avforums.com/threads/synology-nas-died-fixed.2367106/) for arriving at this fix before I even knew about it. Well done, particularly since electronics was not their forte.

Had I found your forum thread with Dr Google before embarking on my fix, I would have had the confidence to go straight to replacing the culprit without hesitation. In my opinion it is a poor design, with too little drive to Q2. It would have behoved Synology to provide at least 900 mV in order to saturate Q2, and had I designed the circuit I would have used a low-threshold N-channel MOSFET instead.

Typically an NPN switching transistor should be driven from a higher voltage through a current-limiting resistor in the base circuit. It is the base current, supplied from a voltage source above Vbe(sat), that drives a transistor into saturation, and that base current must be at least the collector current divided by the minimum hFE. That is why it is important to select a transistor with a high hFE to address the failure. Most off-the-shelf general-purpose NPN transistors can have an hFE as low as 100, and they age with load and time (i.e. they can go soft and wear out). I am not surprised that Q2 failed to work properly, given such a low voltage measured on the base pad and a production transistor with such a wide spread in hFE!
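The base-current rule above can be turned into a small worked example. Every number here is an assumption for illustration, not a measurement from the Synology board: a hypothetical PS-ON sink current of 1.6 mA, a worst-case hFE of 100 (the low end mentioned above), an overdrive factor of 10 (a common rule of thumb for driving a switch hard into saturation), a hypothetical 3.3 V drive, and the 0.9 V upper Vbe(sat) figure quoted for the BC847.

```python
def min_base_current(ic_a: float, hfe_min: float) -> float:
    """Minimum base current for the transistor to carry ic_a: Ib >= Ic / hFE(min)."""
    return ic_a / hfe_min


def base_resistor(v_drive: float, vbe_sat: float, ib_a: float) -> float:
    """Current-limiting base resistor for a drive voltage above Vbe(sat)."""
    if v_drive <= vbe_sat:
        raise ValueError("drive voltage must exceed Vbe(sat)")
    return (v_drive - vbe_sat) / ib_a


# Hypothetical values for illustration only.
IC = 1.6e-3       # collector (PS-ON sink) current, amps
HFE_MIN = 100     # worst-case gain of a general-purpose NPN
OVERDRIVE = 10    # rule-of-thumb margin to force saturation
V_DRIVE = 3.3     # hypothetical drive voltage
VBE_SAT = 0.9     # upper Vbe(sat) figure quoted for the BC847

ib_min = min_base_current(IC, HFE_MIN)
ib = ib_min * OVERDRIVE
rb = base_resistor(V_DRIVE, VBE_SAT, ib)
print(f"Ib(min) = {ib_min * 1e6:.0f} uA, Ib = {ib * 1e6:.0f} uA, Rb = {rb / 1e3:.1f} kohm")
```

With these assumed numbers the minimum base current is about 16 µA, about 160 µA with overdrive, and the base resistor works out to roughly 15 kΩ. A higher-gain transistor lowers the base current needed, which is the reason for preferring a high hFE when the available drive is marginal.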

I expect there will be more failures on other boards as the silicon ages; it is a matter of time and use. I know that turning it off and on accelerates the failures, and I suspect the WOL and scheduled shutdown did not help. It is probably no coincidence that my unit bricked on two separate occasions after setting WOL and a scheduled shutdown.

synologyonline.com

I also discovered the website http://synologyonline.com/, which offers revision and repair servicing of Synology NAS units for a flat rate. They will also purchase non-operating units if you don't want to repair them, or if you have relegated them to your scrap bin. Had I known about it at the time, I would have used that service, were it not for the cost of the delays and shipping. I may yet use their service for defunct units and switch product vendors. I should write to them and ask whether they have replacement motherboards with all their fixes, and at what cost, because Synology were not forthcoming.

Ray responded to my email and said he can repair and return a motherboard. He suggested finding a second-hand motherboard to send to him for repair, which could then be kept as a spare. That is a great idea if your system is mission critical and you need your unit working 24x7 with minimal outage.

For what is claimed, I believe the advertised price and the one-year warranty make it a great service.

conclusion

I hope you find this article and the reference links helpful. It took me longer to write this than to fix the unit, lol. All up it's been nearly two days of fixing, corresponding, and documenting instead of writing my software, but it was satisfying to diagnose and make the fix. Seriously though, I can't vouch for the reliability, and I have lost confidence in the product. Based on the cost of my time, it would have been more cost effective to just purchase a replacement, but I hate electronic waste. In my opinion this unit should have run until the disks flew apart or the cows came home, but it degrades and dies instead. That is pretty convenient, isn't it: built-in obsolescence due to one chip manufacturer's mistake and another manufacturer's apparently poor design or component selection, because it does fail, doesn't it?

With worldwide chip shortages, I think we will need our electronics to run as long as possible, and repairs will be par for the course in future. I am sure people have voted with their feet; I am still hanging in there because I actually like the product. I run my own software on it too.

background

I have nearly always been interested in electronics. I built my first circuit at the age of 4 for a show-and-tell at pre-school. When I was 4, Dad taught me how to solder and built me a soldering iron out of spare parts and a rewound TV-set transformer. Dad was an Amateur Radio Operator (ham), and we spent hours in his laboratories at all the places we lived together. As a result I hold an Advanced Class Amateur Radio Operator's Licence (limited since 1970 and unlimited since 1990) that permits me to design, build, and operate transceivers and to work on radio equipment. Thus I have designed and built transceivers and switch-mode power supplies, and constructed all sorts of projects.

I subsequently chose a career path in Information Technology over 45 years ago, and I am a Software Consultant by trade: one who designs and writes code (for over 45 years, and I still do). I also love making hardware projects using micro-controllers and Raspberry Pi, and I get satisfaction from diagnosing and repairing equipment faults. I guess you could call me a techno-freak or a nerd, for I am either writing software or making something, in between flying my aircraft and visits from my grandchildren, whom I also take flying because they love it.

With ageing vision and SMD parts as tiny and obscure as grains of sand, I am glad I switched to Information Technology early in my career and left SMD rework to younger people. Though I have done my fair share of SMD modding of commercial transceivers, it is not as enjoyable as writing code, plus I can resort to a large font, lol.

I have now purchased an electronic document presentation camera on eBay that can produce a large-scale video output of page-size material, such as SMD boards, and I look forward to its arrival. (Thanks to Ray from http://synologyonline.com for suggesting this camera-microscope system for SMD work. I also want to get this presentation camera working with Linux.)

I plan to teach Electronics and Information Technology with it.

You may contact me if you get stuck or feel uncomfortable making the repairs yourself, particularly if you are local to the ACT.

ralph . holland AT live.com.au

Update: 2022-07-20

That old NAS is still working, but I bought a new Synology 8-bay unit and migrated my backups to brand-new, larger, helium-filled enterprise disks. One of the brand-new disks failed during the migration: it progressively failed and then hard-failed 3 days in, and it took over a month to get a replacement under warranty. In the interim I purchased a disk from another supplier; the price had gone up by $230, leaving not much change from $1000. I had to get my new NAS out of its failure mode and get the nightly backups working again, because it was just too risky for my company not to have an operating NAS; the old one was practically full but saw me through the month. Once I had properly working drives, it took nearly 5 days to build the disk array and then another 3 days to perform the first disk scrub. I now have a cold spare drive and 4 spare bays that can be used for upgrades and data migration. I do not want to go through a migration from one NAS to another across the network again!

I am still waiting for my Elmo camera. It has allegedly been stuck in a USPS warehouse due to covid-19 since Nov 21, and I am assuming it is lost for ever now. USPS stated that they were no longer shipping to Australia or NZ for the foreseeable future, so I am not using them again!

Since my eyes could no longer wait for the Elmo to arrive I purchased a brand-new Hayear HY-2070 microscope that has a display and HDMI and USB output. I made sure that it had Linux software too before I purchased.

I find it extremely cost-effective and useful. I did have to pull the screen apart, though, layer by layer, and wash off a water mark on the semi-translucent back-lighting sheet that kept showing up in the image. So you could say I repair LCD screens too.

It was a bit of a job putting the screen back together though - so I would rather not do that again, and it is a lot easier writing and repairing software.

I also repaired a web-server board whose quad SMD embedded processor was crooked and shorting across the pads, using the above camera, lots of flux, my recently purchased SMD rework station and, of course, by holding my tongue in the right place. So you could say the camera and the SMD rework station paid for themselves. That was the web server for a bore-scope camera that interfaces to an iPhone. The chip was smaller than a postage stamp and had too many legs to bother counting. I had left that web server in my repair box for over a year until I purchased those two items of equipment; it was my first repair using them.

During the first outbreak of covid-19 I had removed the screen on my old iPhone X with a hot-air gun and replaced the battery, which is why I purchased a proper SMD rework station with a hot plate and a hot-air torch. I expect I will be forced to make more equipment repairs.

The things we do to keep a company running, because of the massive supply-chain problems and lockdowns due to covid-19.