Publications Access Graphs: Difference between revisions

Revision as of 12:50, 30 January 2026

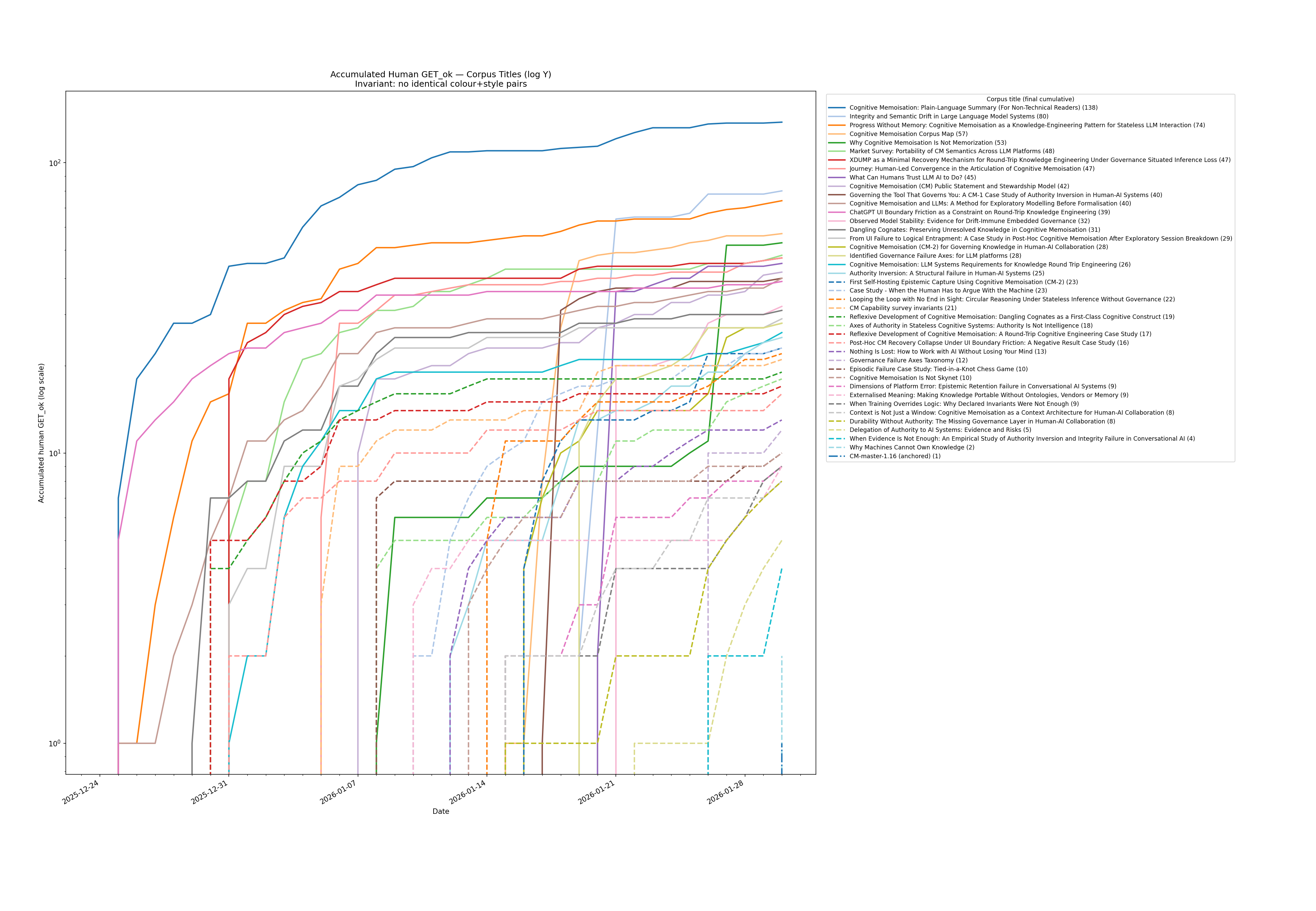

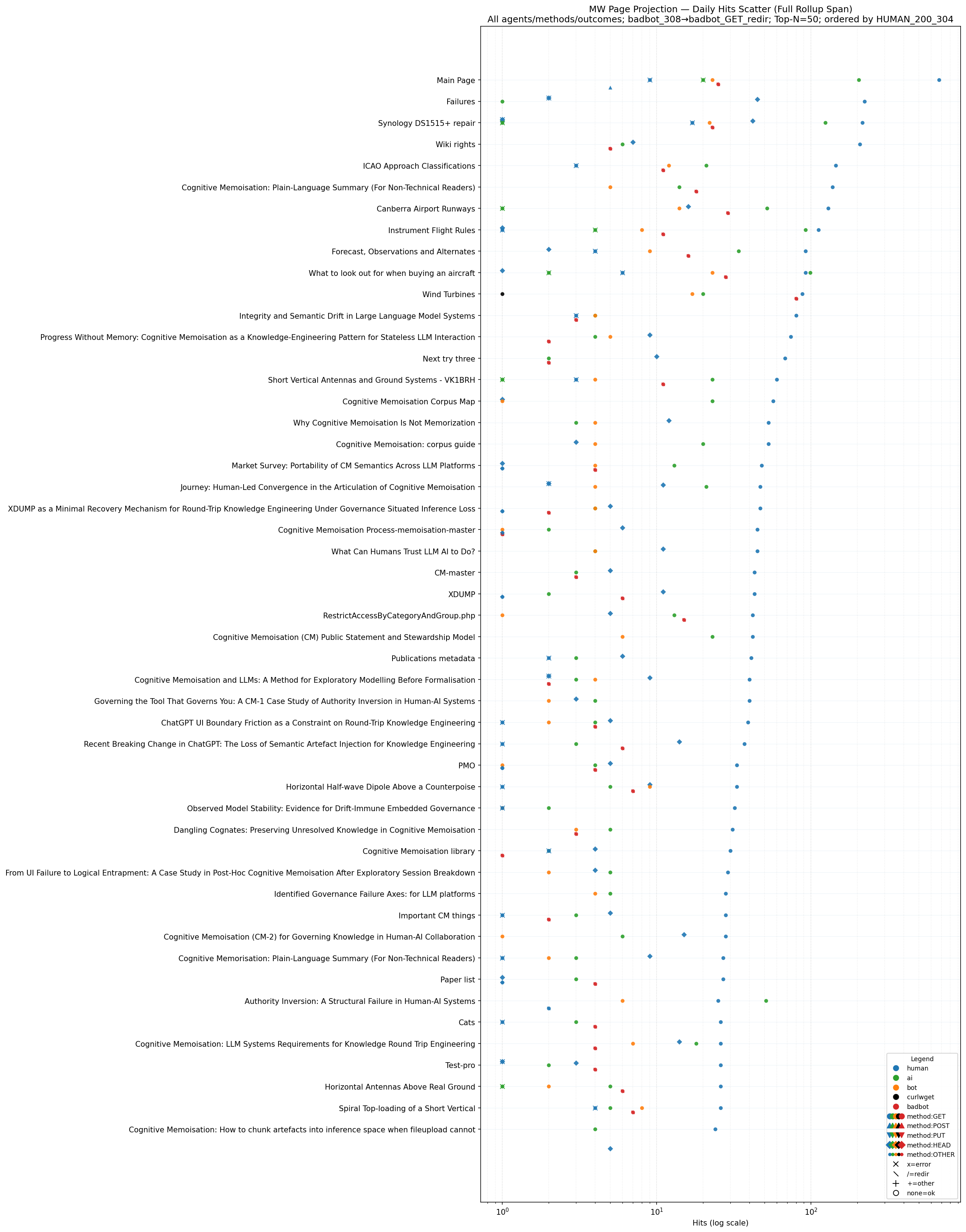

Publications access graphs

- 2026-01-30 from 2025-12-25: accumulated human get

- 2026-01-30 from 2025-12-25: page access scatter plot

Corpus Projection Invariants (Normative)

There are two main projections:

- accumulated human_get time series

- page_category scatter plot

There is one set of invariants for title normalisation and corpus membership. Across the two projections:

- Corpus membership constraints apply ONLY to the accumulated human_get time series.

- The daily hits scatter is NOT restricted to corpus titles.

- Main_Page is excluded from the accumulated human_get projection but not from the scatter plot; this is intentional.

Authority and Governance

- The projections are curator-governed and MUST be reproducible from declared inputs alone.

- The assisting system MUST NOT infer, rename, paraphrase, merge, split, or reorder titles beyond the explicit rules stated here.

- The assisting system MUST NOT optimise for visual clarity at the expense of semantic correctness.

- Any deviation from these invariants MUST be explicitly declared by the human curator with a dated update entry.

Authoritative Inputs

- Input A: Hourly rollup TSVs produced by logrollup tooling.

- Input B: Corpus bundle manifest (corpus/manifest.tsv).

- Input C: Host scope fixed to publications.arising.com.au.

- Input D: Full temporal range present in the rollup set (no truncation).

Path → Title Extraction

- A rollup record contributes to a page only if a title can be extracted by these rules:

- If path matches /pub/<title>, then <title> is the candidate.

- If path matches /pub-dir/index.php?<query>, the title MUST be taken from title=<title>.

- If title= is absent, page=<title> MAY be used.

- Otherwise, the record MUST NOT be treated as a page hit.

- URL fragments (#…) MUST be removed prior to extraction.

Title Normalisation

- URL decoding MUST occur before all other steps.

- Underscores (_) MUST be converted to spaces.

- UTF-8 dashes (–, —) MUST be converted to ASCII hyphen (-).

- Whitespace runs MUST be collapsed to a single space and trimmed.

- After normalisation, the title MUST exactly match a manifest title to remain eligible.

- Main Page MUST be excluded from this projection.
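The normalisation steps above can be sketched as a small function. This is a minimal illustration, not the curator's tooling: the names `normalise_title` and `eligible` are hypothetical, and the manifest titles are assumed to be already normalised.

```python
import re
from urllib.parse import unquote


def normalise_title(raw: str) -> str:
    """Apply the normalisation steps in the order listed above."""
    title = unquote(raw)                       # URL decoding comes first
    title = title.replace("_", " ")            # underscores -> spaces
    title = title.replace("\u2013", "-")       # en dash -> ASCII hyphen
    title = title.replace("\u2014", "-")       # em dash -> ASCII hyphen
    return re.sub(r"\s+", " ", title).strip()  # collapse and trim whitespace


def eligible(raw: str, manifest_titles: set) -> bool:
    """True when the normalised title exactly matches a manifest title.

    Main Page is always rejected for this projection.
    """
    title = normalise_title(raw)
    return title != "Main Page" and title in manifest_titles
```

Matching is exact and case-sensitive here, which follows the "exactly match" wording; no fuzzy matching is attempted.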

Wiki Farm Canonicalisation (Mandatory)

- Each MediaWiki instance in a farm is identified by a (vhost, root) pair.

- Each instance exposes paired URL forms:

- /<x>/<Title>

- /<x-dir>/index.php?title=<Title>

- For the bound vhost:

- /<x>/ and /<x-dir>/index.php MUST be treated as equivalent roots.

- All page hits MUST be folded to a single canonical resource per title.

- Canonical resource key:

- (vhost, canonical_title)

Resource Extraction Order (Mandatory)

- URL-decode the request path.

- Extract title candidate:

- If path matches ^/<x>/<title>, extract <title>.

- If path matches ^/<x-dir>/index.php?<query>:

- Use title=<title> if present.

- Else MAY use page=<title> if present.

- Otherwise the record is NOT a page resource.

- Canonicalise title:

- "_" → space

- UTF-8 dashes (–, —) → "-"

- Collapse whitespace

- Trim leading/trailing space

- Apply namespace exclusions.

- Apply infrastructure exclusions.

- Apply canonical folding.

- Aggregate.
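The extraction and folding steps can be sketched as follows, stopping before the namespace and infrastructure exclusions. The function name `resource_key` and the default roots `pub` and `pub-dir` are assumptions for illustration (the roots are taken from the `/pub/<title>` examples earlier). The query string is split off before decoding so that an encoded `?` or `&` inside a title is not mistaken for a delimiter.

```python
import re
from urllib.parse import parse_qs, unquote, urlsplit


def canonical_title(raw: str) -> str:
    """Underscores to spaces, UTF-8 dashes to '-', whitespace collapsed and trimmed."""
    title = raw.replace("_", " ").replace("\u2013", "-").replace("\u2014", "-")
    return re.sub(r"\s+", " ", title).strip()


def resource_key(vhost: str, request: str, x: str = "pub", x_dir: str = "pub-dir"):
    """Return (vhost, canonical_title), or None when the request is not a page resource."""
    parts = urlsplit(request)          # separates path, query and fragment
    path = unquote(parts.path)         # URL-decode the path component
    if path == f"/{x_dir}/index.php":
        query = parse_qs(parts.query)  # parse_qs also percent-decodes values
        candidates = query.get("title") or query.get("page")
        if not candidates:
            return None
        title = candidates[0]
    elif path.startswith(f"/{x}/") and len(path) > len(x) + 2:
        title = path[len(x) + 2:]      # everything after "/<x>/"
    else:
        return None
    return (vhost, canonical_title(title))
```

Both URL forms of the same page yield the same key, so later aggregation can group on the returned tuple.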

Infrastructure Exclusions (Mandatory)

Exclude:

- /

- /robots.txt

- Any path containing "sitemap"

- Any path containing /resources or /resources/

- /<x-dir>/index.php

- /<x-dir>/load.php

- /<x-dir>/api.php

- /<x-dir>/rest.php/v1/search/title

Exclude static resources by extension:

- .png .jpg .jpeg .gif .svg .ico .webp
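A predicate for these exclusions might look like the sketch below. The name `is_infrastructure` and the `pub-dir` default are hypothetical, the input is assumed to be a path with the query string already removed, and the case-insensitive matching of "sitemap", "/resources" and file extensions is an assumption rather than something the list states.

```python
STATIC_EXTENSIONS = (".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".webp")


def is_infrastructure(path: str, x_dir: str = "pub-dir") -> bool:
    """True when the path must be excluded as infrastructure or a static resource."""
    lower = path.lower()
    if path in ("/", "/robots.txt"):
        return True
    if "sitemap" in lower or "/resources" in lower:
        return True
    endpoints = (
        f"/{x_dir}/index.php",
        f"/{x_dir}/load.php",
        f"/{x_dir}/api.php",
        f"/{x_dir}/rest.php/v1/search/title",
    )
    if path in endpoints:
        return True
    return lower.endswith(STATIC_EXTENSIONS)  # str.endswith accepts a tuple
```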

Accumulated human_get time series (projection)

Eligible Resource Set (Corpus Titles)

- The eligible title set MUST be derived exclusively from corpus/manifest.tsv.

- Column 1 of manifest.tsv is the authoritative MediaWiki page title.

- Only titles present in the manifest (after normalisation) are eligible for projection.

- Titles present in the manifest MUST be included in the projection domain even if they receive zero hits in the period.

- Titles not present in the manifest MUST be excluded even if traffic exists.

Noise and Infrastructure Exclusions

- The following MUST be excluded prior to aggregation:

- Special:, Category:, Category talk:, Talk:, User:, User talk:, File:, Template:, Help:, MediaWiki:

- /resources/, /pub-dir/load.php, /pub-dir/api.php, /pub-dir/rest.php

- /robots.txt, /favicon.ico

- sitemap (any case)

- Static resources by extension (.png, .jpg, .jpeg, .gif, .svg, .ico, .webp)

Temporal Aggregation

- Hourly buckets MUST be aggregated into daily totals per title.

- Accumulated value per title is defined as:

- cum_hits(title, day_n) = Σ daily_hits(title, day_0 … day_n)

- Accumulation MUST be monotonic and non-decreasing.
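The aggregation and running sum can be sketched as below. The function name and the input shape, one `(title, day, hits)` row per hourly bucket, are assumptions for illustration; the sketch also assumes at least one input row.

```python
from collections import defaultdict
from datetime import date, timedelta
from itertools import accumulate


def cumulative_series(hourly_rows, titles):
    """Fold hourly rows into daily totals, then accumulate per title.

    hourly_rows: iterable of (title, datetime.date, hits), one row per hourly bucket.
    titles: the eligible title set; titles with no hits still get a series.
    Returns (days, {title: [cum_hits per day]}).
    """
    daily = defaultdict(int)
    for title, day, hits in hourly_rows:
        daily[(title, day)] += hits

    all_days = [day for _, day in daily]
    first, last = min(all_days), max(all_days)
    days = [first + timedelta(days=i) for i in range((last - first).days + 1)]

    series = {
        title: list(accumulate(daily.get((title, day), 0) for day in days))
        for title in titles
    }
    return days, series
```

Because every daily value is non-negative, each series is non-decreasing by construction. Leading zeros (days before a title's first hit) still have to be masked before plotting, since zero cannot appear on the logarithmic Y axis.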

Axis and Scale Invariants

- X axis: calendar date from earliest to latest available day.

- Major ticks every 7 days.

- Minor ticks every day.

- Date labels MUST be rotated (oblique) for readability.

- Y axis MUST be logarithmic.

- Zero or negative values MUST NOT be plotted on the log axis.

Legend Ordering

- Legend entries MUST be ordered by descending final accumulated human_get_ok.

- Ordering MUST be deterministic and reproducible.

Visual Disambiguation Invariants

- Each title MUST be visually distinguishable.

- The same colour MAY be reused.

- The same line style MAY be reused.

- The same (colour + line style) pair MUST NOT be reused.

- Markers MAY be omitted or reused but MUST NOT be relied upon as the sole distinguishing feature.

Rendering Constraints

- Legend MUST be placed outside the plot area on the right.

- Sufficient vertical and horizontal space MUST be reserved to avoid label overlap.

- Line width SHOULD be consistent across series to avoid implied importance.

Interpretive Constraint

- This projection indicates reader entry and navigation behaviour only.

- High lead-in ranking MUST NOT be interpreted as quality, authority, or endorsement.

- Ordering reflects accumulated human access, not epistemic priority.

Periodic Regeneration

- This projection is intended to be regenerated periodically.

- Cross-run comparisons MUST preserve all invariants to allow valid temporal comparison.

- Changes in lead-in dominance (e.g. Plain-Language Summary vs. CM-1 foundation paper) are observational signals only and do not alter corpus structure.

Metric Definition

- The only signal used is human_get_ok.

- Non-human classifications MUST NOT be included.

- No inference from other status codes or agents is permitted.

Corpus Lead-In Projection: Deterministic Colour Map

This table provides the visual encoding for the core corpus pages. For titles not included in the colour map, use colours at your discretion until a Colour Map entry exists.

Colours are drawn from the Matplotlib tab20 palette.

Line styles are assigned to ensure that no (colour + line-style) pair is reused. Legend ordering is governed separately by accumulated human_get_ok.

Corpus Lead-In Projection: Colour-Map Hardening Invariants

This section hardens the visual determinism of the Corpus Lead-In Projection while allowing controlled corpus growth.

Authority

- This Colour Map is **authoritative** for all listed corpus pages.

- The assisting system MUST NOT invent, alter, or substitute colours or line styles for listed pages.

- Visual encoding is a governed property, not a presentation choice.

Binding Rule

- For any page listed in the Deterministic Colour Map table:

- The assigned (colour index, colour hex, line style) pair MUST be used exactly.

- Deviation constitutes a projection violation.

Legend Ordering Separation

- Colour assignment and legend ordering are orthogonal.

- Legend ordering MUST continue to follow the accumulated human_get_ok invariant.

- Colour assignment MUST NOT be influenced by hit counts, rank, or ordering.

New Page Admission Rule

- Pages not present in the current Colour Map MUST appear in a projection.

- New pages MUST be assigned styles in strict sequence order:

- Iterate line style first, then colour index, exactly as defined in the base palette.

- Previously assigned pairs MUST NOT be reused.

- The assisting system MUST NOT reshuffle existing assignments to “make space”.

Provisional Encoding Rule

- Visual assignments for newly admitted pages are **provisional** until recorded.

- A projection that introduces provisional encodings MUST:

- Emit a warning note in the run metadata, and

- Produce an updated Colour Map table for curator review.

Curator Ratification

- Only the human curator may ratify new colour assignments.

- Ratification occurs by appending new rows to the Colour Map table with a date stamp.

- Once ratified, assignments become binding for all future projections.

Backward Compatibility

- Previously generated projections remain valid historical artefacts.

- Introduction of new pages MUST NOT retroactively alter the appearance of older projections.

Failure Mode Detection

- If a projection requires more unique (colour, line-style) pairs than the declared palette provides:

- The assisting system MUST fail explicitly.

- Silent reuse, substitution, or visual approximation is prohibited.
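The admission and failure rules can be sketched as a small allocator. Several details here are assumptions: the four line styles, the reading of "line style first" as "line style varies fastest within each colour index", and the names `admit_pages`, `LINE_STYLES` and `N_COLOURS`. Only the palette size of 20 follows from the tab20 reference.

```python
from itertools import product

LINE_STYLES = ("-", "--", "-.", ":")  # assumed set of line styles
N_COLOURS = 20                        # tab20 provides 20 colours


def admit_pages(assigned, new_titles):
    """Assign provisional (colour_index, line_style) pairs to new pages.

    assigned: {title: (colour_index, line_style)} from the ratified Colour Map.
    new_titles: titles to admit, in the order they should receive styles.
    Existing assignments are never changed; exhaustion raises instead of reusing.
    """
    used = set(assigned.values())
    # product() varies its last argument fastest, so line style cycles first.
    free = (pair for pair in product(range(N_COLOURS), LINE_STYLES) if pair not in used)
    provisional = {}
    for title in new_titles:
        if title in assigned or title in provisional:
            continue
        try:
            provisional[title] = next(free)
        except StopIteration:
            raise RuntimeError(
                f"palette exhausted: no unused (colour, line-style) pair for {title!r}"
            ) from None
    return provisional
```

The returned mapping is provisional only; under the rules above it would be emitted for curator review rather than merged automatically.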

Rationale (Non-Normative)

- This hardening ensures:

- Cross-run visual comparability

- Human recognition of lead-in stability

- Detectable drift when corpus structure changes

- Visual determinism is treated as part of epistemic governance, not aesthetics.

Daily hits scatter (projection)

Purpose:

- Produce deterministic, human-meaningful MediaWiki page-level analytics from nginx bucket TSVs, folding all URL variants to canonical resources and rendering a scatter projection across agents, HTTP methods, and outcomes.

Authority

- These invariants are normative.

- The assisting system MUST follow them exactly.

- Visual encoding is a governed semantic property, not a presentation choice.

Inputs

- Bucket TSVs produced by page_hits_bucketfarm_methods.pl

- Required columns:

- server_name

- path (or page_category)

- <agent>_<METHOD>_<outcome> numeric bins

- Other columns MAY exist and MUST be ignored unless explicitly referenced.

Scope

- Projection MUST bind to exactly ONE nginx virtual host at a time.

- Example: publications.arising.com.au

- Cross-vhost aggregation is prohibited.

Namespace Exclusions (Mandatory)

Exclude titles with case-insensitive prefix:

- Special:

- Category:

- Category talk:

- Talk:

- User:

- User talk:

- File:

- Template:

- Help:

- MediaWiki:

- Obvious misspellings (e.g. Catgeory:) SHOULD be excluded.
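A prefix check for the listed namespaces could be sketched as below; the names are hypothetical. It assumes the title has already been canonicalised (so `Category_talk:` arrives as `Category talk:`) and it does not attempt to detect misspelled prefixes, which would need a curator-maintained list.

```python
EXCLUDED_NAMESPACES = (
    "special:", "category:", "category talk:", "talk:", "user:",
    "user talk:", "file:", "template:", "help:", "mediawiki:",
)


def in_excluded_namespace(title: str) -> bool:
    """Case-insensitive prefix match against the excluded namespaces."""
    return title.strip().lower().startswith(EXCLUDED_NAMESPACES)
```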

Bad Bot Hits

- Bad bot hits MUST be included for any canonical page resource that survives normalisation and exclusion.

- Bad bot traffic MUST NOT be excluded solely by agent class; it MAY only be excluded if the request is excluded by namespace or infrastructure rules.

- badbot_308 SHALL be treated as badbot_GET_redir for scatter projections.

- Human success ordering (HUMAN_200_304) remains the sole ordering metric; inclusion of badbot hits MUST NOT affect ranking.

Aggregation Invariant (Mandatory)

- Aggregate across ALL rollup buckets in the selected time span.

- GROUP BY canonical resource.

- SUM all numeric <agent>_<METHOD>_<outcome> bins.

- Each canonical resource MUST appear exactly once.

Human Success Spine (Mandatory)

- Define ordering metric:

- HUMAN_200_304 := human_GET_ok + human_GET_redir

- This metric is used ONLY for vertical ordering.

Ranking and Selection

- Sort resources by HUMAN_200_304 descending.

- Select Top-N resources (default N = 50).

- Any non-default N MUST be declared in run metadata.
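Aggregation, the ordering metric and Top-N selection can be sketched together. The function name and input shape are assumptions, as is the tie-break on the resource key (added only so that equal counts still sort deterministically). The bin names follow the spelling used in this section; the rollup code itself emits lowercase column names such as `human_get_ok`, so a real loader would need to map between the two.

```python
from collections import Counter, defaultdict


def rank_resources(rows, top_n=50):
    """Sum bins per canonical resource, then rank by HUMAN_200_304.

    rows: iterable of (resource_key, {bin_name: count}), one per bucket record.
    Returns a list of (resource_key, summed_bins), best first, at most top_n long.
    """
    totals = defaultdict(Counter)
    for key, bins in rows:
        totals[key].update(bins)  # Counter.update adds counts

    def human_200_304(key):
        return totals[key]["human_GET_ok"] + totals[key]["human_GET_redir"]

    ranked = sorted(totals, key=lambda k: (-human_200_304(k), k))
    return [(key, dict(totals[key])) for key in ranked[:top_n]]
```

Non-human bins, including bad bot hits, are summed and carried through but play no part in the sort key.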

Rendering Invariants (Scatter Plot)

Axes

- X axis MUST be logarithmic.

- X axis MUST include log-paper verticals:

- Major: 10^k

- Minor: 2..9 × 10^k

- Y axis lists canonical resources ordered by HUMAN_200_304.
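The log-paper verticals can be computed with integer arithmetic alone. This sketch assumes the X axis starts at 1 (hit counts) and uses a hypothetical function name; the resulting positions would then be handed to whatever plotting library draws the grid.

```python
def log_paper_ticks(xmax: int):
    """Return (majors, minors) tick positions covering 1..xmax.

    Majors are 10^k; minors are 2..9 times 10^k, clipped to xmax.
    """
    majors, minors = [], []
    decade = 1
    while decade <= xmax:
        majors.append(decade)
        minors.extend(m * decade for m in range(2, 10) if m * decade <= xmax)
        decade *= 10
    return majors, minors
```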

Baseline Alignment

- The resource label baseline MUST align with human_GET_ok.

- human_GET_ok points MUST have vertical offset = 0.

- Draw faint horizontal baseline guides for each resource row.

Category Plotting

- ALL agent/method/outcome bins present MUST be plotted.

- No category elision, suppression, or collapsing is permitted.

Intra-Row Separation

- Apply deterministic vertical offsets per METHOD_outcome key.

- Offsets MUST be stable and deterministic.

- human_GET_ok is exempt (offset = 0).

Redirect Jitter

- human_GET_redir MUST receive a small fixed positive offset (e.g. +0.35).

- Random jitter is prohibited.

Encoding Invariants

Agent Encoding

- Agent encoded by colour.

- badbot MUST be red.

Method Encoding

- GET → o

- POST → ^

- PUT → v

- HEAD → D

- OTHER → .

Outcome Overlay

- ok → no overlay

- redir → diagonal slash (/)

- client_err → x

- server_err → x

- other/unknown → +

Legend Invariants

- Legend MUST be present.

- Legend title MUST be exactly: Legend

- Legend MUST explain:

- Agent colours

- Method shapes

- Outcome overlays

- Legend MUST NOT overlap resource labels.

- The legend title MUST NOT carry any text other than "Legend".

Legend Presence (Mandatory)

- A legend MUST be rendered on every scatter plot output.

- The legend title MUST be exactly: Legend

- A projection without a legend is non-compliant.

Legend Content (Mandatory; Faithful to Encoding Invariants)

The legend MUST include three components:

- Agent key (colour):

- human

- ai

- bot

- curlwget

- badbot (MUST be red)

- Method key (base shapes):

- GET → o

- POST → ^

- PUT → v

- HEAD → D

- OTHER → .

- Outcome overlay key:

- x = error (client_err or server_err)

- / = redir

- + = other (other or unknown)

- none = ok

Legend Placement (Mandatory)

- The legend MUST be placed INSIDE the plotting area.

- The legend location MUST be bottom-right (axis-anchored):

- loc = lower right

- The legend MUST NOT be placed outside the plot area (no RHS external legend).

- The legend MUST NOT overlap the y-axis labels (resource labels).

- The legend MUST be fully visible and non-clipped in the output image.

Legend Rendering Constraints (Mandatory)

- The legend MUST use a frame (boxed) to preserve readability over gridlines/points.

- The legend frame SHOULD use partial opacity to avoid obscuring data:

- frame alpha SHOULD be approximately 0.85 (fixed, deterministic).

- Legend ordering MUST be deterministic (fixed order):

- Agents: human, ai, bot, curlwget, badbot

- Methods: GET, POST, PUT, HEAD, OTHER

- Outcomes: x=error, /=redir, +=other, none=ok

Validation

A compliant scatter output SHALL satisfy:

- Legend is present.

- Legend title equals "Legend".

- Legend is inside plot bottom-right.

- Legend is non-clipped.

- Legend contains agent, method, and outcome keys as specified.

Determinism

- No random jitter.

- No data-dependent styling.

- Identical inputs MUST produce identical outputs.

Validation Requirements

- No duplicate logical pages after canonical folding.

- HUMAN_200_304 ordering is monotonic.

- All plotted points trace back to bucket TSV bins.

- /<x> and /<x-dir> variants MUST fold to the same canonical resource.

Rollup code

root@padme:/home/ralph/AI# cat logrollup

#!/usr/bin/env perl

use strict;

use warnings;

use IO::Uncompress::Gunzip qw(gunzip $GunzipError);

use Time::Piece;

use Getopt::Long;

use File::Path qw(make_path);

use File::Spec;

use URI::Escape qw(uri_unescape);

#BEGIN_MWDUMP

#title: CM-bucket-rollup invariants

#format: MWDUMP

#invariants:

# 1. server_name is first-class; never dropped; emitted in output schema and used for optional filtering.

# 2. input globs are expanded then processed in ascending mtime order (oldest -> newest).

# 3. time bucketing is purely mathematical: bucket_start = floor(epoch/period_seconds)*period_seconds.

# 4. badbot is definitive and detected ONLY by HTTP status == 308; no UA regex for badbot.

# 5. AI and bot are derived from /etc/nginx/bots.conf:

# - only patterns mapping to 0 are "wanted"

# - between '# good bots' and '# AI bots' => bot

# - between '# AI bots' and '# unwanted bots' => AI_bot

# - unwanted-bots section ignored for analytics classification

# 6. output TSV schema is fixed (total/host/path last; totals are derivable):

# curlwget|ai|bot|human × (get|head|post|put|other) × (ok|redir|client_err|other)

# badbot_308

# total_hits server_name path

# 7. Path identity is normalised so the same resource collates across:

# absolute URLs, query strings (incl action/edit), MediaWiki title=, percent-encoding, and trailing slashes.

# 8. --exclude-local excludes (does not count) local IP hits and POST+edit hits in the defined window, before bucketing.

# 9. web-farm safe: aggregation keys include bucket_start + server_name + path; no cross-vhost contamination.

# 10. bots.conf parsing must be auditable: when --verbose, report "good AI agent" and "good bot" patterns to STDERR.

# 11. method taxonomy is uniform for all agent categories: GET, HEAD, POST, PUT, OTHER (everything else).

#END_MWDUMP

my $cmd = $0;

# -------- options --------

my ($EXCLUDE_LOCAL, $VERBOSE, $HELP, $OUTDIR, $PERIOD, $SERVER) = (0,0,0,".","01:00","");

GetOptions(

"exclude-local!" => \$EXCLUDE_LOCAL,

"verbose!" => \$VERBOSE,

"help!" => \$HELP,

"outdir=s" => \$OUTDIR,

"period=s" => \$PERIOD,

"server=s" => \$SERVER, # optional filter; empty means all

) or usage();

usage() if $HELP;

sub usage {

print <<"USAGE";

Usage:

$cmd [options] /var/log/nginx/access.log*

Options:

--exclude-local Exclude local IPs and POST edit traffic

--outdir DIR Directory to write TSV outputs

--period HH:MM Period size (duration), default 01:00

--server NAME Only count hits where server_name == NAME (web-farm filter)

--verbose Echo processing information + report wanted agents from bots.conf

--help Show this help and exit

Output:

One TSV per time bucket, named:

YYYY_MM_DDThh_mm-to-YYYY_MM_DDThh_mm.tsv

Columns (total/server/path last; totals derivable):

<agent>_<method>_<status> for agent in curlwget|ai|bot|human,

method in get|head|post|put|other, status in ok|redir|client_err|other

badbot_308

total_hits server_name path

USAGE

exit 0;

}

make_path($OUTDIR) unless -d $OUTDIR;

# -------- period math (no validation, per instruction) --------

my ($PH, $PM) = split(/:/, $PERIOD, 2);

my $PERIOD_SECONDS = ($PH * 3600) + ($PM * 60);

# -------- edit exclusion window --------

my $START_EDIT = Time::Piece->strptime("12/Dec/2025:00:00:00", "%d/%b/%Y:%H:%M:%S");

my $END_EDIT = Time::Piece->strptime("01/Jan/2026:23:59:59", "%d/%b/%Y:%H:%M:%S");

# -------- parse bots.conf (wanted patterns only) --------

my $BOTS_CONF = "/etc/nginx/bots.conf";

my (@AI_REGEX, @BOT_REGEX);

my (@AI_RAW, @BOT_RAW);

open my $bc, "<", $BOTS_CONF or die "$cmd: cannot open $BOTS_CONF: $!";

my $mode = "";

while (<$bc>) {

if (/^\s*#\s*good bots/i) { $mode = "GOOD"; next; }

if (/^\s*#\s*AI bots/i) { $mode = "AI"; next; }

if (/^\s*#\s*unwanted bots/i) { $mode = ""; next; }

next unless $mode;

next unless /~\*(.+?)"\s+0;/;

my $pat = $1;

if ($mode eq "AI") {

push @AI_RAW, $pat;

push @AI_REGEX, qr/$pat/i;

} elsif ($mode eq "GOOD") {

push @BOT_RAW, $pat;

push @BOT_REGEX, qr/$pat/i;

}

}

close $bc;

if ($VERBOSE) {

for my $p (@AI_RAW) { print STDERR "[agents] good AI agent: ~*$p\n"; }

for my $p (@BOT_RAW) { print STDERR "[agents] good bot: ~*$p\n"; }

}

# -------- helpers --------

sub is_local_ip {

my ($ip) = @_;

return 1 if $ip eq "127.0.0.1" || $ip eq "::1";

return 1 if $ip =~ /^10\./;

return 1 if $ip =~ /^192\.168\./;

return 0;

}

sub agent_class {

my ($status, $ua) = @_;

return "badbot" if $status == 308;

return "curlwget" if defined($ua) && $ua =~ /\b(?:curl|wget)\b/i;

for (@AI_REGEX) { return "ai" if $ua =~ $_ }

for (@BOT_REGEX) { return "bot" if $ua =~ $_ }

return "human";

}

sub method_bucket {
    my ($m) = @_;
    return "head" if $m eq "HEAD";
    return "get"  if $m eq "GET";
    return "post" if $m eq "POST";
    return "put"  if $m eq "PUT";
    return "other";
}

sub status_bucket {
    my ($status) = @_;
    return "other"      unless defined($status) && $status =~ /^\d+$/;
    return "ok"         if $status == 200 || $status == 304;
    return "redir"      if $status >= 300 && $status <= 399;    # 308 handled earlier as badbot
    return "client_err" if $status >= 400 && $status <= 499;
    return "other";
}

sub normalise_path {
    my ($raw) = @_;
    my $p = $raw // "";

    $p =~ s{^https?://[^/]+}{}i;    # strip scheme+host if absolute URL
    $p = "/" if $p eq "";

    # Split once so we can canonicalise MediaWiki title= before dropping the query.
    my ($base, $qs) = split(/\?/, $p, 2);
    $qs //= "";

    # Rewrite */index.php?title=X* => */X (preserve directory prefix).
    # The title is left percent-encoded here so that it is decoded exactly once
    # below, and so that an encoded "#" in a title is not cut off as a fragment.
    if ($base =~ m{/index\.php$}i && $qs =~ /(?:^|&)title=([^&]+)/i) {
        my $title = $1;
        (my $prefix = $base) =~ s{/index\.php$}{}i;
        $base = $prefix . "/" . $title;
    }

    # Drop query/fragment entirely (normalise out action=edit etc.)
    $p = $base;
    $p =~ s/#.*$//;

    # Percent-decode ONCE
    $p = uri_unescape($p);

    # Collapse multiple slashes
    $p =~ s{//+}{/}g;

    # Trim trailing slash except for root
    $p =~ s{/$}{} if length($p) > 1;

    # A request target that was only a query or fragment collapses to root.
    $p = "/" if $p eq "";

    return $p;
}
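
# Worked examples for normalise_path, traced by hand through the steps above.
# The host example.org and the /pub prefix are placeholders, not real site paths:
#
#     https://example.org/pub/Foo/               => /pub/Foo
#     /pub/index.php?title=Foo_Bar&action=edit   => /pub/Foo_Bar
#     /pub/Foo%20Bar?x=1                         => /pub/Foo Bar
#     /pub//Foo                                  => /pub/Foo
#     /                                          => /
#
# The first two rows show absolute URLs, trailing slashes, and title= requests
# folding onto the same kind of canonical key as a plain path request.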

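sub fmt_ts {
    my ($epoch) = @_;
    # Epochs here come from Time::Piece->strptime, which treats the log's
    # wall-clock time as UTC. Formatting with gmtime gives that same wall-clock
    # time back; localtime would shift it by the host's UTC offset.
    my $tp = gmtime($epoch);
    return sprintf("%04d_%02d_%02dT%02d_%02d",
        $tp->year, $tp->mon, $tp->mday, $tp->hour, $tp->min);
}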

# -------- log regex (captures server_name as final quoted field) --------

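# Captures, in order: client IP, timestamp, method, request target, status,
# user agent, server_name. The [A-Z]+ method pattern already covers GET, POST
# and HEAD, so no separate alternatives are needed.
my $LOG_RE = qr{
    ^(\S+)\s+\S+\s+\S+\s+\[([^\]]+)\]\s+
    "([A-Z]+)\s+(\S+)[^"]*"\s+
    (\d+)\s+\d+.*?"[^"]*"\s+"([^"]*)"\s+"([^"]+)"\s*$
}x;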
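
# Sample line that $LOG_RE accepts. The layout is inferred from the regex only
# (the actual nginx log_format is not shown here) and all values are made up:
#
#   203.0.113.7 - - [12/Dec/2025:10:15:00 +0000] "GET /pub/Foo HTTP/1.1" 200 1234 "-" "curl/8.5.0" "example.org"
#
# Captures for that line:
#   $1 ip          = 203.0.113.7
#   $2 timestamp   = 12/Dec/2025:10:15:00 +0000
#   $3 method      = GET
#   $4 path        = /pub/Foo
#   $5 status      = 200
#   $6 user agent  = curl/8.5.0
#   $7 server_name = example.org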

# -------- collect files (glob, then mtime ascending) --------

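@ARGV or usage();

my @files;
for my $arg (@ARGV) { push @files, glob($arg) }

# Stat each file once, then order by mtime (oldest first). A file that cannot
# be stat'ed sorts first and is reported when it fails to open below.
@files = map  { $_->[1] }
         sort { $a->[0] <=> $b->[0] }
         map  { [ ((stat $_)[9] // 0), $_ ] }
         @files;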

# -------- bucketed stats --------

# $BUCKETS{bucket_start}{end} = bucket_end
# $BUCKETS{bucket_start}{stats}{server}{page}{metric} = count
my %BUCKETS;

for my $file (@files) {
    print STDERR "$cmd: processing $file\n" if $VERBOSE;

    my $fh;
    if ($file =~ /\.gz$/) {
        $fh = IO::Uncompress::Gunzip->new($file)
            or die "$cmd: gunzip $file: $GunzipError";
    } else {
        open($fh, "<", $file) or die "$cmd: open $file: $!";
    }

    while (<$fh>) {
        next unless /$LOG_RE/;
        my ($ip, $ts, $method, $path, $status, $ua, $server_name) = ($1, $2, $3, $4, $5, $6, $7);

        next if ($SERVER ne "" && $server_name ne $SERVER);

        # Drop the numeric UTC offset; the remaining wall-clock time is parsed as-is.
        my $clean = $ts;
        $clean =~ s/\s+[+-]\d{4}$//;

        # strptime dies on a malformed timestamp; skip that line rather than abort the run.
        my $tp = eval { Time::Piece->strptime($clean, "%d/%b/%Y:%H:%M:%S") };
        next unless defined $tp;
        my $epoch = $tp->epoch;

        # Exclusions happen before bucketing, so excluded hits are never counted.
        if ($EXCLUDE_LOCAL) {
            next if is_local_ip($ip);
            if ($method eq "POST" && $path =~ /edit/i) {
                next if $tp >= $START_EDIT && $tp <= $END_EDIT;
            }
        }

        my $bucket_start = int($epoch / $PERIOD_SECONDS) * $PERIOD_SECONDS;
        my $bucket_end   = $bucket_start + $PERIOD_SECONDS;

        my $npath  = normalise_path($path);
        my $aclass = agent_class($status, $ua);

        my $metric;
        if ($aclass eq "badbot") {
            $metric = "badbot_308";
        } else {
            my $mb = method_bucket($method);
            my $sb = status_bucket($status);
            $metric = join("_", $aclass, $mb, $sb);
        }

        $BUCKETS{$bucket_start}{end} = $bucket_end;
        $BUCKETS{$bucket_start}{stats}{$server_name}{$npath}{$metric}++;
    }

    close $fh;
}

# -------- write outputs --------

my @ACTORS  = qw(curlwget ai bot human);
my @METHODS = qw(get head post put other);
my @SB      = qw(ok redir client_err other);

my @COLS;
for my $actor (@ACTORS) {
    for my $m (@METHODS) {
        for my $s (@SB) {
            push @COLS, join("_", $actor, $m, $s);
        }
    }
}
push @COLS, "badbot_308", "total_hits", "server_name", "path";

for my $bstart (sort { $a <=> $b } keys %BUCKETS) {
    my $bend = $BUCKETS{$bstart}{end};
    my $out  = File::Spec->catfile(
        $OUTDIR,
        fmt_ts($bstart) . "-to-" . fmt_ts($bend) . ".tsv"
    );

    print STDERR "$cmd: writing $out\n" if $VERBOSE;

    open my $outf, ">", $out or die "$cmd: write $out: $!";
    print $outf join("\t", @COLS), "\n";

    my $stats = $BUCKETS{$bstart}{stats};
    for my $srv (sort keys %$stats) {
        # Derive each path's total once (every stored key is a counter column)
        # instead of re-summing all counters inside every sort comparison.
        my %total;
        for my $p (keys %{ $stats->{$srv} }) {
            my $sum = 0;
            $sum += $_ for values %{ $stats->{$srv}{$p} };
            $total{$p} = $sum;
        }

        # Busiest paths first; ties are broken by path so output is reproducible.
        for my $p (sort { $total{$b} <=> $total{$a} || $a cmp $b } keys %total) {
            my @vals;
            for my $c (@COLS) {
                if    ($c eq 'total_hits')  { push @vals, $total{$p} }
                elsif ($c eq 'server_name') { push @vals, $srv }
                elsif ($c eq 'path')        { push @vals, $p }
                else                        { push @vals, $stats->{$srv}{$p}{$c} // 0 }
            }
            print $outf join("\t", @vals), "\n";
        }
    }

    close $outf or die "$cmd: close $out: $!";
}