Nginx-graphs

Revision as of 10:07, 27 January 2026

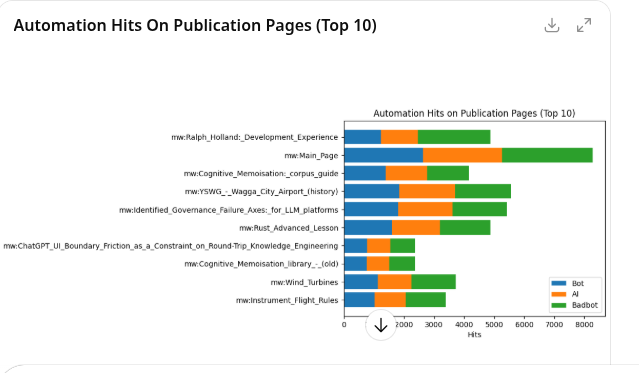

all virtual servers

publications

human traffic

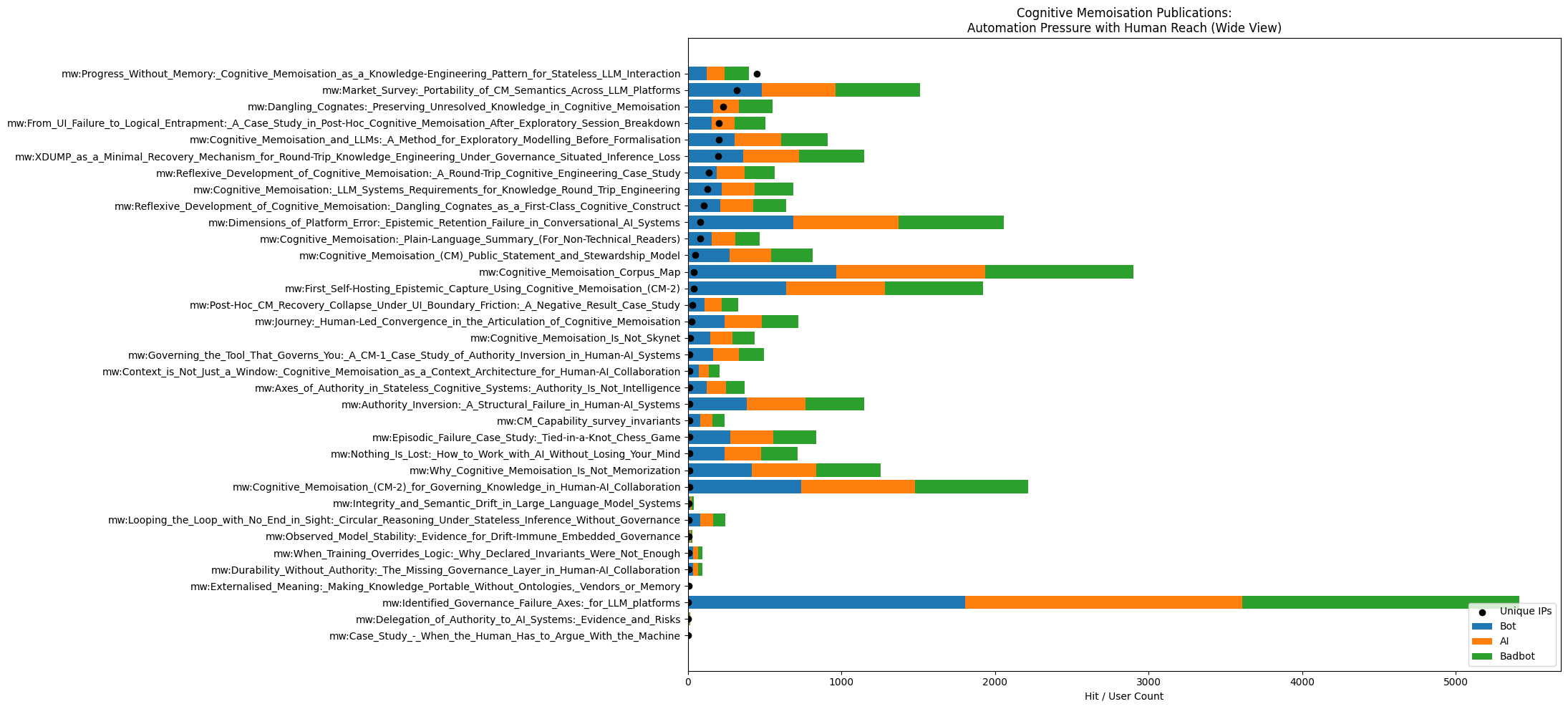

MWDUMP Normative: Log-X Traffic Projection (Publications ∪ CM)

Scope

This normative defines a single 2-D projection plot over the union of:

- Top-N publication pages (by human hits)

- All CM pages

Each page SHALL appear exactly once.

Population

- Host SHALL be publications.arising.com.au unless explicitly stated otherwise.

- Page set SHALL be: UNION( TopN_by_human_hits(publications), All_CM_pages )

Ordering Authority

- Rows (Y positions) SHALL be sorted by human hit count, descending.

- Human traffic SHALL define ordering authority and SHALL NOT be displaced by automation classes.
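The population and ordering rules can be sketched in Perl as follows. This is a minimal sketch, not the reference implementation. It assumes that per-page human hit counts for the publications host are already in a hash and that the caller supplies the CM page list, because the text does not say how CM pages are identified. `build_page_rows` is a hypothetical name, and breaking ties by page name is an assumed rule added only to keep the order deterministic.

```perl
use strict;
use warnings;

# Hypothetical helper: the plotted page list is
#   UNION(TopN_by_human_hits(publications), All_CM_pages)
# with each page listed once and rows ordered by human hits, descending.
# Ties are broken by page name (an assumed rule) so the order is deterministic.
sub build_page_rows {
    my ($human_hits, $cm_pages, $top_n) = @_;   # {page => hits}, [cm pages], N

    # Rank every publication page by human hits, then by name.
    my @ranked = sort {
        $human_hits->{$b} <=> $human_hits->{$a} or $a cmp $b
    } keys %$human_hits;
    my $last = $top_n < @ranked ? $top_n : scalar @ranked;
    my @top  = @ranked[0 .. $last - 1];

    # Set union: a page that is both Top-N and CM is kept only once.
    my %seen;
    my @pages = grep { !$seen{$_}++ } (@top, @$cm_pages);

    # Human hits alone decide row order; CM pages without human hits count as 0.
    return sort {
        ($human_hits->{$b} // 0) <=> ($human_hits->{$a} // 0) or $a cmp $b
    } @pages;
}

my %human = ('Main_Page' => 120, 'CM:Alpha' => 5, 'Papers' => 40, 'Misc' => 2);
print join(", ", build_page_rows(\%human, ['CM:Alpha', 'CM:Beta'], 2)), "\n";
# Main_Page, Papers, CM:Alpha, CM:Beta
```

Automation counts are deliberately absent from the sort, so bot, AI, or bad-bot traffic cannot displace a row.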

Axes

Y Axis (Left)

- Y axis SHALL be categorical page identifiers.

- Full page names SHALL be rendered to the left of the plot region.

- Y axis SHALL be inverted so highest human-hit pages are at the top.

X Axis (Bottom + Top)

- X axis SHALL be log10.

- X axis ticks SHALL be fixed decades: 10^0, 10^1, 10^2, ... up to the highest decade required by the data.

- X axis SHALL be duplicated at the top with identical ticks/labels.

- Vertical gridlines SHALL be rendered at each decade (10^n). Minor ticks SHOULD be suppressed.
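The decade rule can be computed without floating-point `log10`, which avoids rounding errors at exact powers of ten. The sketch below is illustrative only. It assumes that zero counts are left off the log axis because they cannot be placed on it, and `decade_ticks` is a hypothetical name. The same list would serve for the bottom axis, the duplicated top axis, and the decade gridlines.

```perl
use strict;
use warnings;

# Hypothetical helper: fixed decade ticks 10^0 .. 10^k, where 10^k is the
# smallest power of ten >= the largest count, so no artificial decades are added.
sub decade_ticks {
    my @counts = grep { $_ > 0 } @_;   # assumption: zero counts are not plotted on log X
    my $max = 0;
    for my $c (@counts) { $max = $c if $c > $max; }

    my @ticks = (1);                    # 10^0 is always the first tick
    push @ticks, $ticks[-1] * 10 while $ticks[-1] < $max;
    return @ticks;
}

print join(" ", decade_ticks(3, 120, 0, 47)), "\n";   # 1 10 100 1000
```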

Metrics (Plotted Series)

For each page, the following series SHALL be plotted as independent point overlays sharing the same X axis:

- Human hits: hits_human = hits_total - (hits_bot + hits_ai + hits_badbot)

- Bot hits: hits_bot

- AI hits: hits_ai

- Bad-bot hits: hits_badbot
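These series line up with two outputs of the sample script later on this page: `page_hits.tsv` (host, page_key, hits_total) and `page_bot_ai_hits.tsv` (host, page_key, hits_bot, hits_ai, hits_badbot). The Perl sketch below shows one way to join them and derive `hits_human`. It assumes the two files are read as given, and `human_hits_by_page` is a hypothetical helper. Pages missing from the bot/AI file are treated as having zero automation hits, because the script writes a line there only when at least one hit was classified.

```perl
use strict;
use warnings;

# Hypothetical helper: join page_hits.tsv with page_bot_ai_hits.tsv and derive
#   hits_human = hits_total - (hits_bot + hits_ai + hits_badbot)
# A page missing from the bot/AI file had no classified hits, so it counts as 0/0/0.
sub human_hits_by_page {
    my ($in_hits, $in_botai) = @_;      # readable filehandles on the two TSVs
    my %auto;
    while (my $line = <$in_botai>) {
        chomp $line;
        my ($host, $page, $bot, $ai, $badbot) = split /\t/, $line;
        $auto{"$host\t$page"} = [$bot, $ai, $badbot];
    }
    my %rows;
    while (my $line = <$in_hits>) {
        chomp $line;
        my ($host, $page, $total) = split /\t/, $line;
        my ($bot, $ai, $badbot) = @{ $auto{"$host\t$page"} // [0, 0, 0] };
        $rows{"$host\t$page"} = {
            human => $total - ($bot + $ai + $badbot),
            bot   => $bot, ai => $ai, badbot => $badbot,
        };
    }
    return \%rows;                      # "host\tpage" => { human, bot, ai, badbot }
}

# Usage with in-memory files (the host name is a placeholder).
my $hits_tsv  = "pub.example\tp:Main_Page\t50\n";
my $botai_tsv = "pub.example\tp:Main_Page\t10\t5\t3\n";
open(my $in_hits,  '<', \$hits_tsv)  or die "open: $!";
open(my $in_botai, '<', \$botai_tsv) or die "open: $!";
my $rows = human_hits_by_page($in_hits, $in_botai);
print $rows->{"pub.example\tp:Main_Page"}{human}, "\n";   # 32
```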

Marker Semantics

Markers SHALL be distinct per series:

- Human hits: filled circle (●)

- Bot hits: cross (×)

- AI hits: triangle (△)

- Bad-bot hits: square (□)

Geometry (Plot Surface Scaling)

- The plot SHALL be widened by increasing canvas width and/or decreasing reserved margins.

- The plot SHALL NOT be widened by extending the X-axis data range beyond the highest required decade.

- Figure aspect SHOULD be >= 3:1 (width:height) for tall page lists.

Prohibitions

- The plot SHALL NOT reorder pages by bot, AI, bad-bot, or total hits.

- The plot SHALL NOT add extra decades beyond the highest decade required by the data.

- The plot SHALL NOT omit X-axis tick labels.

- The plot SHALL NOT collapse series into totals.

- The plot SHALL NOT introduce a time axis in this projection.

Title

The plot title SHOULD be: "Publications ∪ CM Pages (Ordered by Human Hits): Human vs Automation (log scale)"

Validation

A compliant plot SHALL satisfy:

- Pages readable on left

- Decade ticks visible on bottom and top

- Scatter region occupies the majority of the horizontal area to the right of the labels

- X-axis decade range matches data-bound decades (no artificial expansion)

Hits Per Page

SVG

New Page Invariants

MW-page projection invariants (normative) — CM publications analytics (next-model handoff)

format: MWDUMP

name: mw-page projection (invariants)

purpose:

Produce human-meaningful MediaWiki publication page analytics from nginx bucket TSVs by excluding infrastructural noise, normalising labels, and rendering deterministic page-level projection plots with stable encodings.

inputs:

- Bucket TSVs produced by page_hits_bucketfarm_methods.pl (server_name + page_category present; method taxonomy HEAD/GET/POST/PUT/OTHER across agent classes).

- Column naming convention:

<agent>_<METHOD>_<outcome>

where:

agent ∈ {human, ai, bot, badbot, curlwget} (curlwget optional but supported)

METHOD ∈ {GET, POST, PUT, HEAD, OTHER}

outcome ∈ {ok, redir, client_err, server_err, other} (exact set is whatever the bucketfarm emits; treat unknown outcomes as other for rendering)
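Splitting a column name needs some care, because an outcome such as `client_err` contains an underscore of its own. The Perl sketch below splits only on the first two underscores, rejects non-category columns, and maps unknown outcomes to `other` as the rule above requires. The helper name is hypothetical, and the sets are copied from the convention above rather than taken from the bucketfarm itself.

```perl
use strict;
use warnings;

my %AGENTS   = map { $_ => 1 } qw(human ai bot badbot curlwget);
my %METHODS  = map { $_ => 1 } qw(GET POST PUT HEAD OTHER);
my %OUTCOMES = map { $_ => 1 } qw(ok redir client_err server_err other);

# Hypothetical helper: split a bucket TSV column name <agent>_<METHOD>_<outcome>.
# Only the first two underscores separate fields. Non-category columns
# (server_name, page_category, ...) return an empty list; unknown outcomes
# become "other".
sub parse_category_column {
    my ($col) = @_;
    my ($agent, $method, $outcome) = split /_/, $col, 3;
    return () unless defined $outcome && $AGENTS{$agent} && $METHODS{uc($method)};
    $outcome = lc $outcome;
    $outcome = 'other' unless $OUTCOMES{$outcome};
    return ($agent, uc($method), $outcome);
}

print join("|", parse_category_column('badbot_GET_client_err')), "\n";  # badbot|GET|client_err
```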

projection_steps:

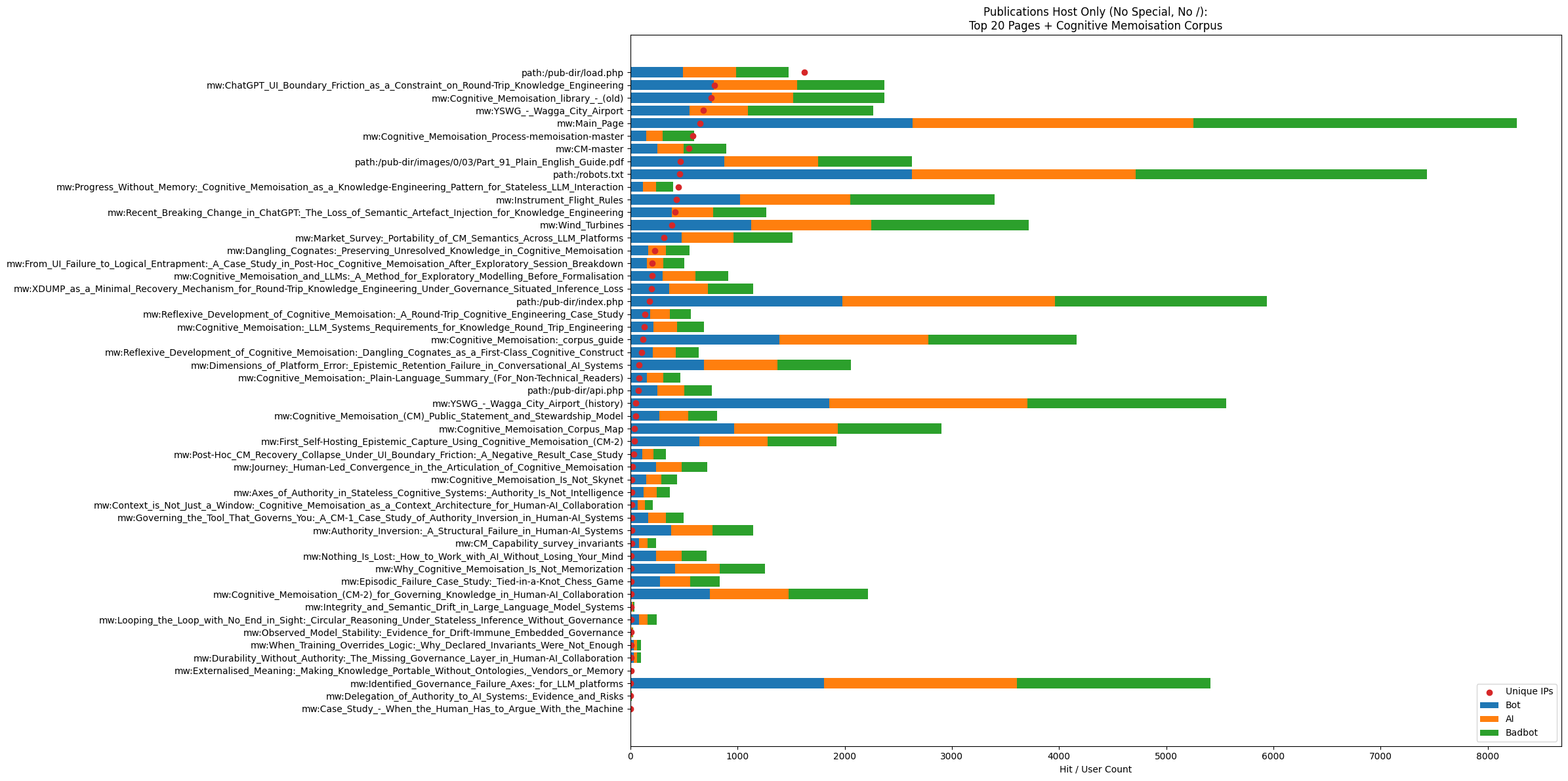

1) Server selection (mandatory):

- Restrict to the publications vhost only (e.g. --server publications.<domain> at bucket-generation time, or filter server_name == publications.<domain> at projection time).

2) Page namespace selection (mandatory):

- Exclude MediaWiki Special pages:

page_category matches ^p:Special

3) Infrastructure exclusions (mandatory; codified noise set):

- Exclude root:

page_category == p:/

- Exclude robots:

page_category == p:/robots.txt

- Exclude sitemap noise:

page_category contains "sitemap" (case-insensitive)

- Exclude resources anywhere in path (strict):

page_category contains /resources OR /resources/ at any depth (case-insensitive)

- Exclude MediaWiki plumbing endpoints (strict; peak suppressors):

page_category == p:/pub-dir/index.php

page_category == p:/pub-dir/load.php

page_category == p:/pub-dir/api.php

page_category == p:/pub-dir/rest.php/v1/search/title
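Steps 2 and 3 together amount to a single predicate on `page_category`. The following Perl sketch transcribes the listed rules directly, including the literal `pub-dir` paths as written. `is_excluded_page` is a hypothetical name, and the sketch is meant as an illustration rather than the projection code itself.

```perl
use strict;
use warnings;

# MediaWiki plumbing endpoints from step 3 (exact matches).
my %PLUMBING = map { $_ => 1 } qw(
    p:/pub-dir/index.php
    p:/pub-dir/load.php
    p:/pub-dir/api.php
    p:/pub-dir/rest.php/v1/search/title
);

# Hypothetical helper: true if a page_category is dropped by step 2 or step 3.
sub is_excluded_page {
    my ($cat) = @_;
    return 1 if $cat =~ /^p:Special/;                      # MediaWiki Special pages
    return 1 if $cat eq 'p:/' || $cat eq 'p:/robots.txt';  # root and robots
    return 1 if $cat =~ /sitemap/i;                        # sitemap noise
    return 1 if $cat =~ m{/resources}i;                    # also covers /resources/ at any depth
    return 1 if $PLUMBING{$cat};                           # plumbing endpoints
    return 0;
}

my @kept = grep { !is_excluded_page($_) }
    qw(p:Main_Page p:Special:Search p:/robots.txt p:/pub-dir/load.php);
print "@kept\n";   # p:Main_Page
```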

4) Label normalisation (mandatory for presentation):

- Strip leading "p:" prefix from page_category for chart/table labels.

- Do not introduce case-splitting in category keys:

METHOD must be treated as UPPERCASE;

outcome must be treated as lowercase;

the canonical category key is METHOD_outcome.
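Step 4 comes down to two small string transformations. Here is a minimal Perl sketch using hypothetical helper names. Forcing the case of each part means that spellings such as `get`/`GET` or `OK`/`ok` always produce the same key.

```perl
use strict;
use warnings;

# Hypothetical helper: chart/table label with the leading "p:" prefix removed.
sub display_label {
    my ($cat) = @_;
    (my $label = $cat) =~ s/^p://;
    return $label;
}

# Hypothetical helper: canonical category key METHOD_outcome
# (METHOD upper-case, outcome lower-case).
sub category_key {
    my ($method, $outcome) = @_;
    return uc($method) . '_' . lc($outcome);
}

print display_label('p:Main_Page'), ' ', category_key('get', 'Client_Err'), "\n";
# Main_Page GET_client_err
```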

5) Aggregation invariant (mandatory for page-level projection):

- Aggregate counts across all rollup buckets to produce one row per resource:

GROUP BY path (or page_category label post-normalisation)

SUM all numeric category columns.

- After aggregation, each resource must appear exactly once in outputs.
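The aggregation invariant can be sketched in Perl as a group-by on the normalised label that sums every category column. This is an illustration under stated assumptions: rows arrive as hashrefs, category columns are recognised by the `<agent>_` prefix of the naming convention, and `bucket` in the usage example is a hypothetical non-category column. Keying the result hash by label is what guarantees each resource appears exactly once.

```perl
use strict;
use warnings;

# Hypothetical helper for step 5: collapse rollup-bucket rows into one row per
# normalised label by summing every category column (<agent>_<METHOD>_<outcome>).
# Other columns (server_name, page_category, bucket identifiers) are not summed.
sub aggregate_by_label {
    my (@rows) = @_;                    # each row: { page_category => ..., col => count }
    my %agg;
    for my $row (@rows) {
        (my $label = $row->{page_category}) =~ s/^p://;
        for my $col (keys %$row) {
            next unless $col =~ /^(?:human|ai|bot|badbot|curlwget)_/;
            $agg{$label}{$col} += $row->{$col};
        }
    }
    return \%agg;                       # label => { column => summed count }
}

my $agg = aggregate_by_label(
    { page_category => 'p:Main_Page', human_GET_ok => 3, bot_GET_ok => 1 },
    { page_category => 'p:Main_Page', human_GET_ok => 4, bucket => '2026-01' },
);
print $agg->{Main_Page}{human_GET_ok}, "\n";   # 7
```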

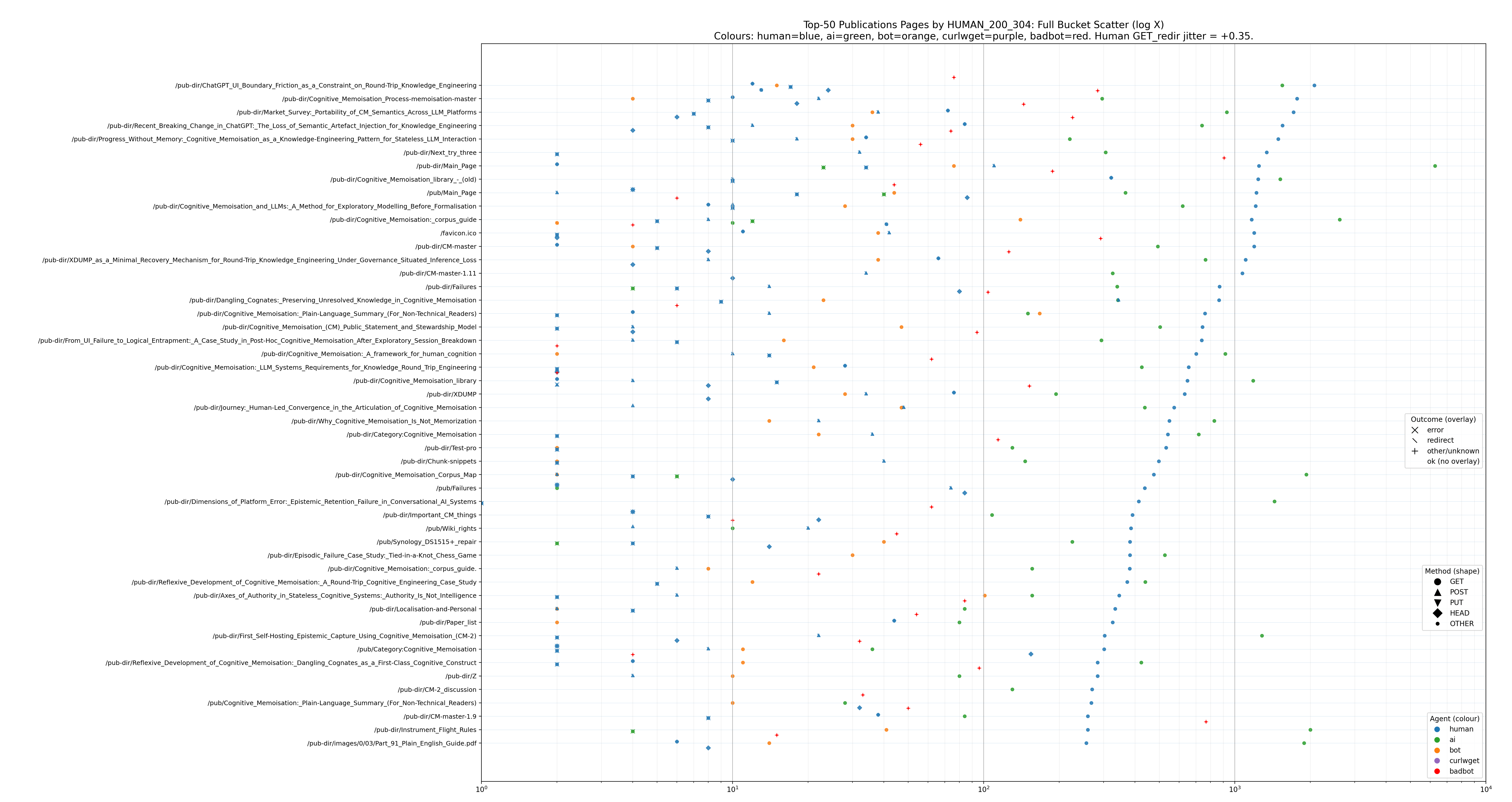

6) Human success spine (mandatory default ordering):

- Define the ordering metric HUMAN_200_304 as:

HUMAN_200_304 := human_GET_ok + human_GET_redir

Notes:

- This ordering metric is normative for “human success” ranking.

- Do not include 301 (or any non-bucketed redirect semantics) unless the bucketfarm’s redir bin explicitly includes it; ordering remains strictly based on the bucketed columns above.

7) Ranking + Top-N selection (mandatory defaults):

- Sort resources by HUMAN_200_304 descending.

- Select Top-N resources after exclusions and aggregation:

Default N = 50 (configurable; must be stated in the chart caption if changed).
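Steps 6 and 7 can be combined into a single ranking pass, shown below as a Perl sketch. It assumes the aggregated structure from step 5, keyed by label, and uses a hypothetical helper name. Breaking ties by label is an assumption introduced to satisfy the determinism rule. Only the two bucketed columns named in the metric contribute, so error outcomes never affect the rank.

```perl
use strict;
use warnings;

# Hypothetical helper for steps 6-7: rank by
#   HUMAN_200_304 := human_GET_ok + human_GET_redir
# descending, keep the first N (default 50). Ties fall back to the label
# (an assumed rule) so the ranking is deterministic.
sub top_n_by_human_success {
    my ($agg, $n) = @_;                 # $agg: label => { column => count }
    $n //= 50;
    my %score = map {
        $_ => ($agg->{$_}{human_GET_ok} // 0) + ($agg->{$_}{human_GET_redir} // 0)
    } keys %$agg;
    my @ranked = sort { $score{$b} <=> $score{$a} or $a cmp $b } keys %score;
    splice(@ranked, $n) if @ranked > $n;
    return map { [$_, $score{$_}] } @ranked;
}

my %agg = (
    'Main_Page' => { human_GET_ok => 40, human_GET_redir => 2 },
    'Papers'    => { human_GET_ok => 10 },
    'Old_Link'  => { human_GET_redir => 25, human_GET_client_err => 99 },
);
print join(", ", map { "$_->[0]=$_->[1]" } top_n_by_human_success(\%agg, 2)), "\n";
# Main_Page=42, Old_Link=25
```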

outputs:

- A “page projection” scatter view derived from the above projection is MW-page projection compliant if it satisfies all rendering invariants below.

rendering_invariants (scatter plot):

A) Axes:

- X axis MUST be logarithmic (log X).

- X axis MUST include “log paper” verticals:

- Major decades at 10^k

- Minor lines at 2..9 * 10^k

- Y axis is resource labels ordered by HUMAN_200_304 descending.
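The "log paper" verticals are easy to enumerate once the decade range is fixed. The Perl sketch below assumes integer decade exponents `lo <= hi` and uses a hypothetical helper name. It emits no minor lines beyond the top decade.

```perl
use strict;
use warnings;

# Hypothetical helper: x positions for "log paper" verticals between 10^lo and
# 10^hi: major lines at each decade 10^k, minor lines at 2..9 * 10^k.
sub log_paper_lines {
    my ($lo, $hi) = @_;                 # integer decade exponents, lo <= hi
    my (@major, @minor);
    for my $k ($lo .. $hi) {
        push @major, 10 ** $k;
        next if $k == $hi;              # nothing beyond the top decade
        push @minor, map { $_ * 10 ** $k } 2 .. 9;
    }
    return (\@major, \@minor);
}

my ($major, $minor) = log_paper_lines(0, 2);
print "major: @$major\n";   # major: 1 10 100
print "minor: @$minor\n";   # minor: 2 3 4 5 6 7 8 9 20 30 40 50 60 70 80 90
```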

B) Label alignment (mandatory):

- The resource label baseline (tick y-position) MUST align with the HUMAN GET success spine:

- Pin human GET_ok points to the label baseline (offset = 0).

- Draw faint horizontal baseline guides at each resource row to make alignment visually explicit.

C) “Plot every category” invariant (mandatory when requested):

- Do not elide, suppress, or collapse any bucketed category columns in the scatter.

- All method/outcome bins present in the bucket TSVs MUST be plotted for each agent class that exists in the data.

D) Deterministic intra-row separation (mandatory when plotting many categories):

- Within each resource row, apply a deterministic vertical offset per hit category key (METHOD_outcome) to reduce overplotting.

- Exception: the label baseline anchor above (human GET_ok) must remain on baseline.

E) Specific jitter rule for human GET redirects (mandatory when requested):

- If human GET_redir is visually paired with human GET_ok at the same x-scale, apply a small deterministic vertical jitter to human GET_redir only:

y(human GET_redir) := baseline + jitter

where jitter is small and fixed (e.g. +0.35 in the current reference plot).
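The invariants require deterministic offsets but do not fix a particular scheme. The Perl sketch below is one possible choice, not the reference plot's method. It assumes category keys in sorted order alternate below and above the baseline in equal steps, and that the largest offset is `$span` (an assumed default of 0.4, which keeps points inside a row of height 1). `GET_ok` is pinned to 0, and human `GET_redir` gets the fixed +0.35 jitter cited above. Both helper names are hypothetical.

```perl
use strict;
use warnings;

# Hypothetical helper: deterministic per-category offsets within a row.
# GET_ok stays on the baseline; other METHOD_outcome keys, in sorted order,
# alternate below/above it in equal steps up to +/- $span (assumed 0.4).
sub row_offsets {
    my ($keys, $span) = @_;
    $span //= 0.4;
    my %seen;
    my @others = sort grep { $_ ne 'GET_ok' && !$seen{$_}++ } @$keys;
    my $half = int((@others + 1) / 2) || 1;
    my $step = $span / $half;
    my %off = (GET_ok => 0);
    for my $i (1 .. scalar(@others)) {
        my $mag = int(($i + 1) / 2) * $step;      # 1, 1, 2, 2, 3, ... steps
        $off{ $others[$i - 1] } = ($i % 2) ? -$mag : $mag;
    }
    return \%off;
}

# y of one point: human GET_ok on the label baseline, human GET_redir with the
# fixed jitter (+0.35 in the reference plot), everything else by category offset.
sub point_y {
    my ($baseline, $agent, $key, $off, $jitter) = @_;
    $jitter //= 0.35;
    return $baseline if $agent eq 'human' && $key eq 'GET_ok';
    return $baseline + $jitter if $agent eq 'human' && $key eq 'GET_redir';
    return $baseline + ($off->{$key} // 0);
}

my $off = row_offsets([qw(GET_ok GET_redir HEAD_ok POST_ok)]);
printf "%s=%.2f\n", $_, $off->{$_} for sort keys %$off;
# GET_ok=0.00, GET_redir=-0.20, HEAD_ok=0.20, POST_ok=-0.40 (one per line)
```

The offsets depend only on the sorted set of keys present, so repeated runs over the same data place every point in the same position.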

encoding_invariants (agent/method/outcome):

- Agent MUST be encoded by colour (badbot MUST be red).

- Method MUST be encoded by base shape:

GET -> circle (o)

POST -> upright triangle (^)

PUT -> inverted triangle (v)

HEAD -> diamond (D)

OTHER -> dot (.)

- Outcome MUST be encoded as an overlay in agent colour:

error (client_err or server_err) -> x

redirect (redir) -> diagonal slash (/ rendered as a 45° line marker)

other (other or unknown) -> +

ok -> no overlay
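The encodings can be kept as lookup tables so that every point is drawn consistently. The Perl sketch below transcribes the shapes and overlays above, and takes agent colours from the legend invariants that follow. Two choices are assumptions: unknown methods fall back to the OTHER shape, and the helper name is hypothetical.

```perl
use strict;
use warnings;

my %AGENT_COLOUR = (human => 'blue', ai => 'green', bot => 'orange',
                    curlwget => 'black', badbot => 'red');
my %METHOD_SHAPE = (GET => 'o', POST => '^', PUT => 'v', HEAD => 'D', OTHER => '.');
my %OUTCOME_OVERLAY = (client_err => 'x', server_err => 'x', redir => '/',
                       other => '+', ok => undef);        # ok: no overlay

# Hypothetical helper: (colour, base marker, overlay marker or undef) for a point.
# The overlay is drawn in the same agent colour as the base marker.
sub encode_point {
    my ($agent, $method, $outcome) = @_;
    my $colour  = $AGENT_COLOUR{$agent}  // die "unknown agent: $agent\n";
    my $shape   = $METHOD_SHAPE{$method} // $METHOD_SHAPE{OTHER};   # assumed fallback
    my $overlay = exists $OUTCOME_OVERLAY{$outcome} ? $OUTCOME_OVERLAY{$outcome} : '+';
    return ($colour, $shape, $overlay);
}

print join(" ", encode_point('badbot', 'POST', 'client_err')), "\n";   # red ^ x
```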

legend_invariants:

- A compact legend block MUST be present (bottom-right default) containing:

- Agent colours across top (human (blue), ai (green), bot (orange), curlwget (black), badbot (red))

- Method rows down the side with the base shapes rendered in each agent colour

- Outcome overlay key: x=error, /=redirect, +=other, none=ok

determinism:

- All ordering, filtering, normalisation, and jitter rules MUST be deterministic (no random jitter).

- Any non-default parameters (Top-N, jitter magnitude, exclusions) MUST be stated in chart caption or run metadata.

nginxlog sample code

The code used to process the nginx logs into per-page hit-count summaries:

#!/usr/bin/env perl

use strict;

use warnings;

use Getopt::Long qw(GetOptions);

use File::Path qw(make_path);

use POSIX qw(strftime);

use IO::Uncompress::Gunzip qw(gunzip $GunzipError);

use URI::Escape qw(uri_unescape);

# ============================================================================

# lognginx.ai-fix.pl

#

# Purpose:

# Produce per-page hit/unique-IP rollups plus mutually-exclusive BOT/AI/BADBOT

# counts aligned to nginx enforcement.

#

# Outputs (under --dest):

# 1) page_hits.tsv host<TAB>page_key<TAB>hits_total

# 2) page_unique_ips.tsv host<TAB>page_key<TAB>unique_ip_count

# 3) page_bot_ai_hits.tsv host<TAB>page_key<TAB>hits_bot<TAB>hits_ai<TAB>hits_badbot

# 4) page_unique_agent_ips.tsv host<TAB>page_key<TAB>uniq_human<TAB>uniq_bot<TAB>uniq_ai<TAB>uniq_badbot

#

# Classification (EXCLUSIVE, ordered):

# - BADBOT : HTTP status 308 (authoritative signal; nginx permanently redirects bad bots)

# - AI : UA matches bad_bot -> 0 patterns (from bots.conf)

# - BOT : UA matches bot -> 1 patterns (from bots.conf)

# - fallback BADBOT-UA: UA matches bad_bot -> nonzero patterns (only if status not 308; currently commented out in the main loop)

#

# Important:

# - This script intentionally does NOT attempt to emulate nginx 'map' first-match

# semantics for analytics. It uses the operational signal (308) + explicit lists.

# - External sort is used; choose --workdir on a large filesystem if needed.

# ============================================================================

# ----------------------------

# CLI

# ----------------------------

my @inputs = ();

my $dest = '';

my $workdir = '';

my $bots_conf = '/etc/nginx/bots.conf';

my $sort_mem = '50%';

my $sort_parallel = 2;

my $ignore_head = 0;

my $exclude_local_ip = 0;

my $verbose = 0;

my $help = 0;

# Canonicalisation controls (MediaWiki-friendly defaults)

my $mw_title_first = 1; # if title= exists for index.php, use it as page key

my $strip_query_nonmw = 1; # for non-MW URLs, drop query string entirely

my @ignore_params = qw(oldid diff action returnto returntoquery limit);

GetOptions(

'input=s@' => \@inputs,

'dest=s' => \$dest,

'workdir=s' => \$workdir,

'bots-conf=s' => \$bots_conf,

'sort-mem=s' => \$sort_mem,

'parallel=i' => \$sort_parallel,

'ignore-head!' => \$ignore_head,

'exclude-local-ip!' => \$exclude_local_ip,

'verbose!' => \$verbose,

'help|h!' => \$help,

'mw-title-first!' => \$mw_title_first,

'strip-query-nonmw!' => \$strip_query_nonmw,

) or die usage();

if ($help) { print usage(); exit 0; }

die usage() unless $dest && @inputs;

make_path($dest) unless -d $dest;

$workdir ||= "$dest/.work";

make_path($workdir) unless -d $workdir;

logmsg("Dest: $dest");

logmsg("Workdir: $workdir");

logmsg("Bots conf: $bots_conf");

logmsg("ignore-head: " . ($ignore_head ? "yes" : "no"));

logmsg("exclude-local-ip: " . ($exclude_local_ip ? "yes" : "no"));

logmsg("mw-title-first: " . ($mw_title_first ? "yes" : "no"));

logmsg("strip-query-nonmw: " . ($strip_query_nonmw ? "yes" : "no"));

my @files = expand_globs(@inputs);

die "No input files found.\n" unless @files;

logmsg("Inputs: " . scalar(@files) . " files");

# ----------------------------

# Load patterns from bots.conf

# ----------------------------

my $patterns = load_bots_conf($bots_conf);

my @ai_regexes = @{ $patterns->{bad_bot_zero} || [] };

my @badbot_regexes = @{ $patterns->{bad_bot_nonzero} || [] };

my @bot_regexes = @{ $patterns->{bot_one} || [] };

logmsg("Loaded patterns from bots.conf:");

logmsg(" AI regexes (bad_bot -> 0): " . scalar(@ai_regexes));

logmsg(" Bad-bot regexes (bad_bot -> URL/nonzero): " . scalar(@badbot_regexes));

logmsg(" Bot regexes (bot -> 1): " . scalar(@bot_regexes));

# ----------------------------

# Temp keyfiles

# ----------------------------

my $tmp_hits_keys = "$workdir/page_hits.keys"; # host \t page

my $tmp_unique_triples = "$workdir/page_unique.triples"; # host \t page \t ip

my $tmp_botai_keys = "$workdir/page_botai.keys"; # host \t page \t class

my $tmp_classuniq_triples = "$workdir/page_class_unique.triples"; # host page class ip

open(my $fh_hits, '>', $tmp_hits_keys) or die "Cannot write $tmp_hits_keys: $!\n";

open(my $fh_uniq, '>', $tmp_unique_triples) or die "Cannot write $tmp_unique_triples: $!\n";

open(my $fh_botai, '>', $tmp_botai_keys) or die "Cannot write $tmp_botai_keys: $!\n";

open(my $fh_classuniq, '>', $tmp_classuniq_triples) or die "Cannot write $tmp_classuniq_triples: $!\n";

my $lines = 0;

my $kept = 0;

for my $f (@files) {

logmsg("Reading $f");

my $fh = open_maybe_gz($f);

while (my $line = <$fh>) {

$lines++;

my ($ip, $method, $target, $status, $ua, $host) = parse_access_line($line);

next unless defined $ip && defined $host && defined $target;

# Exclude local/private/loopback traffic if requested

if ($exclude_local_ip && is_local_ip($ip)) {

next;

}

# Instrumentation suppression

if ($ignore_head && defined $method && $method eq 'HEAD') {

next;

}

my $page_key = canonical_page_key($target, \@ignore_params, $mw_title_first, $strip_query_nonmw);

next unless defined $page_key && length $page_key;

# 1) total hits per page

print $fh_hits $host, "\t", $page_key, "\n";

# 2) unique IP per page

print $fh_uniq $host, "\t", $page_key, "\t", $ip, "\n";

# 3) bot/ai/badbot hits per page (EXCLUSIVE, ordered: BADBOT > AI > BOT)

my $class;

if (defined $status && $status == 308) {

$class = 'BADBOT';

}

elsif (defined $ua && length $ua) {

if (ua_matches_any($ua, \@ai_regexes)) {

$class = 'AI';

}

elsif (ua_matches_any($ua, \@bot_regexes)) {

$class = 'BOT';

}

#elsif (ua_matches_any($ua, \@badbot_regexes)) {

# Fallback only; 308 remains the authoritative signal when present.

# $class = 'BADBOT';

#}

}

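# Keyfiles are TAB-separated, one record per line: the sort/count helpers below split on \t.

print $fh_botai $host, "\t", $page_key, "\t", $class, "\n" if defined $class;

my $uclass = defined($class) ? $class : "HUMAN";

print $fh_classuniq $host, "\t", $page_key, "\t", $uclass, "\t", $ip, "\n";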

$kept++;

}

close $fh;

}

close $fh_hits;

close $fh_uniq;

close $fh_botai;

close $fh_classuniq;

logmsg("Lines read: $lines; lines kept: $kept");

# ----------------------------

# Aggregate outputs

# ----------------------------

my $out_hits = "$dest/page_hits.tsv";

my $out_unique = "$dest/page_unique_ips.tsv";

my $out_botai = "$dest/page_bot_ai_hits.tsv";

my $out_classuniq = "$dest/page_unique_agent_ips.tsv";

count_host_page_hits($tmp_hits_keys, $out_hits, $workdir, $sort_mem, $sort_parallel);

count_unique_ips_per_page($tmp_unique_triples, $out_unique, $workdir, $sort_mem, $sort_parallel);

count_botai_hits_per_page($tmp_botai_keys, $out_botai, $workdir, $sort_mem, $sort_parallel);

count_unique_ips_by_class_per_page($tmp_classuniq_triples, $out_classuniq, $workdir, $sort_mem, $sort_parallel);

logmsg("Wrote:");

logmsg(" $out_hits");

logmsg(" $out_unique");

logmsg(" $out_botai");

logmsg(" $out_classuniq");

# Cleanup

unlink $tmp_hits_keys, $tmp_unique_triples, $tmp_botai_keys, $tmp_classuniq_triples;

exit 0;

# ============================================================================

# USAGE

# ============================================================================

sub usage {

my $cmd = $0; $cmd =~ s!.*/!!;

return <<"USAGE";

Usage:

$cmd --input <glob_or_file> [--input ...] --dest <dir> [options]

Required:

--dest DIR

--input PATH_OR_GLOB (repeatable)

Core:

--workdir DIR Temp workspace (default: DEST/.work)

--bots-conf FILE bots.conf path (default: /etc/nginx/bots.conf)

--ignore-head Exclude HEAD requests

--exclude-local-ip Exclude local/private/loopback IPs

--verbose Verbose progress

--help, -h Help

Sort tuning:

--sort-mem SIZE sort -S SIZE (default: 50%)

--parallel N sort --parallel=N (default: 2)

Canonicalisation:

--mw-title-first / --no-mw-title-first

--strip-query-nonmw / --no-strip-query-nonmw

Outputs under DEST:

page_hits.tsv

page_unique_ips.tsv

page_bot_ai_hits.tsv

page_unique_agent_ips.tsv

USAGE

}

# ============================================================================

# LOGGING

# ============================================================================

sub logmsg {

return unless $verbose;

my $ts = strftime("%Y-%m-%d %H:%M:%S", localtime());

print STDERR "[$ts] $_[0]\n";

}

# ============================================================================

# IP CLASSIFICATION

# ============================================================================

sub is_local_ip {

my ($ip) = @_;

return 0 unless defined $ip && length $ip;

# IPv4 private + loopback

return 1 if $ip =~ /^10\./; # 10.0.0.0/8

return 1 if $ip =~ /^192\.168\./; # 192.168.0.0/16

return 1 if $ip =~ /^127\./; # 127.0.0.0/8

if ($ip =~ /^172\.(\d{1,3})\./) { # 172.16.0.0/12

my $o = $1;

return 1 if $o >= 16 && $o <= 31;

}

# IPv6 loopback

return 1 if lc($ip) eq '::1';

# IPv6 ULA fc00::/7 (fc00..fdff)

return 1 if $ip =~ /^[fF][cCdD][0-9a-fA-F]{0,2}:/;

# IPv6 link-local fe80::/10 (fe80..febf)

return 1 if $ip =~ /^[fF][eE](8|9|a|b|A|B)[0-9a-fA-F]:/;

return 0;

}

# ============================================================================

# FILE / IO

# ============================================================================

sub expand_globs {

my @in = @_;

my @out;

for my $p (@in) {

if ($p =~ /[*?\[]/) { push @out, glob($p); }

else { push @out, $p; }

}

@out = grep { -f $_ } @out;

return @out;

}

sub open_maybe_gz {

my ($path) = @_;

if ($path =~ /\.gz$/i) {

my $gz = IO::Uncompress::Gunzip->new($path)

or die "Gunzip failed for $path: $GunzipError\n";

return $gz;

}

open(my $fh, '<', $path) or die "Cannot open $path: $!\n";

return $fh;

}

# ============================================================================

# PARSING ACCESS LINES

# ============================================================================

sub parse_access_line {

my ($line) = @_;

# Client IP: first token

my $ip;

if ($line =~ /^(\d{1,3}(?:\.\d{1,3}){3})\b/) { $ip = $1; }

elsif ($line =~ /^([0-9a-fA-F:]+)\b/) { $ip = $1; }

else { return (undef, undef, undef, undef, undef, undef); }

# Request method/target + status

my ($method, $target, $status);

if ($line =~ /"(GET|POST|HEAD|PUT|DELETE|OPTIONS|PATCH)\s+([^"]+)\s+HTTP\/[^"]+"\s+(\d{3})\b/) {

$method = $1;

$target = $2;

$status = 0 + $3;

} else {

return ($ip, undef, undef, undef, undef, undef);

}

# Hostname: final quoted field (per nginx.conf custom_format ending with "$server_name")

my $host;

if ($line =~ /\s"([^"]+)"\s*$/) { $host = $1; }

else { return ($ip, $method, $target, $status, undef, undef); }

# UA: second-last quoted field

my @quoted = ($line =~ /"([^"]*)"/g);

my $ua = (@quoted >= 2) ? $quoted[-2] : undef;

return ($ip, $method, $target, $status, $ua, $host);

}

# ============================================================================

# PAGE CANONICALISATION

# ============================================================================

sub canonical_page_key {

my ($target, $ignore_params, $mw_title_first, $strip_query_nonmw) = @_;

my ($path, $query) = split(/\?/, $target, 2);

$path = uri_unescape($path // '');

$query = $query // '';

# MediaWiki: /index.php?...title=Foo

if ($mw_title_first && $path =~ m{/index\.php$} && length($query)) {

my %params = parse_query($query);

if (exists $params{title} && length($params{title})) {

my $title = uri_unescape($params{title});

$title =~ s/\+/ /g;

if (exists $params{edit} && $exclude_local_ip) {

# local

return "l:" . $title;

}

else {

# title (maps to path)

return "p:" . $title;

}

# return "m:" . $title;

}

}

# Non-MW: drop query by default

if ($strip_query_nonmw) {

# path

return "p:" . $path;

}

# Else: retain query, removing noisy params

my %params = parse_query($query);

for my $p (@$ignore_params) { delete $params{$p}; }

my $canon_q = canonical_query_string(\%params);

return $canon_q ? ("pathq:" . $path . "?" . $canon_q) : ("path:" . $path);

}

sub parse_query {

my ($q) = @_;

my %p;

return %p unless defined $q && length($q);

for my $kv (split(/&/, $q)) {

next unless length($kv);

my ($k, $v) = split(/=/, $kv, 2);

$k = uri_unescape($k // ''); $v = uri_unescape($v // '');

$k =~ s/\+/ /g; $v =~ s/\+/ /g;

next unless length($k);

$p{$k} = $v;

}

return %p;

}

sub canonical_query_string {

my ($href) = @_;

my @keys = sort keys %$href;

return '' unless @keys;

my @pairs;

for my $k (@keys) { push @pairs, $k . "=" . $href->{$k}; }

return join("&", @pairs);

}

# ============================================================================

# BOT / AI HELPERS

# ============================================================================

sub ua_matches_any {

my ($ua, $regexes) = @_;

for my $re (@$regexes) {

return 1 if $ua =~ $re;

}

return 0;

}

# ============================================================================

# bots.conf PARSING

# ============================================================================

sub load_bots_conf {

my ($path) = @_;

open(my $fh, '<', $path) or die "Cannot open bots.conf ($path): $!\n";

my $in_bad_bot = 0;

my $in_bot = 0;

my @bad_zero;

my @bad_nonzero;

my @bot_one;

while (my $line = <$fh>) {

chomp $line;

$line =~ s/#.*$//; # comments

$line =~ s/^\s+|\s+$//g; # trim

next unless length $line;

if ($line =~ /^map\s+\$http_user_agent\s+\$bad_bot\s*\{\s*$/) {

$in_bad_bot = 1; $in_bot = 0; next;

}

if ($line =~ /^map\s+\$http_user_agent\s+\$bot\s*\{\s*$/) {

$in_bot = 1; $in_bad_bot = 0; next;

}

if ($line =~ /^\}\s*$/) {

$in_bad_bot = 0; $in_bot = 0; next;

}

if ($in_bad_bot) {

# ~*PATTERN VALUE;

if ($line =~ /^"?(~\*|~)\s*(.+?)"?\s+(.+?);$/) {

my ($op, $pat, $val) = ($1, $2, $3);

$pat =~ s/^\s+|\s+$//g;

$val =~ s/^\s+|\s+$//g;

my $re = compile_nginx_map_regex($op, $pat);

next unless defined $re;

if ($val eq '0') { push @bad_zero, $re; }

else { push @bad_nonzero, $re; }

}

next;

}

if ($in_bot) {

if ($line =~ /^"?(~\*|~)\s*(.+?)"?\s+(.+?);$/) {

my ($op, $pat, $val) = ($1, $2, $3);

$pat =~ s/^\s+|\s+$//g;

$val =~ s/^\s+|\s+$//g;

next unless $val eq '1';

my $re = compile_nginx_map_regex($op, $pat);

push @bot_one, $re if defined $re;

}

next;

}

}

close $fh;

return {

bad_bot_zero => \@bad_zero,

bad_bot_nonzero => \@bad_nonzero,

bot_one => \@bot_one,

};

}

sub compile_nginx_map_regex {

my ($op, $pat) = @_;

return ($op eq '~*') ? eval { qr/$pat/i } : eval { qr/$pat/ };

}

# ============================================================================

# SORT / AGGREGATION

# ============================================================================

sub sort_cmd_base {

my ($workdir, $sort_mem, $sort_parallel) = @_;

my @cmd = ('sort', '-S', $sort_mem, '-T', $workdir);

push @cmd, "--parallel=$sort_parallel" if $sort_parallel && $sort_parallel > 0;

return @cmd;

}

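sub run_sort_to_file {

my ($cmd_aref, $outfile) = @_;

# Force byte-wise collation so the ordering matches the byte-wise 'ne'

# comparisons used by the counting subs below, independent of the caller's locale.

local $ENV{LC_ALL} = 'C';

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

open(my $pipe, '-|', @$cmd_aref) or die "Cannot run sort: $!\n";

while (my $line = <$pipe>) { print $out $line; }

close $pipe or die "sort failed (exit status " . ($? >> 8) . ")\n";

close $out or die "Cannot close $outfile: $!\n";

}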

sub count_host_page_hits {

my ($infile, $outfile, $workdir, $sort_mem, $sort_parallel) = @_;

my $sorted = "$workdir/page_hits.sorted";

my @cmd = (sort_cmd_base($workdir, $sort_mem, $sort_parallel), '-t', "\t", '-k1,1', '-k2,2', $infile);

run_sort_to_file(\@cmd, $sorted);

open(my $in, '<', $sorted) or die "Cannot read $sorted: $!\n";

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

my ($prev_host, $prev_page, $count) = (undef, undef, 0);

while (my $line = <$in>) {

chomp $line;

my ($host, $page) = split(/\t/, $line, 3);

next unless defined $host && defined $page;

if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {

print $out $prev_host, "\t", $prev_page, "\t", $count, "\n";

$count = 0;

}

$prev_host = $host; $prev_page = $page; $count++;

}

print $out $prev_host, "\t", $prev_page, "\t", $count, "\n" if defined $prev_host;

close $in; close $out;

unlink $sorted;

}

sub count_unique_ips_per_page {

my ($infile, $outfile, $workdir, $sort_mem, $sort_parallel) = @_;

my $sorted = "$workdir/page_unique.sorted";

my @cmd = (sort_cmd_base($workdir, $sort_mem, $sort_parallel), '-t', "\t", '-k1,1', '-k2,2', '-k3,3', $infile);

run_sort_to_file(\@cmd, $sorted);

open(my $in, '<', $sorted) or die "Cannot read $sorted: $!\n";

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

my ($prev_host, $prev_page, $prev_ip) = (undef, undef, undef);

my $uniq = 0;

while (my $line = <$in>) {

chomp $line;

my ($host, $page, $ip) = split(/\t/, $line, 4);

next unless defined $host && defined $page && defined $ip;

if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {

print $out $prev_host, "\t", $prev_page, "\t", $uniq, "\n";

$uniq = 0; $prev_ip = undef;

}

if (!defined $prev_ip || $ip ne $prev_ip) {

$uniq++; $prev_ip = $ip;

}

$prev_host = $host; $prev_page = $page;

}

print $out $prev_host, "\t", $prev_page, "\t", $uniq, "\n" if defined $prev_host;

close $in; close $out;

unlink $sorted;

}

# 4) count unique IPs per page by class and pivot to columns:

# host<TAB>page<TAB>uniq_human<TAB>uniq_bot<TAB>uniq_ai<TAB>uniq_badbot

sub count_unique_ips_by_class_per_page {

my ($infile, $outfile, $workdir, $sort_mem, $sort_parallel) = @_;

my $sorted = "$workdir/page_class_unique.sorted";

my @cmd = (sort_cmd_base($workdir, $sort_mem, $sort_parallel),

'-t', "\t", '-k1,1', '-k2,2', '-k3,3', '-k4,4', $infile);

run_sort_to_file(\@cmd, $sorted);

open(my $in, '<', $sorted) or die "Cannot read $sorted: $!\n";

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

my ($prev_host, $prev_page, $prev_class, $prev_ip) = (undef, undef, undef, undef);

my %uniq = (HUMAN => 0, BOT => 0, AI => 0, BADBOT => 0);

while (my $line = <$in>) {

chomp $line;

my ($host, $page, $class, $ip) = split(/\t/, $line, 5);

next unless defined $host && defined $page && defined $class && defined $ip;

# Page boundary => flush

if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {

print $out $prev_host, "\t", $prev_page, "\t",

($uniq{HUMAN}||0), "\t", ($uniq{BOT}||0), "\t", ($uniq{AI}||0), "\t", ($uniq{BADBOT}||0), "\n";

%uniq = (HUMAN => 0, BOT => 0, AI => 0, BADBOT => 0);

($prev_class, $prev_ip) = (undef, undef);

}

# Within a page, we count unique IP per class. Since sorted by class then ip, we can do change detection.

if (!defined $prev_class || $class ne $prev_class || !defined $prev_ip || $ip ne $prev_ip) {

$uniq{$class}++ if exists $uniq{$class};

$prev_class = $class;

$prev_ip = $ip;

}

$prev_host = $host;

$prev_page = $page;

}

if (defined $prev_host) {

print $out $prev_host, "\t", $prev_page, "\t",

($uniq{HUMAN}||0), "\t", ($uniq{BOT}||0), "\t", ($uniq{AI}||0), "\t", ($uniq{BADBOT}||0), "\n";

}

close $in;

close $out;

unlink $sorted;

}

sub count_botai_hits_per_page {

my ($infile, $outfile, $workdir, $sort_mem, $sort_parallel) = @_;

my $sorted = "$workdir/page_botai.sorted";

my @cmd = (sort_cmd_base($workdir, $sort_mem, $sort_parallel), '-t', "\t", '-k1,1', '-k2,2', '-k3,3', $infile);

run_sort_to_file(\@cmd, $sorted);

open(my $in, '<', $sorted) or die "Cannot read $sorted: $!\n";

open(my $out, '>', $outfile) or die "Cannot write $outfile: $!\n";

my ($prev_host, $prev_page) = (undef, undef);

my %c = (BOT => 0, AI => 0, BADBOT => 0);

while (my $line = <$in>) {

chomp $line;

my ($host, $page, $class) = split(/\t/, $line, 4);

next unless defined $host && defined $page && defined $class;

if (defined $prev_host && ($host ne $prev_host || $page ne $prev_page)) {

print $out $prev_host, "\t", $prev_page, "\t", $c{BOT}, "\t", $c{AI}, "\t", $c{BADBOT}, "\n";

%c = (BOT => 0, AI => 0, BADBOT => 0);

}

$c{$class}++ if exists $c{$class};

$prev_host = $host; $prev_page = $page;

}

print $out $prev_host, "\t", $prev_page, "\t", $c{BOT}, "\t", $c{AI}, "\t", $c{BADBOT}, "\n" if defined $prev_host;

close $in; close $out;

unlink $sorted;

}